Introduction

Enterprises process millions of documents every day, and a question keeps coming up:

Can large language models like ChatGPT and Claude finally replace traditional OCR?

On paper, the idea is appealing. LLMs can understand context, reason about content, and handle complex layouts that often trip up classic OCR engines. But when we put that promise to the test, a different reality emerges. Today, LLMs are not a good replacement for OCR. Faster, simpler, and more reliable tools already exist, and they deliver better results at a fraction of the cost.

Our conclusion is clear: traditional OCR remains the most reliable and cost-effective solution for the vast majority of document-processing workloads. LLMs do offer real advantages, but only in specific edge cases. Used indiscriminately, they introduce higher costs, slower processing, and new reliability risks. Used strategically, they can complement OCR, but they shouldn’t replace it.

In this article, we break down when LLMs can help and when they hurt, the practical challenges of using them for OCR (including hallucinations, scalability, and cost), and real-world performance comparisons between ChatGPT 5.2, Claude Sonnet 4.5, and LLMWhisperer (in Unstract). We also explore how hybrid architectures combine OCR’s speed and determinism with LLMs’ reasoning capabilities, and why this approach makes the most sense for enterprises.

Whether you’re designing a document-processing pipeline or evaluating OCR technologies for production use, this analysis will help you choose the right tool for the right job, with clarity, data, and real-world insight.

Understanding Traditional OCR vs. AI OCR

Traditional OCR (Optical Character Recognition) tools, such as Tesseract, PaddleOCR, Azure Document Intelligence, AWS Textract, and Google Document AI, are purpose-built for extracting text from images and documents.

They rely on pattern recognition techniques to identify characters, words, and text structures, converting scanned documents into machine-readable text.

Key strengths of traditional OCR:

- High speed, capable of processing thousands of pages per hour

- Strong accuracy on clean, printed, and well-structured documents

- Very low cost per page, ideal for large-scale processing

- Predictable and deterministic output

- Well-suited for forms, invoices, receipts, and standardized layouts

Traditional OCR performs best on:

- Printed text

- Clear or high-quality scans

- Structured forms and tables

- Documents with consistent formatting

For most enterprise document-processing workflows, traditional OCR remains a reliable and efficient choice.

AI OCR takes a fundamentally different approach. Instead of pattern recognition, large language models use language understanding to interpret document content. This allows them to handle more complex layouts and challenging inputs.

Tesseract OCR is one of the most popular and powerful open-source OCR tools available today.

While Tesseract is an excellent tool for basic OCR tasks, it relies heavily on traditional image processing techniques and pre-trained models that may not perform well with non-standard or complex documents.LLMWhisperer, on the other hand, uses deep learning models that can adapt to the nuances of different writing styles, languages, and document structures.

Potential advantages of AI OCR:

- Better handling of complex or irregular layouts

- Support for multi-column text and nested tables

- Improved tolerance for noisy scans or partial data

- Context-aware extraction and field inference

However, these benefits come with important trade-offs:

- Slower processing times due to model inference

- Significantly higher costs at scale

- Non-deterministic outputs

- Reduced reliability for high-volume workloads

- Not optimized for raw text extraction

LLMs are designed primarily for language understanding tasks, not for the speed and precision required by large-scale document extraction.

The core difference is one of purpose:

- Traditional OCR: Pattern recognition and text extraction

- LLMs: Language understanding and interpretation

Effective document processing requires:

- Fast and accurate extraction first

- Interpretation, enrichment, and structuring second

Using an LLM as a pure OCR engine is like using a Swiss Army knife when you need a scalpel, it may work, but it’s not the right tool. This is why hybrid approaches, which combine OCR’s efficiency with LLM-driven intelligence, often deliver the best overall results.

Key Challenges with AI OCR

One of the most serious risks of using LLMs for OCR is hallucination, the generation of information that does not exist in the source document. Unlike traditional OCR, which either extracts text correctly or fails to recognize it, LLMs can confidently produce plausible but entirely fabricated content. At enterprise scale, this is particularly dangerous.

Key risks of hallucinations include:

- Invented values in financial, legal, or compliance documents

- Subtle, believable errors that pass quality checks

- Increased risk of regulatory violations and legal exposure

- Lower trust in automated document-processing pipelines

Cost and performance are equally significant concerns. Traditional OCR tools typically process documents for pennies per page and can handle thousands of pages per hour. In contrast, LLM-based processing can cost dollars per document and scale poorly.

Economic and performance challenges:

- Cost: Millions of pages can translate to tens of thousands of dollars in LLM usage

- Speed: OCR completes in seconds, while LLM calls may take minutes per document

- Scalability: High-volume batches can stretch from hours to days or weeks

- Operational overhead: API rate limits, retries, and orchestration complexity

LLMs also struggle with long-form documents. As document length increases, maintaining accuracy and contextual consistency becomes harder, often leading to incomplete or inconsistent extraction.

Limitations with long documents:

- Degraded accuracy as context windows fill

- Missed or partially extracted fields

- Inconsistent outputs across document chunks

This is why best-practice systems follow an extraction-first approach:

- Use traditional OCR for fast, reliable text extraction

- Apply LLMs only for interpretation, structuring, or enrichment

- Combine both in a controlled, auditable pipeline

Beyond these core challenges, LLMs introduce additional structural limitations:

- Token limits: Require chunking that can break semantic context

- Non-determinism: Identical inputs may produce different outputs

- Debugging difficulty: Errors are harder to diagnose and reproduce

- Reliability concerns: Unpredictable failures in production systems

For enterprise environments that demand accuracy, scalability, and predictable behavior, these challenges make AI models a poor choice for primary OCR. Instead, they are best used strategically, augmenting traditional OCR rather than replacing it.

Optical Character Recognition (OCR) technology has become indispensable in today’s digital landscape. The effectiveness of OCR software can greatly impact workflows, data accuracy, and overall operational efficiency.

Here are some of the top OCR tools in 2026:

- Tesseract,

- Paddle OCR,

- Azure Document Intelligence

- Amazon Textract

- LLMWhisperer.

Real-World OCR Performance Comparison

Research Methodology

To evaluate how LLMs perform for OCR tasks in real-world scenarios, we conducted a comprehensive comparison of ChatGPT 5.2, Claude Sonnet 4.5, and LLMWhisperer (in Unstract).

Our evaluation included a diverse set of documents spanning varying complexity levels. These documents were chosen to represent typical enterprise use cases.

Each solution was tested using the same extraction requirements to ensure a fair and consistent comparison.

Evaluation metrics included:

- Accuracy: Correctness of extracted text and values

- Completeness: Coverage of all relevant fields and sections

- Reliability: Consistency and stability across multiple documents

The results reveal significant differences in performance, highlighting critical considerations when selecting OCR and document-processing tools.

Why Direct AI OCR Parsing Often Fails

The core problem with sending raw documents directly to LLMs is that these models are designed for text, not for images or complex document structures.

When a PDF or scanned image is sent to ChatGPT or Claude, the model must:

- Interpret the visual layout

- Understand spatial relationships

- Extract text while maintaining context and structure

This multi-step process introduces multiple points of failure:

- Missing entire sections in complex layouts

- Misinterpreting tables and losing relationships between data points

- Confusing multi-column layouts and mixing content from different columns

- Inconsistent extraction of structured data across similar documents

- Hallucinating information when the document quality is poor, or the text is unclear

Technically, this occurs because when LLMs receive raw documents, the visual information is converted into tokens, losing critical spatial and structural cues. For example:

- Tables are flattened into linear text

- Multi-column layouts become jumbled paragraphs

- Visual cues such as borders, spacing, and alignment, which humans rely on to understand structure, are lost

The model then attempts to reconstruct the structure using language understanding alone. While this may work for simple documents, it fails for complex layouts.

Why LLMWhisperer Performs Better

LLMWhisperer is an advanced OCR tool that intelligently parses complex documents. It cleans up noisy scans and faithfully preserves the original layout, delivering data that’s perfectly formatted for LLMs and downstream systems.

By separating extraction from interpretation, LLMWhisperer avoids the fundamental mismatch that causes direct AI parsing to fail.

Performance Results: Side-by-Side Comparison

Let’s examine the results from ChatGPT 5.2, Claude Sonnet 4.5, and Unstract (with LLMWhisperer).

For each example document, we’ll review the outputs from each LLM alongside Unstract’s results.

We’ll highlight any gaps, flaws, and inaccuracies when they occur, as well as note areas where the results are accurate.

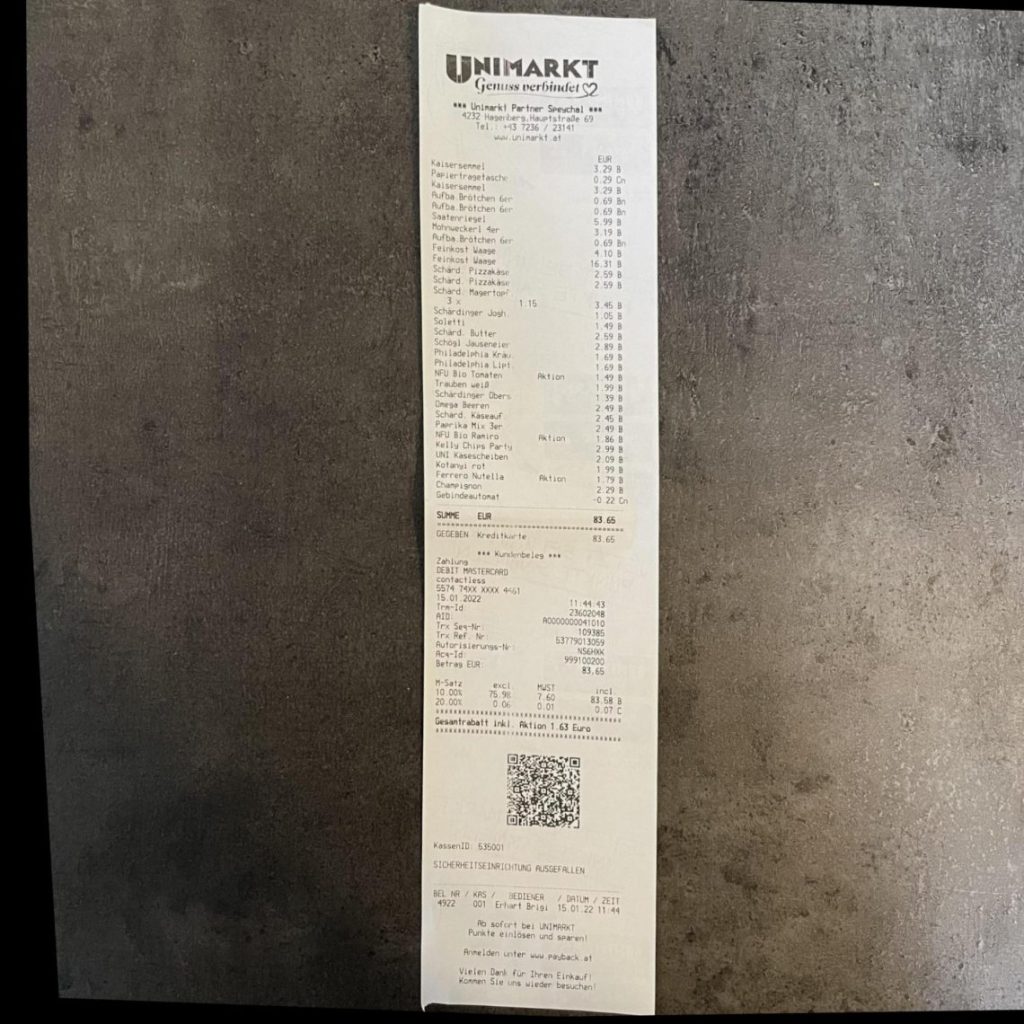

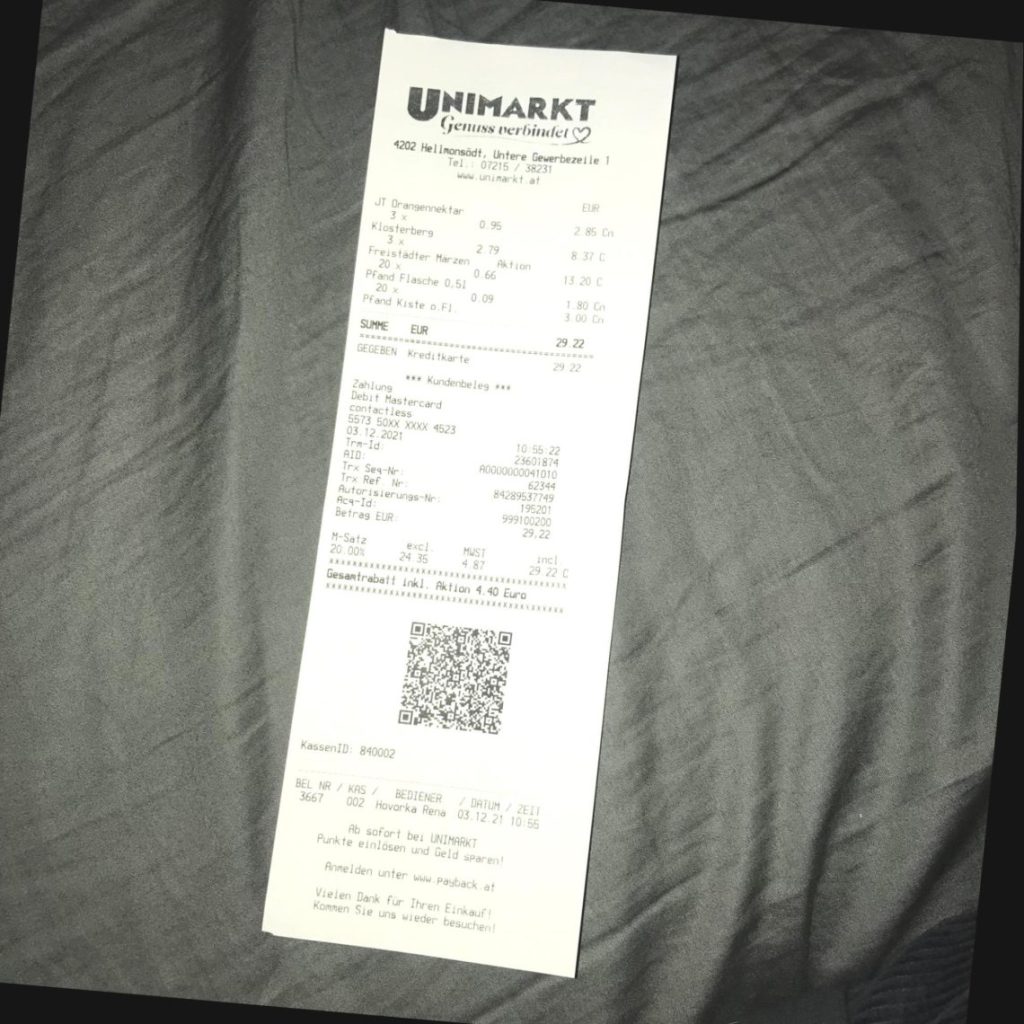

Document 1 – Photo of an Invoice

Chat GPT 5.2:

Let’s use the following prompt:

For this receipt, capture the items purchased, along with their corresponding costs and total.

Capture also the payment method, date of purchase, card number, and transaction ID.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"merchant": "UNIMARKT",

"items": [

{ "name": "Kaisersemmel", "price_eur": 3.29 },

{ "name": "Papiertragetasche", "price_eur": 0.29 },

{ "name": "Rufda Brötchen 6er", "price_eur": 3.29 },

{ "name": "Rufda Brötchen 6er (2)", "price_eur": 3.29 },

{ "name": "Saatenriegel", "price_eur": 0.69 },

{ "name": "Mohnweckerl 4er", "price_eur": 3.19 },

{ "name": "Rufda Brötchen 6er (3)", "price_eur": 4.69 },

{ "name": "Feinkost Waage", "price_eur": 4.1 },

{ "name": "Schärd Pizzakäse", "price_eur": 2.59 },

{ "name": "Schärd Pizzakäse (2)", "price_eur": 2.59 },

{ "name": "Schärd Mayertopf", "price_eur": 3.45 },

{ "name": "Schärdinger Joghurt", "price_eur": 1.05 },

{ "name": "Soletti", "price_eur": 1.49 },

{ "name": "Schärd Butter", "price_eur": 2.59 },

{ "name": "Philadelphia Light", "price_eur": 1.69 },

{ "name": "NFU Bio Tomaten", "price_eur": 1.49 },

{ "name": "Trauben weiß", "price_eur": 1.99 },

{ "name": "Schärdinger Obers", "price_eur": 1.39 },

{ "name": "Omega Beeren", "price_eur": 2.49 },

{ "name": "Schärd Käseauf", "price_eur": 2.45 },

{ "name": "Paprika Mix 3er", "price_eur": 2.49 },

{ "name": "NFU Bio Karotten", "price_eur": 1.86 },

{ "name": "Kelly Chips Party", "price_eur": 2.99 },

{ "name": "UNI Käsescheiben", "price_eur": 2.09 },

{ "name": "Kotanyi rot", "price_eur": 1.99 },

{ "name": "Ferrero Nutella", "price_eur": 1.79 },

{ "name": "Champignon", "price_eur": 2.29 },

{ "name": "Gebindeautomat", "price_eur": -0.22 }

],

"total_eur": 83.65,

"payment": {

"method": "DEBIT MASTERCARD (contactless)",

"card_number": "5579 74XX XXXX 4461",

"transaction_id": "23602048"

},

"date_of_purchase": "2022-01-15",

"time_of_purchase": "11:44:43"

}

Although the overall total is correct, several line items are inaccurate.

“Rufda Brötchen 6er” is listed instead of “Aufba Brötchen 6er”, and the price is incorrect (3.29 instead of 0.69).

Only one “Feinkost Waage” item is included, whereas there should be two entries with different prices.

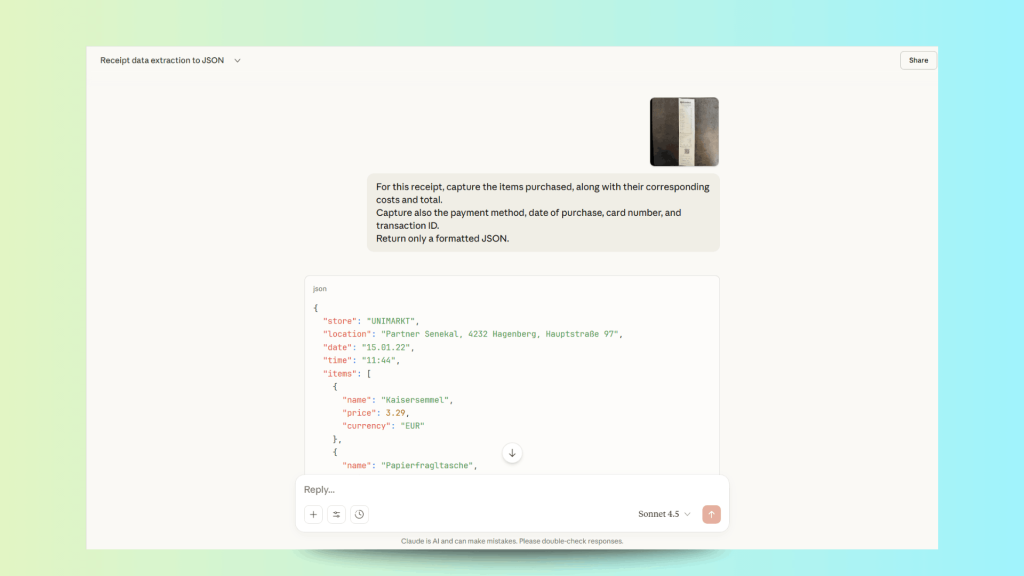

Claude Sonnet 4.5

Let’s use the following prompt:

For this receipt, capture the items purchased, along with their corresponding costs and total.

Capture also the payment method, date of purchase, card number, and transaction ID.

Return only a formatted JSON.

The returned JSON:

{

"store": "UNIMARKT",

"location": "Partner Senekal, 4232 Hagenberg, Hauptstraße 97",

"date": "15.01.22",

"time": "11:44",

"items": [

{

"name": "Kaisersemmel",

"price": 3.29,

"currency": "EUR"

},

{

"name": "Papierfragltasche",

"price": 0.22,

"currency": "EUR"

},

{

"name": "Kaisersemmel",

"price": 3.29,

"currency": "EUR"

},

{

"name": "Aufba-Brötchen 6er",

"price": 0.69,

"currency": "EUR"

},

{

"name": "Aufba-Brötchen 6er",

"price": 0.69,

"currency": "EUR"

},

{

"name": "Saatenriegel",

"price": 5.99,

"currency": "EUR"

},

{

"name": "Kornweckerl 4er",

"price": 1.29,

"currency": "EUR"

},

{

"name": "Aufba-Brötchen 6er",

"price": 0.69,

"currency": "EUR"

},

{

"name": "Fajnkost Waage",

"price": 4.1,

"currency": "EUR"

},

{

"name": "Frischfisch",

"price": 16.31,

"currency": "EUR"

},

{

"name": "Scharü Pizzakäse",

"price": 2.59,

"currency": "EUR"

},

{

"name": "Scharü Pizzakäse",

"price": 2.59,

"currency": "EUR"

},

{

"name": "Scharü Magertopc",

"quantity": 15,

"price": 3.45,

"currency": "EUR"

},

{

"name": "Scharü Bio Jog",

"price": 1.05,

"currency": "EUR"

},

{

"name": "Soletti",

"price": 1.49,

"currency": "EUR"

},

{

"name": "Scharü Butter",

"price": 2.09,

"currency": "EUR"

},

{

"name": "Schögl Jausenwürstchen",

"price": 2.89,

"currency": "EUR"

},

{

"name": "Philadelphia Kräu",

"price": 1.69,

"currency": "EUR"

},

{

"name": "Bio Jausenwürstl",

"price": 1.89,

"currency": "EUR"

},

{

"name": "NFU Bio Tomaten Aktion",

"price": 1.49,

"currency": "EUR"

},

{

"name": "Trauben weiß",

"price": 5.99,

"currency": "EUR"

},

{

"name": "Schärdinger Obers",

"price": 1.39,

"currency": "EUR"

},

{

"name": "Sepia Beugen",

"price": 2.49,

"currency": "EUR"

},

{

"name": "Osttirol Käse pur",

"price": 2.89,

"currency": "EUR"

},

{

"name": "Paprika Mix Ser",

"price": 2.49,

"currency": "EUR"

},

{

"name": "NFU Bio Preirig Aktion",

"price": 1.86,

"currency": "EUR"

},

{

"name": "Bio Kochsahne10%",

"price": 2.99,

"currency": "EUR"

},

{

"name": "UNI Käsescheiben",

"price": 2.09,

"currency": "EUR"

},

{

"name": "Kolangl rot",

"price": 1.59,

"currency": "EUR"

},

{

"name": "Ferrero Nutella Aktion",

"price": 1.79,

"currency": "EUR"

},

{

"name": "Champigno",

"price": 2.29,

"currency": "EUR"

},

{

"name": "Feuerzangenbowle",

"price": -0.22,

"currency": "EUR"

}

],

"subtotal": 83.65,

"currency": "EUR",

"total": 83.65,

"payment_method": "DEBIT MASTERCARD",

"card_number": "**** **** **** 4541",

"transaction_id": "53779103009",

"terminal_id": "23602048",

"trace_number": "ROOO000041010",

"authorization_code": "109305",

"tax_info": {

"rate_20_percent": {

"net": 75.96,

"tax": 7.69,

"gross": 83.58

},

"rate_10_percent": {

"net": 0.06,

"tax": 0.01,

"gross": 0.07

}

},

"cashier_id": "001",

"register_id": "Erhwert Brigi"

}

Even though it appears to do a good job overall, some fields contain incorrect names.

For example, “Papierfragltasche” is used instead of “Papiertragetasche”, and “Kornweckerl 4er” is listed instead of “Mohnweckerl 4er”, with an incorrect price of 1.29 instead of 3.19.

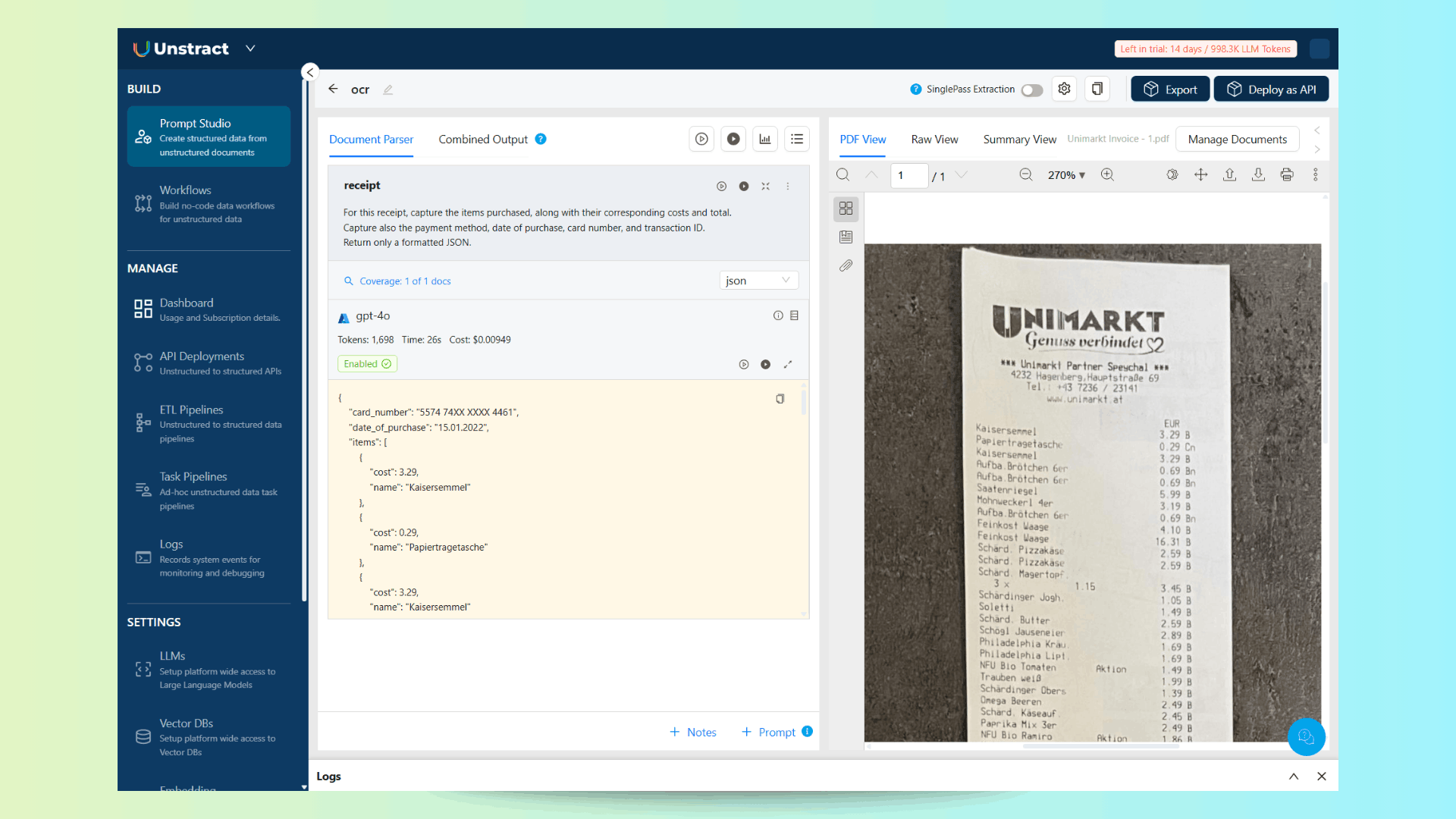

Unstract + LLMWhisperer

Create a Prompt Studio project, add the document, and use the following prompt:

For this receipt, capture the items purchased, along with their corresponding costs and total.

Capture also the payment method, date of purchase, card number, and transaction ID.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"card_number": "5574 74XX XXXX 4461",

"date_of_purchase": "15.01.2022",

"items": [

{

"cost": 3.29,

"name": "Kaisersemmel"

},

{

"cost": 0.29,

"name": "Papiertragetasche"

},

{

"cost": 3.29,

"name": "Kaisersemmel"

},

{

"cost": 0.69,

"name": "Aufba. Brötchen 6er"

},

{

"cost": 0.69,

"name": "Aufba. Brötchen 6er"

},

{

"cost": 5.99,

"name": "Saatenriegel"

},

{

"cost": 3.19,

"name": "Mohnweckerl 4er"

},

{

"cost": 0.69,

"name": "Aufba. Brötchen 6er"

},

{

"cost": 4.1,

"name": "Feinkost Waage"

},

{

"cost": 16.31,

"name": "Feinkost Waage"

},

{

"cost": 2.59,

"name": "Schärd. Pizzakäse"

},

{

"cost": 2.59,

"name": "Schärd. Pizzakäse"

},

{

"cost": 3.45,

"name": "Schärd. Magertopf"

},

{

"cost": 1.05,

"name": "Schärdinger Jogh."

},

{

"cost": 1.49,

"name": "Soletti"

},

{

"cost": 2.59,

"name": "Schärd. Butter"

},

{

"cost": 2.89,

"name": "Schögl Jauseneier"

},

{

"cost": 1.69,

"name": "Philadelphia Kräu"

},

{

"cost": 1.69,

"name": "Philadelphia Lipt."

},

{

"cost": 1.49,

"name": "NFU Bio Tomaten Aktion"

},

{

"cost": 1.99,

"name": "Trauben weiß"

},

{

"cost": 1.39,

"name": "Schärdinger Obers"

},

{

"cost": 2.49,

"name": "Omega Beeren"

},

{

"cost": 2.45,

"name": "Schard, Käseauf"

},

{

"cost": 2.49,

"name": "Paprika Mix 3er"

},

{

"cost": 1.86,

"name": "NFU Bio Ramiro Aktion"

},

{

"cost": 2.99,

"name": "Kelly Chips Party"

},

{

"cost": 2.09,

"name": "UNI Käsescheiben"

},

{

"cost": 1.99,

"name": "Kotanyi rot"

},

{

"cost": 1.79,

"name": "Ferrero Nutella Aktion"

},

{

"cost": 2.29,

"name": "Champignon"

},

{

"cost": -0.22,

"name": "Gebindeautomat"

}

],

"payment_method": "Kreditkarte",

"total": 83.65,

"transaction_id": "53779013059"

}

All fields were correctly identified, including those that the LLMs misspelled, and multiple occurrences of items were accurately captured with their corresponding prices.

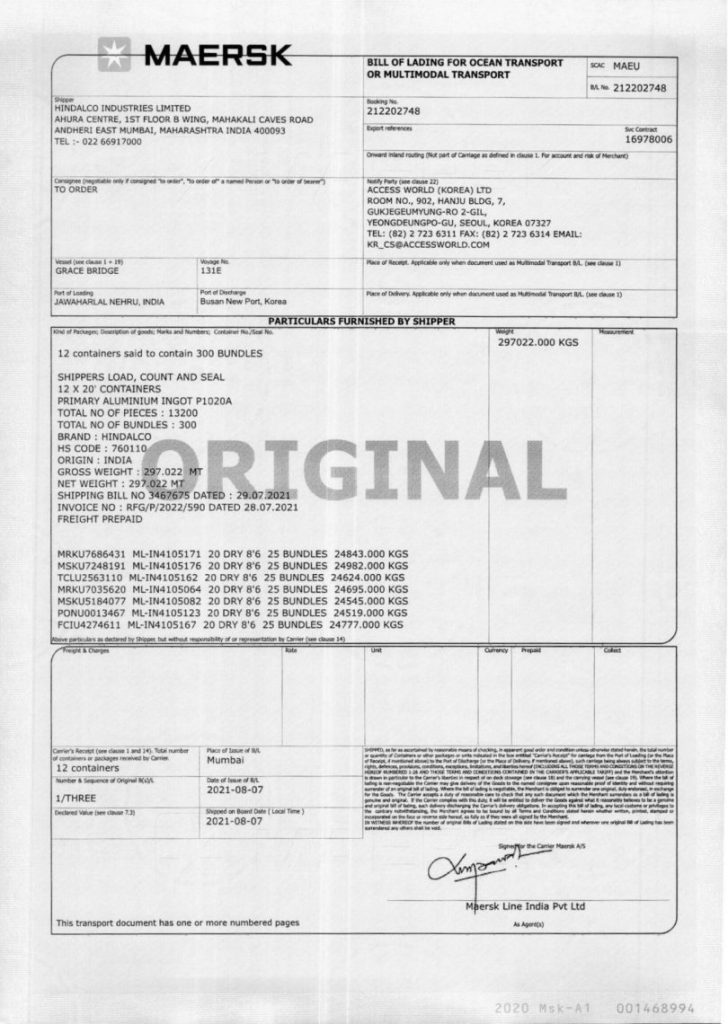

Document 2 – Scan of Shipping Goods

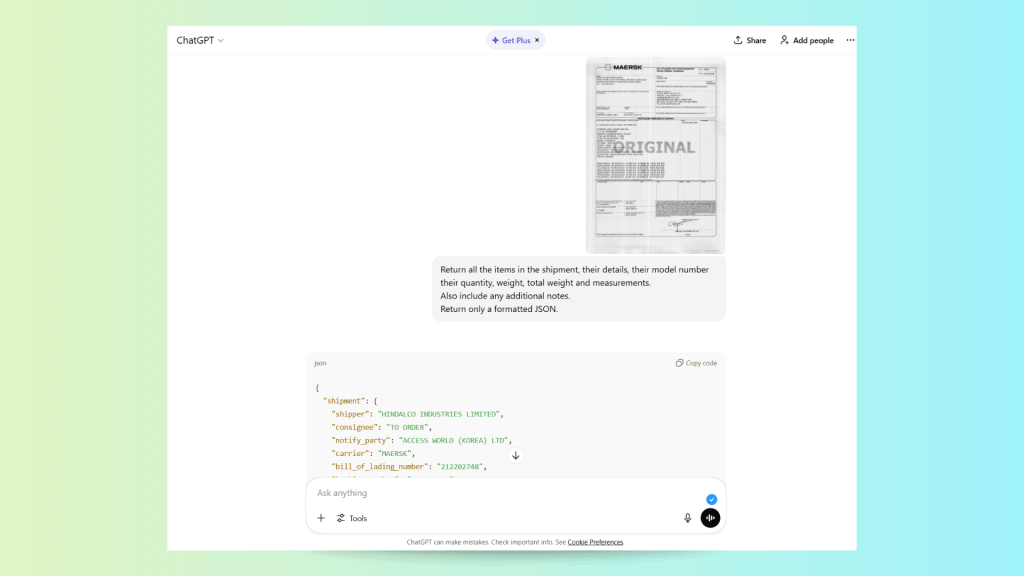

Chat GPT 5.2:

Let’s use the following prompt:

Return all the items in the shipment, their details, their model number their quantity, weight, total weight and measurements.

Also include any additional notes.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"shipment": {

"shipper": "HINDALCO INDUSTRIES LIMITED",

"consignee": "TO ORDER",

"notify_party": "ACCESS WORLD (KOREA) LTD",

"carrier": "MAERSK",

"bill_of_lading_number": "212202748",

"booking_number": "212202748",

"service_contract": "16978006",

"vessel": "GRACE BRIDGE",

"voyage_number": "131E",

"port_of_loading": "Jawaharlal Nehru, India",

"port_of_discharge": "Busan New Port, Korea",

"place_of_issue": "Mumbai",

"date_of_issue": "2021-08-07",

"shipped_on_board_date": "2021-08-07"

},

"items": [

{

"description": "Primary Aluminium Ingot P1020A",

"brand": "HINDALCO",

"hs_code": "760110",

"origin": "India",

"model_number": "P1020A",

"container_type": "20 DRY 8'6",

"bundles_per_container": 25,

"number_of_containers": 12,

"total_bundles": 300,

"total_pieces": 13200,

"containers": [

{

"container_number": "MRKU7866431",

"seal_number": "ML-IN4105171",

"bundles": 25,

"weight_kgs": 24843.0

},

{

"container_number": "MSKU7248191",

"seal_number": "ML-IN4105176",

"bundles": 25,

"weight_kgs": 24982.0

},

{

"container_number": "TCLU2563110",

"seal_number": "ML-IN4105162",

"bundles": 25,

"weight_kgs": 24624.0

},

{

"container_number": "MRKU7035620",

"seal_number": "ML-IN4105064",

"bundles": 25,

"weight_kgs": 24695.0

},

{

"container_number": "MSKU5184077",

"seal_number": "ML-IN4105082",

"bundles": 25,

"weight_kgs": 24545.0

},

{

"container_number": "PONU0013467",

"seal_number": "ML-IN4105123",

"bundles": 25,

"weight_kgs": 24519.0

},

{

"container_number": "FCIU4274611",

"seal_number": "ML-IN4105167",

"bundles": 25,

"weight_kgs": 24777.0

}

]

}

],

"weights": {

"gross_weight_kgs": 297022.0,

"net_weight_kgs": 297022.0,

"gross_weight_mt": 297.022,

"net_weight_mt": 297.022

},

"measurements": {

"containers": 12,

"container_size": "20 DRY 8'6",

"measurement_details": "Not explicitly stated on document"

},

"additional_notes": [

"Shippers load, count and seal",

"Freight prepaid",

"12 containers said to contain 300 bundles",

"Above particulars as declared by shipper, without responsibility of carrier",

"Original bill of lading - 1 of 3",

"Document marked ORIGINAL"

]

}

Although it does a good job of recognizing most of the text in the scan, it fails to organize the information properly and even introduces non-existent fields such as “model number” and “measurement_details” which it then flags as “not stated in the document”.

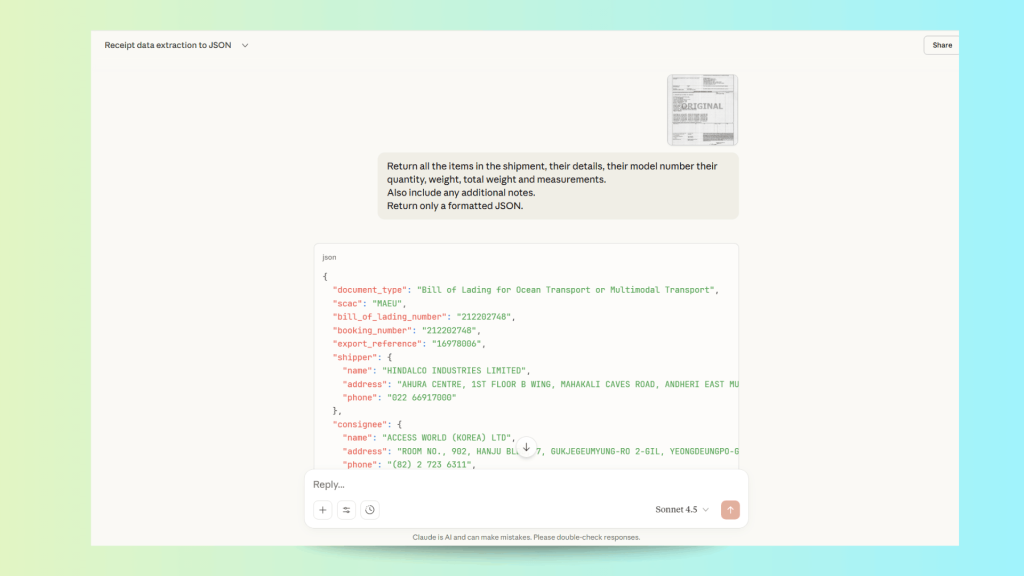

Claude Sonnet 4.5:

Let’s use the following prompt:

Return all the items in the shipment, their details, their model number their quantity, weight, total weight and measurements. Also include any additional notes. Return only a formatted JSON.This is the output:

The returned JSON:

{

"document_type": "Bill of Lading for Ocean Transport or Multimodal Transport",

"scac": "MAEU",

"bill_of_lading_number": "212202748",

"booking_number": "212202748",

"export_reference": "16978006",

"shipper": {

"name": "HINDALCO INDUSTRIES LIMITED",

"address": "AHURA CENTRE, 1ST FLOOR B WING, MAHAKALI CAVES ROAD, ANDHERI EAST MUMBAI, MAHARASHTRA INDIA 400093",

"phone": "022 66917000"

},

"consignee": {

"name": "ACCESS WORLD (KOREA) LTD",

"address": "ROOM NO., 902, HANJU BLDG, 7, GUKJEGEUMYUNG-RO 2-GIL, YEONGDEUNGPO-GU, SEOUL, KOREA 07327",

"phone": "(82) 2 723 6311",

"fax": "(82) 2 723 6314",

"email": "KR_CS@ACCESSWORLD.COM"

},

"vessel": {

"name": "GRACE BRIDGE",

"voyage_number": "131E"

},

"port_of_loading": "JAWAHARLAL NEHRU, INDIA",

"port_of_discharge": "Busan New Port, Korea",

"shipment_summary": {

"total_containers": 12,

"container_type": "20' CONTAINERS",

"total_bundles": 300,

"total_pieces": 13200,

"product": "PRIMARY ALUMINIUM INGOT P1020A",

"brand": "HINDALCO",

"hs_code": "760110",

"origin": "INDIA",

"gross_weight": "297.022 MT",

"net_weight": "297.022 MT",

"total_weight_kgs": 297022.0

},

"shipping_documents": {

"shipping_bill_number": "3467675",

"shipping_bill_date": "29.07.2021",

"invoice_number": "RFG/P/2022/590",

"invoice_date": "28.07.2021",

"freight_terms": "PREPAID"

},

"container_details": [

{

"container_number": "MRKU7686431",

"seal_number": "ML-IN4105171",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24843.0

},

{

"container_number": "MSKU7248191",

"seal_number": "ML-IN4105176",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24982.0

},

{

"container_number": "TCLU2563110",

"seal_number": "ML-IN4105162",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24624.0

},

{

"container_number": "MRKU7035620",

"seal_number": "ML-IN4105064",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24695.0

},

{

"container_number": "MSKU5184077",

"seal_number": "ML-IN4105082",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24545.0

},

{

"container_number": "PONU0013467",

"seal_number": "ML-IN4105123",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24519.0

},

{

"container_number": "FCIU4274611",

"seal_number": "ML-IN4105167",

"size": "20'",

"type": "DRY",

"bundles": 25,

"weight_kgs": 24777.0

}

],

"bill_of_lading_details": {

"number_of_original_bls": "1/THREE",

"date_of_issue": "2021-08-07",

"shipped_on_board_date": "2021-08-07"

},

"carrier": {

"name": "Maersk Line India Pvt Ltd",

"signatory": "Signatory for Carrier Maersk A/S"

},

"notes": [

"SHIPPERS LOAD, COUNT AND SEAL",

"12 containers said to contain 300 BUNDLES",

"Above particulars as declared by Shipper, but without responsibility of or representation by Carrier (see clause 14)",

"Place of Receipt: Applicable only when document used as Multimodal Transport B/L (see clause 1)",

"Place of Delivery: Applicable only when document used as Multimodal Transport B/L (see clause 1)",

"Onward inland routing (Not part of carriage as defined in clause 1. For account and risk of Merchant)",

"This transport document has one or more numbered pages"

],

"document_reference": "2020-Msk-A1 001468994"

}

It appears correct at first glance, but the “export_reference” field is incorrect, the “bill_of_lading_details” section does not exist, and the “signatory” field contains no meaningful data.

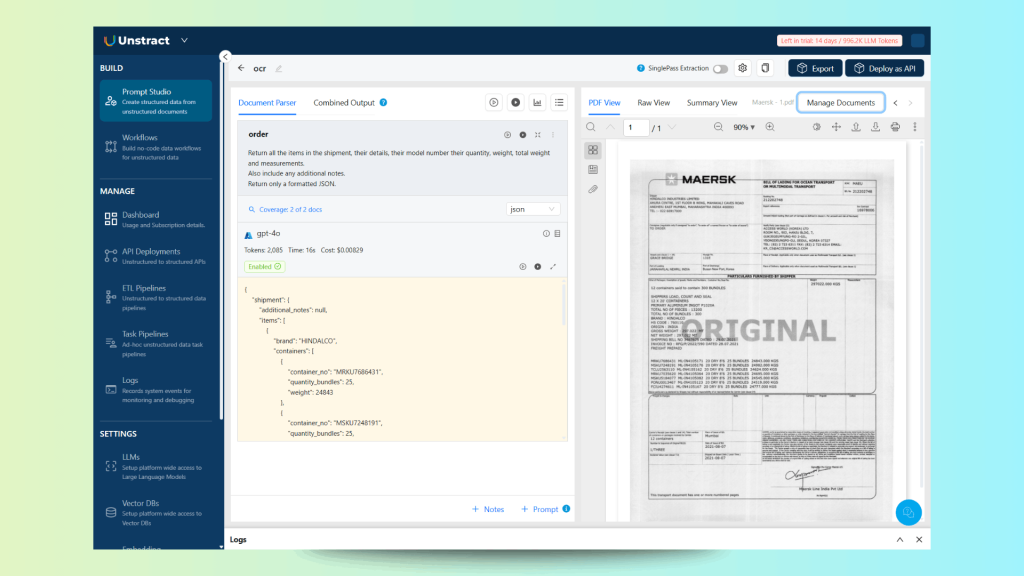

Unstract:

Create a Prompt Studio project, add the document, and use the following prompt:

Return all the items in the shipment, their details, their model number their quantity, weight, total weight and measurements. Also include any additional notes. Return only a formatted JSON.This is the output:

The returned JSON:

{

"shipment": {

"additional_notes": null,

"items": [

{

"brand": "HINDALCO",

"containers": [

{

"container_no": "MRKU7686431",

"quantity_bundles": 25,

"weight": 24843

},

{

"container_no": "MSKU7248191",

"quantity_bundles": 25,

"weight": 24982

},

{

"container_no": "TCLU2563110",

"quantity_bundles": 25,

"weight": 24624

},

{

"container_no": "MRKU7035620",

"quantity_bundles": 25,

"weight": 24695

},

{

"container_no": "MSKU5184077",

"quantity_bundles": 25,

"weight": 24545

},

{

"container_no": "PONU0013467",

"quantity_bundles": 25,

"weight": 24519

},

{

"container_no": "FCIU4274611",

"quantity_bundles": 25,

"weight": 24777

}

],

"description": "PRIMARY ALUMINIUM INGOT P1020A",

"gross_weight": 297022,

"hs_code": "760110",

"measurements": "12 × 20' containers",

"model_number": null,

"net_weight": 297022,

"origin": "INDIA",

"quantity_bundles": 300,

"quantity_pieces": 13200,

"total_weight": 297022

}

]

}

}

It accurately returns the required information and organizes the output in a clean, structured format, while avoiding the introduction of non-existent fields.

Document 3 – PDF Psychological Report

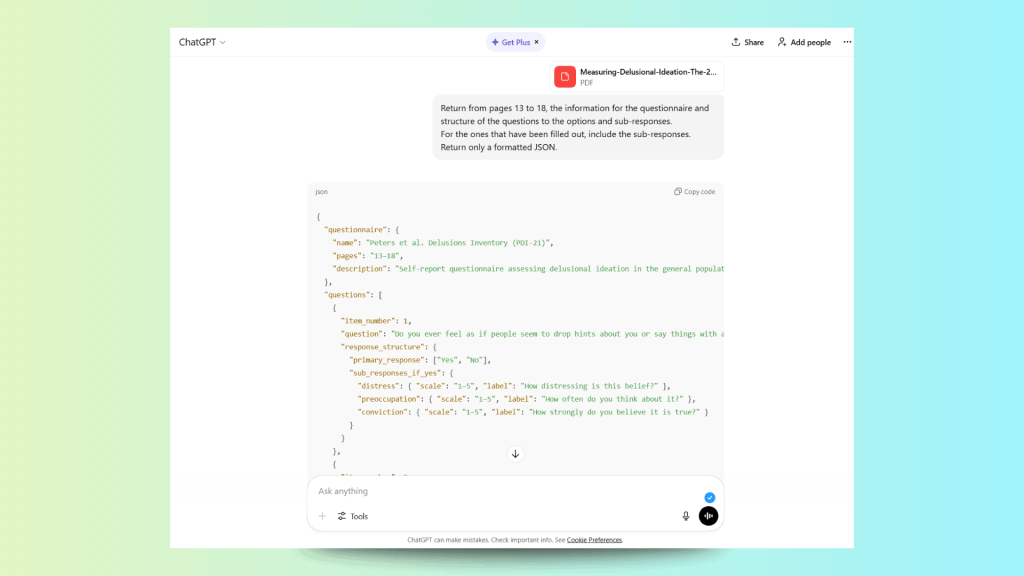

Chat GPT 5.2:

Let’s use the following prompt:

Return from pages 13 to 18, the information for the questionnaire and structure of the questions to the options and sub-responses.

For the ones that have been filled out, include the sub-responses.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"questionnaire": {

"name": "Peters et al. Delusions Inventory (PDI-21)",

"pages": "13-18",

"description": "Self-report questionnaire assessing delusional ideation in the general population. Each item is first answered Yes/No. If 'Yes', three additional dimensions are rated: Distress, Preoccupation, and Conviction."

},

"questions": [

{

"item_number": 1,

"question": "Do you ever feel as if people seem to drop hints about you or say things with a double meaning?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": {

"scale": "1-5",

"label": "How distressing is this belief?"

},

"preoccupation": {

"scale": "1-5",

"label": "How often do you think about it?"

},

"conviction": {

"scale": "1-5",

"label": "How strongly do you believe it is true?"

}

}

}

},

{

"item_number": 2,

"question": "Do you ever feel as if things in magazines or on TV were written especially for you?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 3,

"question": "Do you ever feel as if someone is deliberately trying to harm you?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 4,

"question": "Do you ever feel as if you are being persecuted in some way?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 5,

"question": "Do you ever feel as if there is a conspiracy against you?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 6,

"question": "Do you ever feel as if people are laughing at you behind your back?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 7,

"question": "Do you ever feel as if strangers are looking at you critically?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 8,

"question": "Do you ever feel as if people are deliberately acting to annoy you?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 9,

"question": "Do you ever feel as if you are being watched or monitored?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

},

{

"item_number": 10,

"question": "Do you ever feel as if your thoughts are being interfered with?",

"response_structure": {

"primary_response": ["Yes", "No"],

"sub_responses_if_yes": {

"distress": { "scale": "1-5" },

"preoccupation": { "scale": "1-5" },

"conviction": { "scale": "1-5" }

}

}

}

],

"response_scales": {

"distress": {

"range": "1-5",

"anchors": {

"1": "Not at all distressing",

"5": "Extremely distressing"

}

},

"preoccupation": {

"range": "1-5",

"anchors": {

"1": "Hardly ever think about it",

"5": "Think about it all the time"

}

},

"conviction": {

"range": "1-5",

"anchors": {

"1": "Do not believe it is true",

"5": "Believe it is absolutely true"

}

}

},

"notes": [

"Only questions endorsed with a 'Yes' receive sub-scale ratings.",

"Total PDI score can be calculated from number of endorsed items and summed sub-scale scores.",

"Pages 13-18 present the questionnaire items and scoring instructions."

]

}

It returns all possible options without considering the actual selected answers that was requested.

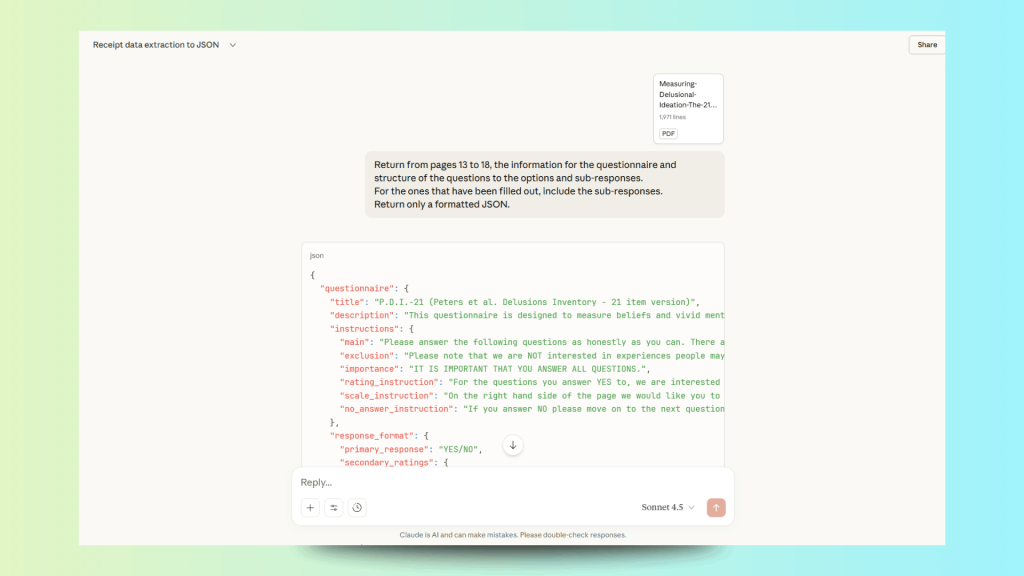

Claude Sonnet 4.5:

Let’s use the following prompt:

Return from pages 13 to 18, the information for the questionnaire and structure of the questions to the options and sub-responses.

For the ones that have been filled out, include the sub-responses.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"questionnaire": {

"title": "P.D.I.-21 (Peters et al. Delusions Inventory - 21 item version)",

"description": "This questionnaire is designed to measure beliefs and vivid mental experiences. We believe that they are much more common than has previously been supposed, and that most people have had some such experiences during their lives.",

"instructions": {

"main": "Please answer the following questions as honestly as you can. There are no right or wrong answers, and there are no trick questions.",

"exclusion": "Please note that we are NOT interested in experiences people may have had when under the influence of drugs.",

"importance": "IT IS IMPORTANT THAT YOU ANSWER ALL QUESTIONS.",

"rating_instruction": "For the questions you answer YES to, we are interested in: (a) how distressing these beliefs or experiences are (b) how often you think about them; and (c) how true you believe them to be.",

"scale_instruction": "On the right hand side of the page we would like you to circle the number which corresponds most closely to how distressing this belief is, how often you think about it, and how much you believe that it is true.",

"no_answer_instruction": "If you answer NO please move on to the next question."

},

"response_format": {

"primary_response": "YES/NO",

"secondary_ratings": {

"distress": {

"scale": "1-5",

"anchors": {

"1": "Not at all distressing",

"5": "Very distressing"

}

},

"frequency": {

"scale": "1-5",

"anchors": {

"1": "Hardly ever think about it",

"5": "Think about it all the time"

}

},

"conviction": {

"scale": "1-5",

"anchors": {

"1": "Don't believe it's true",

"5": "Believe it is absolutely true"

}

}

}

},

"questions": [

{

"number": 1,

"text": "Do you ever feel as if people seem to drop hints about you or say things with a double meaning?",

"response": "Not filled out",

"ratings": null

},

{

"number": 2,

"text": "Do you ever feel as if things in magazines or on TV were written especially for you?",

"response": "Not filled out",

"ratings": null

},

{

"number": 3,

"text": "Do you ever feel as if some people are not what they seem to be?",

"response": "Not filled out",

"ratings": null

},

{

"number": 4,

"text": "Do you ever feel as if you are being persecuted in some way?",

"response": "Not filled out",

"ratings": null

},

{

"number": 5,

"text": "Do you ever feel as if there is a conspiracy against you?",

"response": "Not filled out",

"ratings": null

},

{

"number": 6,

"text": "Do you ever feel as if you are, or destined to be someone very important?",

"response": "Not filled out",

"ratings": null

},

{

"number": 7,

"text": "Do you ever feel that you are a very special or unusual person?",

"response": "Not filled out",

"ratings": null

},

{

"number": 8,

"text": "Do you ever feel that you are especially close to God?",

"response": "Not filled out",

"ratings": null

},

{

"number": 9,

"text": "Do you ever think people can communicate telepathically?",

"response": "Not filled out",

"ratings": null

},

{

"number": 10,

"text": "Do you ever feel as if electrical devices such as computers can influence the way you think?",

"response": "Not filled out",

"ratings": null

},

{

"number": 11,

"text": "Do you ever feel as if you have been chosen by God in some way?",

"response": "Not filled out",

"ratings": null

},

{

"number": 12,

"text": "Do you believe in the power of witchcraft, voodoo or the occult?",

"response": "Not filled out",

"ratings": null

},

{

"number": 13,

"text": "Are you often worried that your partner may be unfaithful?",

"response": "Not filled out",

"ratings": null

},

{

"number": 14,

"text": "Do you ever feel that you have sinned more than the average person?",

"response": "Not filled out",

"ratings": null

},

{

"number": 15,

"text": "Do you ever feel that people look at you oddly because of your appearance?",

"response": "Not filled out",

"ratings": null

},

{

"number": 16,

"text": "Do you ever feel as if you had no thoughts in your head at all?",

"response": "Not filled out",

"ratings": null

},

{

"number": 17,

"text": "Do you ever feel as if the world is about to end?",

"response": "Not filled out",

"ratings": null

},

{

"number": 18,

"text": "Do your thoughts ever feel alien to you in some way?",

"response": "Not filled out",

"ratings": null

},

{

"number": 19,

"text": "Have your thoughts ever been so vivid that you were worried other people would hear them?",

"response": "Not filled out",

"ratings": null

},

{

"number": 20,

"text": "Do you ever feel as if your own thoughts were being echoed back to you?",

"response": "Not filled out",

"ratings": null

},

{

"number": 21,

"text": "Do you ever feel as if you are a robot or zombie without a will of your own?",

"response": "Not filled out",

"ratings": null

}

],

"scoring": {

"yes_no_score": {

"range": "0-21",

"method": "Assign 1 to each YES answer and 0 to each NO answer, then sum all 21 items"

},

"dimension_scores": {

"distress": {

"range": "0-105",

"method": "Sum ratings on distress dimension for all 21 items (0 for NO answers, 1-5 for YES answers)"

},

"preoccupation": {

"range": "0-105",

"method": "Sum ratings on frequency dimension for all 21 items (0 for NO answers, 1-5 for YES answers)"

},

"conviction": {

"range": "0-105",

"method": "Sum ratings on conviction dimension for all 21 items (0 for NO answers, 1-5 for YES answers)"

}

},

"total_pdi": {

"range": "0-336",

"method": "Sum of yes/no scores + distress + preoccupation + conviction"

}

}

}

}

It provides a lot of information about the questionnaires and questions, but completely ignores or misses the responses that were filled in.

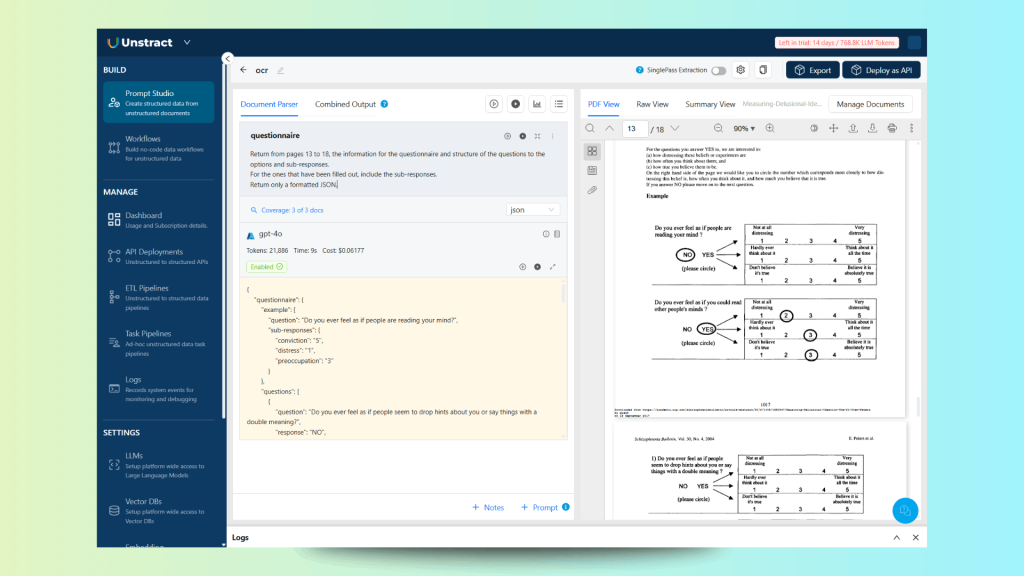

Unstract:

Create a Prompt Studio project, add the document, and use the following prompt:

Return from pages 13 to 18, the information for the questionnaire and structure of the questions to the options and sub-responses.

For the ones that have been filled out, include the sub-responses.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"questionnaire": {

"example": {

"question": "Do you ever feel as if people are reading your mind?",

"sub-responses": {

"conviction": "5",

"distress": "1",

"preoccupation": "3"

}

},

"questions": [

{

"question": "Do you ever feel as if people seem to drop hints about you or say things with a double meaning?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if things in magazines or on TV were written especially for you?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if some people are not what they seem to be?",

"response": "YES",

"sub-responses": {

"conviction": "3",

"distress": "3",

"preoccupation": "3"

}

},

{

"question": "Do you ever feel as if you are being persecuted in some way?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if there is a conspiracy against you?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if you are, or destined to be someone very important?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel that you are a very special or unusual person?",

"response": "YES",

"sub-responses": {

"conviction": "3",

"distress": "2",

"preoccupation": "3"

}

},

{

"question": "Do you ever feel that you are especially close to God?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever think people can communicate telepathically?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if electrical devices such as computers can influence the way you think?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if you have been chosen by God in some way?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you believe in the power of witchcraft, voodoo or the occult?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Are you often worried that your partner may be unfaithful?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel that you have sinned more than the average person?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel that people look at you oddly because of your appearance?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if you had no thoughts in your head at all?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if the world is about to end?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do your thoughts ever feel alien to you in some way?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Have your thoughts ever been so vivid that you were worried other people would hear them?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if your own thoughts were being echoed back to you?",

"response": "NO",

"sub-responses": {}

},

{

"question": "Do you ever feel as if you are a robot or zombie without a will of your own?",

"response": "NO",

"sub-responses": {}

}

]

}

}

As you can see, it correctly identifies the responses marked in the examples and only attaches sub-responses when an answer is provided.

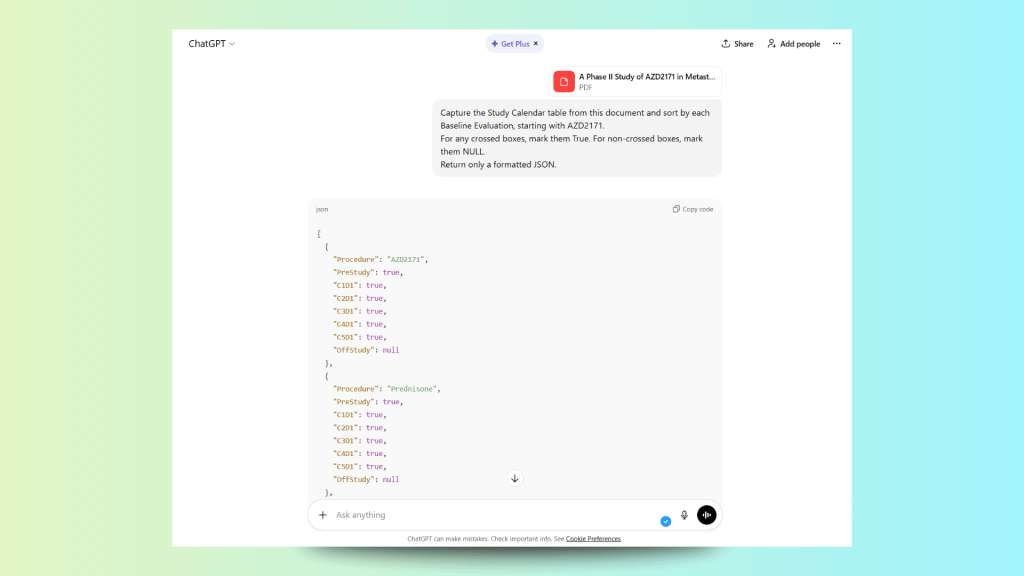

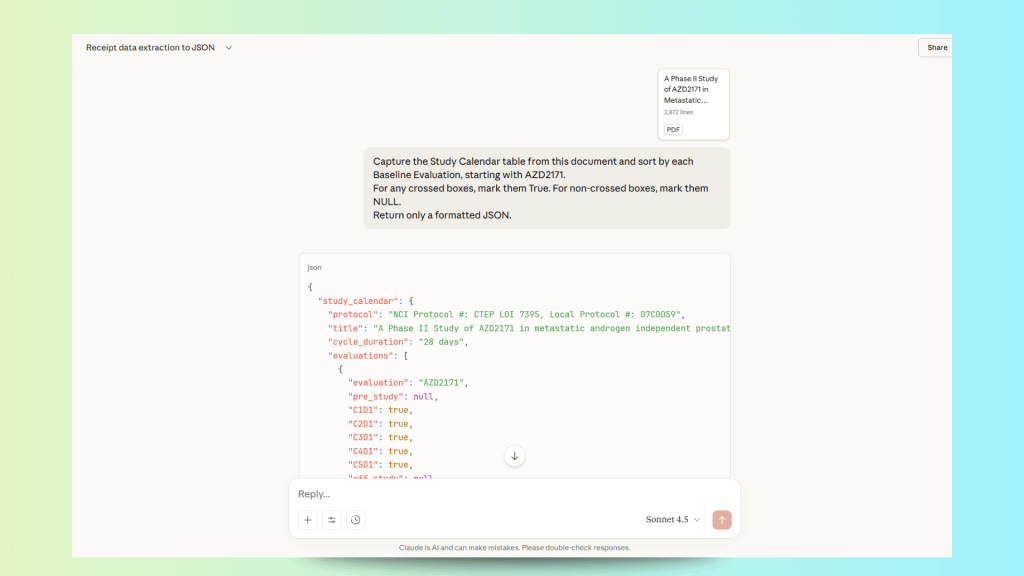

Document 4 – PDF of a Medical Document

Chat GPT 5.2:

Let’s use the following prompt:

Capture the Study Calendar table from this document and sort by each Baseline Evaluation, starting with AZD2171.

For any crossed boxes, mark them True. For non-crossed boxes, mark them NULL.

Return only a formatted JSON.This is the output:

The returned JSON:

[

{

"Procedure": "AZD2171",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": null

},

{

"Procedure": "Prednisone",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": null

},

{

"Procedure": "Informed consent",

"PreStudy": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "Demographics",

"PreStudy": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "Medical history",

"PreStudy": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "Med List",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "Physical exam",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "Vital signs",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "Height",

"PreStudy": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "Weight",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "Performance status",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "CBC w/diff, plts, PSA",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "Serum chemistry",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

},

{

"Procedure": "Serum PAP",

"PreStudy": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "PKs",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": null

},

{

"Procedure": "Serum Troponin T",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": null

},

{

"Procedure": "TSH, free T4",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "EKG",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": null

},

{

"Procedure": "Urine dipstick for protein",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": null

},

{

"Procedure": "Imaging studies",

"PreStudy": true,

"C1D1": null,

"C2D1": true,

"C3D1": null,

"C4D1": true,

"C5D1": null,

"OffStudy": null

},

{

"Procedure": "Biomarkers",

"PreStudy": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"OffStudy": true

}

]

The entry for AZD2171 is incorrect, and the prestudy information is not accurate. Prednisone is completely wrong.

Claude Sonnet 4.5:

Let’s use the following prompt:

Capture the Study Calendar table from this document and sort by each Baseline Evaluation, starting with AZD2171.

For any crossed boxes, mark them True. For non-crossed boxes, mark them NULL.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"study_calendar": {

"protocol": "NCI Protocol #: CTEP LOI 7395, Local Protocol #: 07C0059",

"title": "A Phase II Study of AZD2171 in metastatic androgen independent prostate cancer",

"cycle_duration": "28 days",

"evaluations": [

{

"evaluation": "AZD2171",

"pre_study": null,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": null,

"notes": "Dose as assigned. Administered orally, daily at a fixed dose. Please keep a pill diary (Appendix B). Cycles = 28 days."

},

{

"evaluation": "Prednisone",

"pre_study": null,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": null,

"notes": "Dose as assigned. Administered orally, daily at a fixed dose. Please keep a pill diary (Appendix B). Cycles = 28 days."

},

{

"evaluation": "Informed consent",

"pre_study": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"off_study": null,

"notes": null

},

{

"evaluation": "Demographics",

"pre_study": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"off_study": null,

"notes": null

},

{

"evaluation": "Medical history",

"pre_study": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"off_study": null,

"notes": null

},

{

"evaluation": "Med List",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

},

{

"evaluation": "Physical exam",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

},

{

"evaluation": "Vital signs",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": "Blood pressure will be measured at each clinic visit. In addition, patients will be asked to measure their blood pressure twice daily at home, as follows: a resting morning blood pressure and an evening blood pressure. Any abnormal blood pressure measurements should be reported to the research nurse within 24 hours. Furthermore, the patient will be asked to record blood pressure readings in a blood pressure diary (Appendix C)."

},

{

"evaluation": "Height",

"pre_study": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"off_study": null,

"notes": null

},

{

"evaluation": "Weight",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

},

{

"evaluation": "Performance status",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

},

{

"evaluation": "CBC w/diff, plts, PSA",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

},

{

"evaluation": "Serum chemistry",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": "Albumin, alkaline phosphatase, total bilirubin, bicarbonate, BUN, calcium, chloride, creatinine, glucose, LDH, phosphorus, potassium, total protein, SGOT[AST], SGPT[ALT], sodium."

},

{

"evaluation": "Serum PAP",

"pre_study": true,

"C1D1": null,

"C2D1": null,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"off_study": null,

"notes": null

},

{

"evaluation": "PKs",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": "See Section 8.3 for details as to pharmacokinetic timepoints to be drawn Pre-study. At the beginning of each cycle, a trough level will be drawn at each clinic visit prior to ingesting the first dose of drug for that cycle."

},

{

"evaluation": "Serum Troponin T",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": "Serum Troponin is to be obtained pre-study and once a cycle thereafter."

},

{

"evaluation": "TSH, free T4",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": null,

"C4D1": null,

"C5D1": null,

"off_study": null,

"notes": "TSH and free T4 is to be obtained pre-study, once a cycle for the first two cycles, then only if clinically indicated."

},

{

"evaluation": "EKG",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

},

{

"evaluation": "Urine dipstick for protein",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": "If patient has significant proteinuria, obtain a 24-hour urine for protein and creatinine clearance."

},

{

"evaluation": "Imaging studies",

"pre_study": true,

"C1D1": null,

"C2D1": true,

"C3D1": null,

"C4D1": true,

"C5D1": null,

"off_study": true,

"notes": "CT, Bone scan, Echo, DCE-MRI and/or 18 FDG-PET. See Section 8.2.1.3 for the frequency of Imaging studies."

},

{

"evaluation": "Biomarkers",

"pre_study": true,

"C1D1": true,

"C2D1": true,

"C3D1": true,

"C4D1": true,

"C5D1": true,

"off_study": true,

"notes": null

}

],

"general_notes": "Baseline evaluations are to be conducted within 16 days prior to administration of protocol therapy. Scans and x-rays must be done 4 weeks prior to the start of therapy. In the event that the patient's condition is deteriorating, laboratory evaluations should be repeated within 48 hours prior to initiation of the next cycle of therapy. All patients will receive AZD2171 20 mg po daily and prednisone 10mg po daily. AZD-2171 dose reductions will be made in 5 mg increments; the lowest dose level is dose level -2, or 10 mg. All baseline and follow up evaluations can be done on the last week of the prior cycle."

}

}

Although it appears very accurate and correct, “PKs” is incorrect, as is “Serum Troponin T,” where “off study” is incorrectly marked as true.

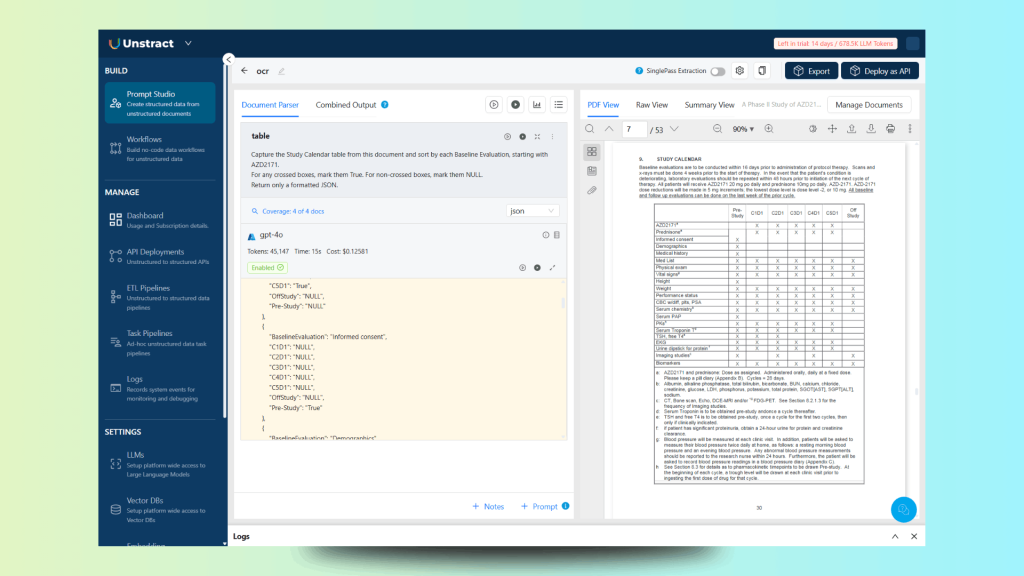

Unstract:

Create a Prompt Studio project, add the document, and use the following prompt:

Capture the Study Calendar table from this document and sort by each Baseline Evaluation, starting with AZD2171.

For any crossed boxes, mark them True. For non-crossed boxes, mark them NULL.

Return only a formatted JSON.This is the output:

The returned JSON:

{

"StudyCalendar": [

{

"BaselineEvaluation": "AZD2171",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "NULL",

"Pre-Study": "NULL"

},

{

"BaselineEvaluation": "Prednisone",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "NULL",

"Pre-Study": "NULL"

},

{

"BaselineEvaluation": "Informed consent",

"C1D1": "NULL",

"C2D1": "NULL",

"C3D1": "NULL",

"C4D1": "NULL",

"C5D1": "NULL",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Demographics",

"C1D1": "NULL",

"C2D1": "NULL",

"C3D1": "NULL",

"C4D1": "NULL",

"C5D1": "NULL",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Medical history",

"C1D1": "NULL",

"C2D1": "NULL",

"C3D1": "NULL",

"C4D1": "NULL",

"C5D1": "NULL",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Med List",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Physical exam",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Vital signs9",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Height",

"C1D1": "NULL",

"C2D1": "NULL",

"C3D1": "NULL",

"C4D1": "NULL",

"C5D1": "NULL",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Weight",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Performance status",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "CBC w/diff, plts, PSA",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Serum chemistryb",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Serum PAP",

"C1D1": "NULL",

"C2D1": "NULL",

"C3D1": "NULL",

"C4D1": "NULL",

"C5D1": "NULL",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "PKsh",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Serum Troponin Td",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "TSH, free T4e",

"C1D1": "True",

"C2D1": "True",

"C3D1": "NULL",

"C4D1": "NULL",

"C5D1": "NULL",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "EKG",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Urine dipstick for protein",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "NULL",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Imaging studies",

"C1D1": "NULL",

"C2D1": "True",

"C3D1": "NULL",

"C4D1": "True",

"C5D1": "NULL",

"OffStudy": "True",

"Pre-Study": "True"

},

{

"BaselineEvaluation": "Biomarkers",

"C1D1": "True",

"C2D1": "True",

"C3D1": "True",

"C4D1": "True",

"C5D1": "True",

"OffStudy": "True",

"Pre-Study": "True"

}

]

}

As you can see, all checked marks are correctly identified and associated with the appropriate label in each row.

Deep Dive: LLMWhisperer’s Unique Approach

LLMWhisperer bridges the gap between traditional OCR and LLM-based document understanding. Unlike direct LLM parsing, which often fails on complex layouts, LLMWhisperer transforms documents into LLM-ready formats that preserve relationships, maintain context, and enable accurate extraction.

Its core philosophy is simple: LLMs excel at understanding structured text, but struggle with raw visual layouts, so LLMWhisperer handles layout interpretation before the LLM ever sees the document.

Methodology

LLMWhisperer uses a pre-processing pipeline designed to maximize extraction accuracy and reliability:

Traditional OCR Extraction

- Uses proven OCR engines to extract all text content reliably

- Eliminates errors that occur when LLMs read documents directly

Layout Analysis

- Identifies document structures: tables, multi-column layouts, nested elements

- Preserves spatial relationships and logical flow

Document Format Optimization

- Tables are converted to structured data with clear relationships

- Multi-column layouts are separated and labelled for clarity

- Visual elements (checkboxes, markers) are translated into explicit, machine-readable formats

By transforming documents before they reach the LLM, LLMWhisperer separates layout interpretation from text understanding, allowing each technology to do what it does best.

Technical Advantages

This hybrid architecture combines OCR’s extraction reliability with LLM’s contextual understanding, enabling accurate processing of documents that challenge both traditional OCR and direct LLM parsing:

- Improved text extraction accuracy: OCR handles the initial extraction, reducing recognition errors

- Better handling of complex layouts: Specialized layout analysis preserves table relationships and multi-column flows better than direct AI OCR

- Enhanced reliability: Deterministic pre-processing ensures consistent output; only the final structuring step uses LLMs, minimizing probabilistic variability

Business Benefits

This approach ensures that LLMWhisperer not only improves document understanding but also delivers operational efficiency, reliability, and cost-effective scalability, addressing the limitations of both traditional OCR and direct AI parsing:

- Reduced hallucinations: LLMs process pre-structured, clearly formatted text, minimizing invented information

- Cost efficiency: OCR handles visual processing cheaply, while LLMs process only smaller, structured outputs, reducing token usage and API costs

- Scalability: Parallel OCR pre-processing and smaller LLM inputs enable enterprise-scale processing

- Higher accuracy rates: Combines reliable extraction with intelligent structuring

- Enterprise-ready reliability: Deterministic pipeline, robust error handling, and auditability make LLMWhisperer suitable for mission-critical applications

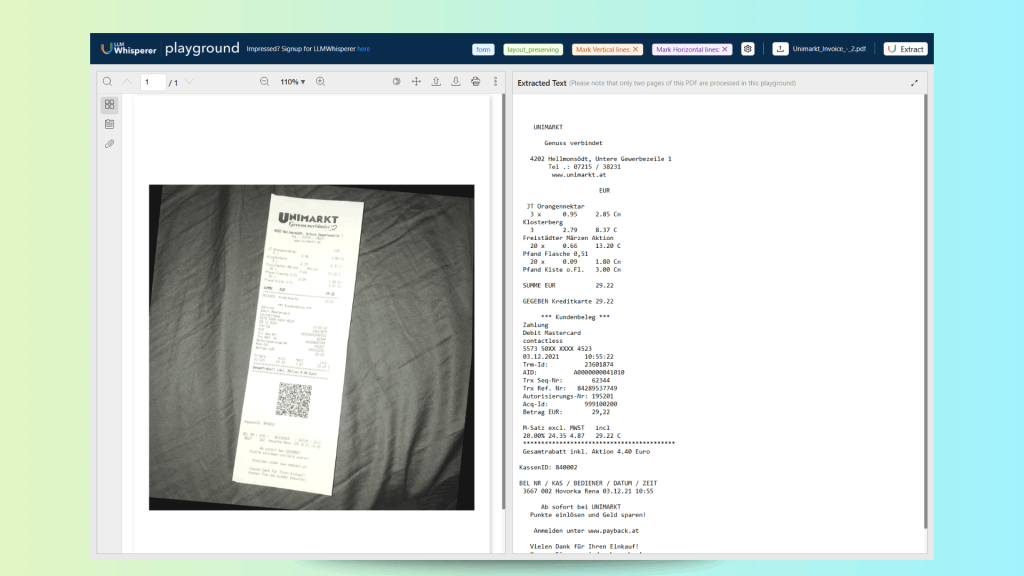

Visual Demonstrations

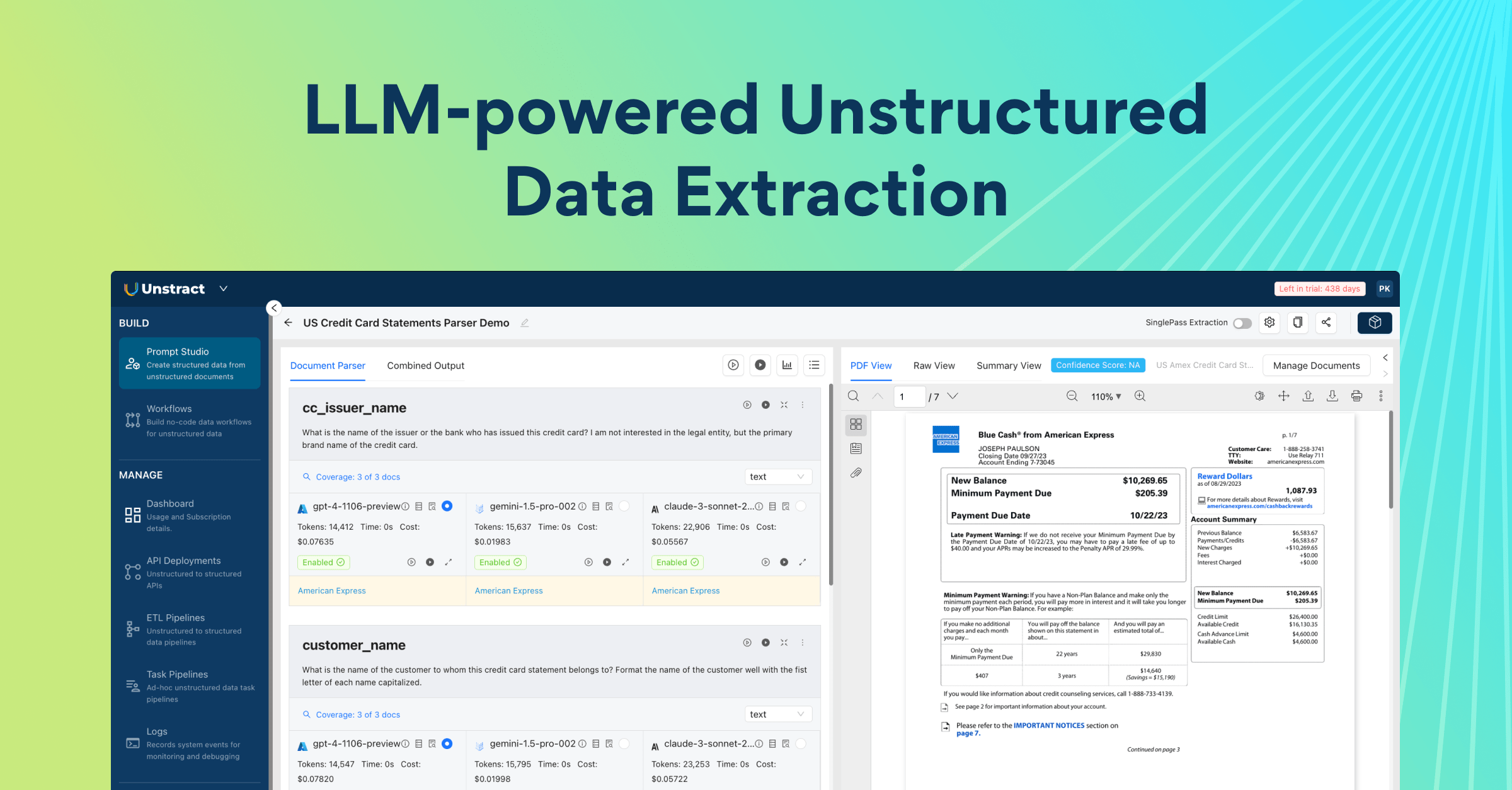

Let’s see LLMWhisperer in action. You can try it yourself in the LLMWhisperer playground.

First, let’s demonstrate its OCR capabilities using a skewed photo of a receipt:

Produces the following output:

As you can see, it not only fully recognizes all the text and values but also preserves the original layout structure.

Next, let’s see how it handles a PDF of an SEC document:

Focusing on the table on page 33, we obtain the following output:

Here we can see how powerful the layout extraction is, as it preserves the entire table structure, allowing the full context to be used in the pipeline.

Recommendations, Enterprise Considerations, and Future Outlook

For most document processing needs, traditional OCR tools remain the clear choice. Tools like Tesseract, PaddleOCR, Azure Document Intelligence, AWS Textract, and Google Document AI excel at speed, accuracy, and cost-efficiency, processing thousands of pages per hour at pennies per page. They are well-tested in enterprise environments and optimized over decades for reliability.

Best use cases for traditional OCR:

- Standard business documents: invoices, forms, reports, contracts with consistent formatting

- High-volume processing needs

- Scenarios requiring predictable, deterministic outputs

Key advantages:

- High speed and throughput

- Low cost per page ($0.001–$0.01)

- Reliable and consistent extraction

- Proven enterprise integrations and auditability

When LLMs Can Help

LLMs can outperform traditional OCR in specific edge cases. They are particularly useful for low-quality or noisy scans, recognizing handwritten text, handling complex layouts such as nested tables or multi-column formats, and processing documents that require contextual understanding.

However, AI-based OCR comes with significant trade-offs. Costs per page are 10–100 times higher than traditional OCR, processing times are slower (minutes per document versus seconds), and outputs can be inconsistent or include hallucinated information.

The recommended approach is to use LLMs strategically. Combine them with traditional OCR in a hybrid workflow, such as LLMWhisperer, where OCR handles raw text extraction first. LLMs can then focus on interpretation, structuring, and extracting relationships, leveraging their intelligence only where it adds value.

Key Enterprise Considerations

Document Volume: High-volume workflows generally favor traditional OCR, which can process thousands of pages quickly and reliably. LLMs are better suited for small batches of complex documents where their language understanding adds value.

Accuracy Requirements: Mission-critical applications demand deterministic outputs. Traditional OCR provides reliable extraction, while LLMs can produce plausible but incorrect results, making them less predictable for high-stakes use cases.

Cost Management: OCR costs just pennies per page, whereas LLMs can cost dollars per page. Enterprises should consider the total cost of ownership (TCO), including infrastructure, maintenance, and API usage, when evaluating options.

Compliance and Audit: Combining OCR with human validation remains the gold standard for regulatory compliance. LLM outputs are more difficult to trace and validate, which can complicate audits.

Scalability: OCR scales easily, running on-premises or in the cloud. LLMs face API rate limits, higher latency, and infrastructure dependencies that can hinder large-scale deployment.

System Integration: OCR tools offer mature APIs, SDKs, and pre-built connectors for enterprise platforms. LLM APIs evolve rapidly, which can introduce breaking changes and require ongoing maintenance.

Data Security and Privacy: OCR supports deployment on-premises, in private clouds, or encrypted public clouds, giving enterprises control over sensitive data. LLM APIs typically send documents to third-party services, raising potential compliance and privacy concerns.

Total Cost of Ownership (TCO) Considerations

Processing costs differ dramatically. Traditional OCR costs just $0.001–$0.01 per page, while LLM-based OCR can range from $0.10 to $5.00 per page, depending on document complexity.

At enterprise scale, these differences compound. Processing millions of pages with LLMs can cost between $100,000 and $5,000,000, whereas OCR processing for the same volume may only cost $1,000–$10,000.

Infrastructure requirements also vary. OCR runs predictably on standard servers or cloud environments. LLMs, in contrast, depend on API access and reliable network connectivity, which can introduce bottlenecks.

Maintenance and support are additional considerations. OCR technology is mature, well-documented, and supported, while LLM solutions require ongoing adjustments, monitoring, and updates to maintain accuracy and reliability.

Benchmarking and Continuous Improvement

Start by selecting a representative sample of documents, including edge cases that may challenge your extraction pipeline.

Compare the extracted results against a ground truth dataset, evaluating key metrics such as accuracy (correct fields), completeness (all relevant fields extracted), reliability (consistency across multiple runs), speed (processing time per document), and cost (total per-document cost).

Use these insights to identify failure patterns, refine your pipelines, and re-test iteratively. Continuous benchmarking ensures your document processing solution remains accurate, efficient, and scalable.

OCR Future Outlook

Traditional OCR continues to evolve, incorporating deep learning to handle challenging documents, improving table extraction and multi-column layouts, and offering better support for handwritten text and non-Latin scripts. Incremental accuracy improvements mean top OCR tools now achieve 99%+ accuracy on standard documents.

AI-based OCR is also advancing. Vision-language models may improve visual understanding, and handling of complex layouts or low-quality documents could get better. Cost reductions are possible but unlikely to match OCR-level economics, and hallucinations may persist due to the probabilistic nature of LLMs.

Hybrid approaches are emerging as the most practical solution. By combining OCR extraction with LLM interpretation, like LLMWhisperer, enterprises can leverage OCR for speed and reliability while using LLMs for intelligence and context. The future likely favours integrated pipelines rather than a full replacement of one technology with the other.

Enterprise takeaways: Choose the right tool for the task, OCR for standard, high-volume documents and LLMs for complex edge cases. Hybrid solutions provide flexibility, accuracy, and scalability. Always prioritize auditability, cost-effectiveness, and reliability for mission-critical applications.

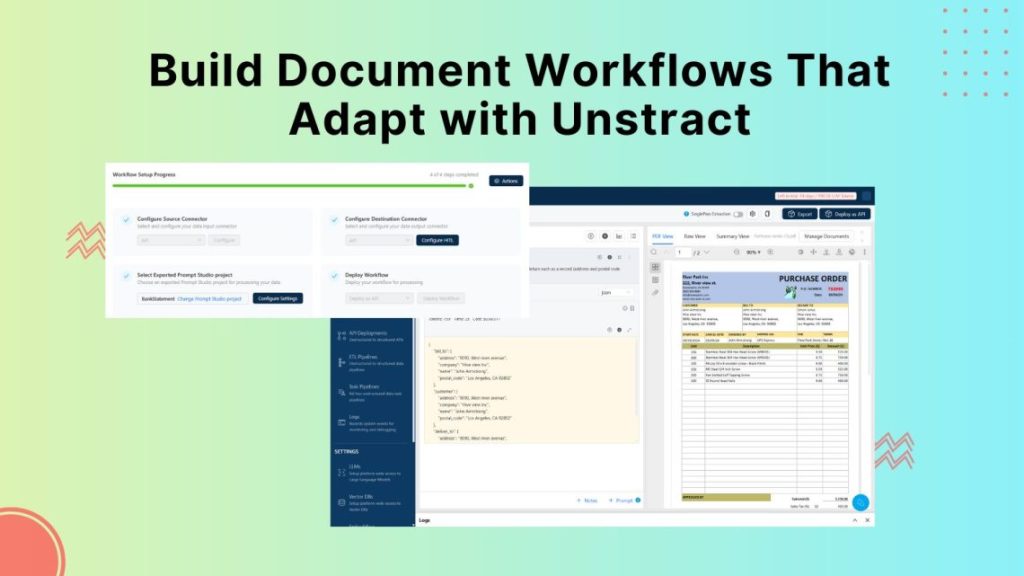

Build AI-Ready Document Extraction: OCR Solutions for LLM Workflows

Our research shows that there is no one-size-fits-all solution for OCR. The best tool depends on your document types, processing volume, accuracy requirements, and budget. For most enterprise applications, traditional OCR remains the fastest, most reliable, and cost-effective option, delivering accurate extraction at a fraction of the cost of LLMs.

Standard business documents, such as invoices, forms, contracts, and reports, are best handled by OCR tools like Tesseract, PaddleOCR, or cloud services such as AWS Textract and Azure Document Intelligence. These tools excel at high-volume processing, predictable outputs, and auditability.

LLMs are useful in specific edge cases, such as low-quality scans, handwritten text, or complex nested structures where OCR may struggle. However, they come with trade-offs: higher cost, slower processing, and potential hallucinations or inconsistent results.

The most effective strategy is a hybrid approach. Use OCR for initial extraction to ensure speed and accuracy, and apply LLMs for interpretation, structuring, or complex layout understanding. Tools like LLMWhisperer and Unstract demonstrate that combining OCR and LLM capabilities yields better extraction quality while avoiding the pitfalls of AI OCR.

Enterprise best practices include benchmarking on representative documents, measuring accuracy, cost, and speed, and identifying cases where OCR alone falls short. LLMs should be used strategically, not as a primary OCR solution.

In summary, for most document processing tasks, OCR provides reliability and scalability, while LLMs add intelligence for complex scenarios. A hybrid workflow delivers the best balance of speed, accuracy, and contextual understanding. Try LLMWhisperer in Unstract to see how a hybrid approach can improve extraction quality on your documents.