This is a companion article to the YouTube video where you can see a live coding session in which we extract raw text and structure data from a PDF form that has checkboxes and radiobuttons.

One challenge when it comes to processing PDF forms is the need to extract and interpret PDF form elements like checkboxes and radio buttons. Without this, automating business processes that involve these forms just won’t be possible. And in the wild, these PDF forms can either be native PDFs or just scans of paper documents. Or worse, smartphone-clicked images of paper forms. In this article, we’ll look at how to both extract and interpret PDF documents that contain forms in native text or scanned image formats.

Before you run the project

The source code for the project can be found here on Github. To successfully run the extraction script, you’ll need 2 different API keys. One for LLMWhisperer and the other for OpenAI APIs. Please be sure to read the Github project’s README to fully understand OS and other dependency requirements. You can sign up for LLMWhisperer, get your API key, and process up to 100 pages per day free of charge.

Our approach to PDF checkbox extraction

Because these documents can come in a wide variety of formats and structures, we will use a Large Language Model in order to interpret and structure the contents of those PDF documents. However, before that, we’ll need to extract raw text from the PDF (irrespective of whether it’s native text or scanned).

If you carefully think about it, the system that extracts raw text from the PDF needs to both detect and render PDF form elements like checkboxes and radiobuttons in a way that LLMs can understand. In this example, we’ll use LLMWhisperer to extract PDF raw text representing checkboxes and radiobuttons. You can use LLMWhisperer completely free for processing up to 100 pages per day. As for structuring the output from LLMWhisperer, we’ll use GPT3.5-Turbo and we’ll use Langchain and Pydantic to help make our job easy.

Input and expected output

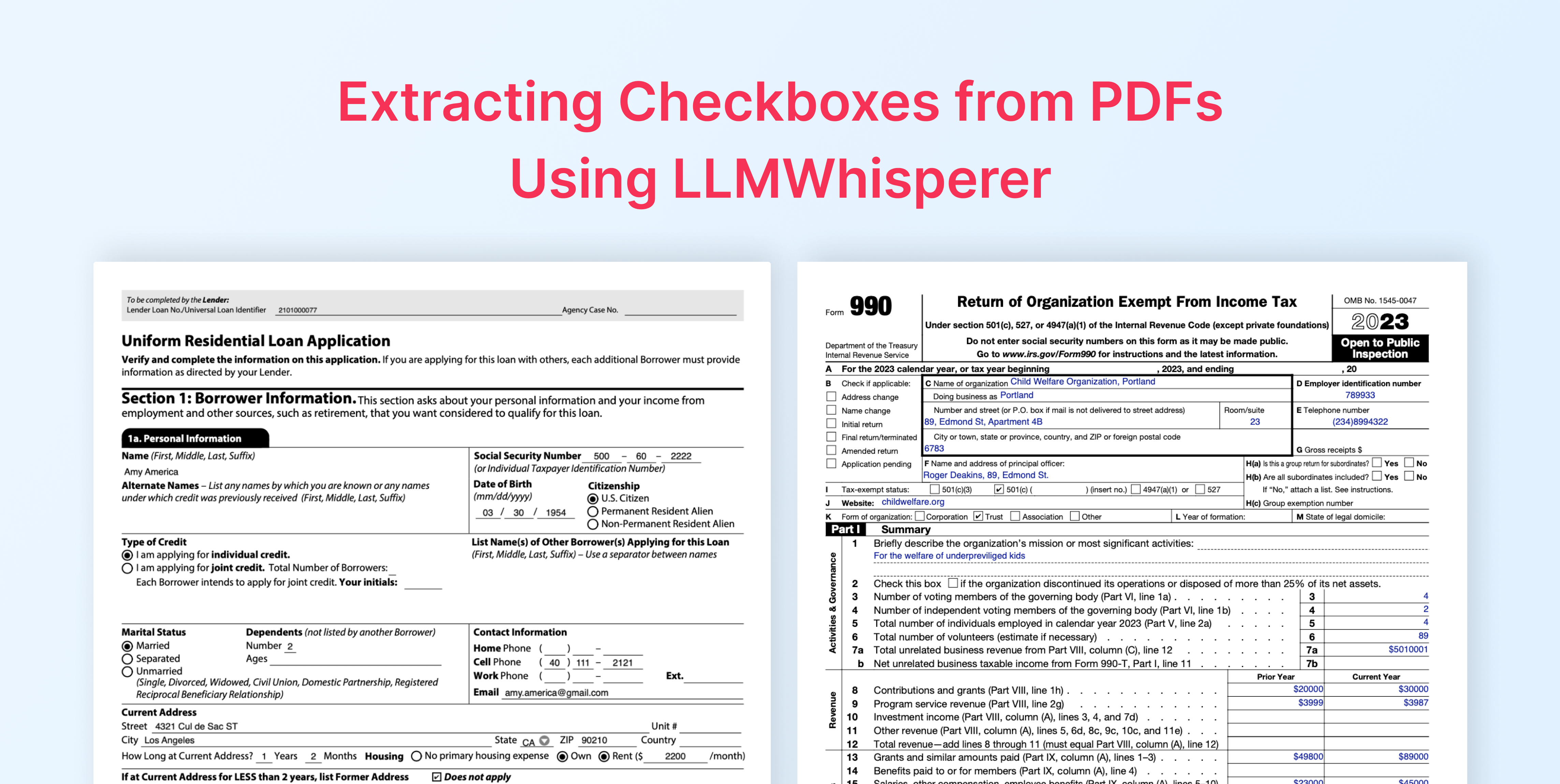

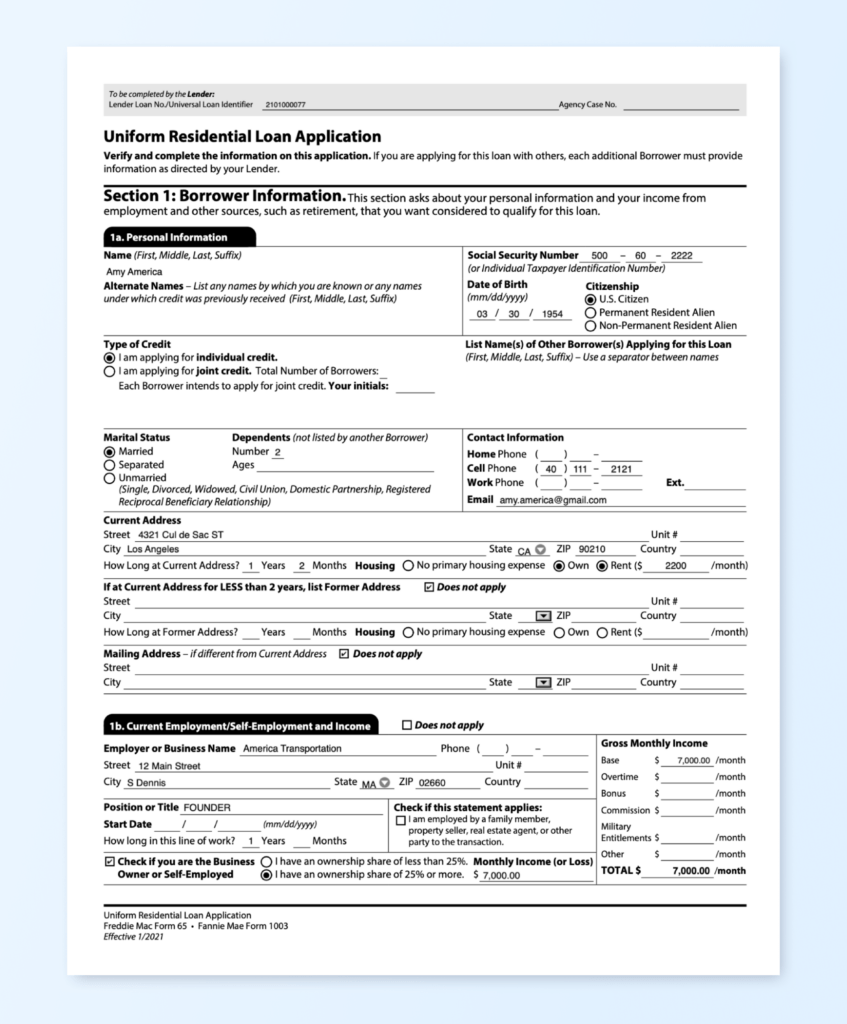

Let’s take a look at the input document and what we expect to see as output.

The PDF document

We want to extract structure information from this 1003 form, but in this exercise, we’ll only be processing the first page.

Extracted raw text via LLMWhisperer

Let’s look at the output from LLMWhisperer

To be completed by the Lender:

Lender Loan No./Universal Loan Identifier 2101000077 Agency Case No.

Uniform Residential Loan Application

Verify and complete the information on this application. If you are applying for this loan with others, each additional Borrower must provide

information as directed by your Lender.

Section 1: Borrower Information. This section asks about your personal information and your income from

employment and other sources, such as retirement, that you want considered to qualify for this loan.

1a. Personal Information

Name (First, Middle, Last, Suffix) Social Security Number 500 - 60 2222

Amy America (or Individual Taxpayer Identification Number)

Alternate Names - List any names by which you are known or any names Date of Birth Citizenship

under which credit was previously received (First, Middle, Last, Suffix) (mm/dd/yyyy) [X] U.S. Citizen

03 30 1954 [ ] Permanent Resident Alien

[ ] Non-Permanent Resident Alien

Type of Credit List Name(s) of Other Borrower(s) Applying for this Loan

[X] I am applying for individual credit. (First, Middle, Last, Suffix) - Use a separator between names

[ ] )I am applying for joint credit. Total Number of Borrowers:

Each Borrower intends to apply for joint credit. Your initials:

Marital Status Dependents (not listed by another Borrower) Contact Information

[X] Married Number 2 Home Phone ( 1

[ ] Separated Ages Cell Phone ( 408 ) 111 - 2121

[ ] Unmarried Work Phone ( ) Ext.

(Single, Divorced, Widowed, Civil Union, Domestic Partnership, Registered

Reciprocal Beneficiary Relationship) Email amy.america@gmail.com

Current Address

Street 4321 Cul de Sac ST Unit #

City Los Angeles State CA [X] ZIP 90210 Country

How Long at Current Address? 10 Years 2 Months Housing [ ] No primary housing expense [X] Own [X] Rent ($ 2200 /month)

If at Current Address for LESS than 2 years, list Former Address [X] Does not apply

Street Unit #

City State [X] ZIP Country

How Long at Former Address? Years Months Housing [ ] )No primary housing expense [ ] Own [ ] Rent ($ /month)

Mailing Address - if different from Current Address [X] Does not apply

Street Unit #

City State [X] ZIP Country

1b. Current Employment/Self-Employment and Income [ ] Does not apply

Gross Monthly Income

Employer or Business Name America Transportation Phone ( -

Unit # Base $ 7,000.00 /month

Street 12 Main Street

Overtime $ /month

City S Dennis State MA ZIP 02660 Country

Bonus $ /month

Position or Title FOUNDER Check if this statement applies: Commission $ /month

[ ] I am employed by a family member,

Start Date 1 / (mm/dd/yyyy)

property seller, real estate agent, or other Military

Entitlements $ /month

How long in this line of work? 15 Years Months party to the transaction.

Other $ /month

[X] Check if you are the Business [ ] I have an ownership share of less than 25%. Monthly Income (or Loss)

TOTAL $ 7,000.00/month

Owner or Self-Employed [X] I have an ownership share of 25% or more. $ 7,000.00

Uniform Residential Loan Application

Freddie Mac Form 65 . Fannie Mae Form 1003

<<<

Two things should jump out:

- LLMWhisperer preserves the layout of the input document in the output! This makes it easy for LLMs to get a good idea about the column layout and what different sections mean

- LLMWhisperer has rendered checkboxes and radio buttons as simple plain text! This allows LLMs to interpret the document as the user has filled it.

What we want the final JSON to look like

We want to output structured JSON using Pydantic and output something like the following so that it’s easy to process downstream. With LLMWhisperer giving us output—especially around checkboxes and radio buttons—it should be easy for us to get exactly what we want.

{

"personal_details": {

"name": "Amy America",

"ssn": "500-60-2222",

"dob": "1954-03-30T00:00:00Z",

"citizenship": "U.S. Citizen"

},

"extra_details": {

"type_of_credit": "Individual",

"marital_status": "Married",

"cell_phone": "(408) 111-2121"

},

"current_address": {

"street": "4321 Cul de Sac ST",

"city": "Los Angeles",

"state": "CA",

"zip_code": "90210",

"residing_in_addr_since_years": 10,

"residing_in_addr_since_months": 2,

"own_house": true,

"rented_house": true,

"rent": 2200,

"mailing_address_different": false

},

"employment_details": {

"business_owner_or_self_employed": true,

"ownership_of_25_pct_or_more": true

}

}

The Code for checkbox extraction

We’re able to achieve our objectives in about 100 lines of code. Let’s look at the different aspects of it.

Extracting raw text from the PDF

Using LLMWhisperer’s Python client, we can extract data from PDF documents as needed. LLMWhisperer is a cloud service and requires an API key, which you can get for free. LLMWhisperer’s free plan allows you to extract up to 100 pages of data per day, which is more than we need for this example.

def extract_text_from_pdf(file_path, pages_list=None):

llmw = LLMWhispererClient()

try:

result = llmw.whisper(file_path=file_path, pages_to_extract=pages_list)

extracted_text = result["extracted_text"]

return extracted_text

except LLMWhispererClientException as e:

error_exit(e)Just calling the whisper() method on the client, we’re able to extract raw text from images, native text PDFs, scanned PDFs, smartphone photos of documents, etc.

Defining the schema

To use Pydantic with Langchain, we define the schema or structure of the data we want to extract from the unstructured source as Pydantic classes. For our structuring use case, this is how it looks like:

class PersonalDetails(BaseModel):

name: str = Field(description="Name of the individual")

ssn: str = Field(description="Social Security Number of the individual")

dob: datetime = Field(description="Date of birth of the individual")

citizenship: str = Field(description="Citizenship of the individual")

class ExtraDetails(BaseModel):

type_of_credit: str = Field(description="Type of credit")

marital_status: str = Field(description="Marital status")

cell_phone: str = Field(description="Cell phone number")

class CurrentAddress(BaseModel):

street: str = Field(description="Street address")

city: str = Field(description="City")

state: str = Field(description="State")

zip_code: str = Field(description="Zip code")

residing_in_addr_since_years: int = Field(description="Number of years residing in the address")

residing_in_addr_since_months: int = Field(description="Number of months residing in the address")

own_house: bool = Field(description="Whether the individual owns the house or not")

rented_house: bool = Field(description="Whether the individual rents the house or not")

rent: float = Field(description="Rent amount")

mailing_address_different: bool = Field(description="Whether the mailing address is different from the current "

"address or not")

class EmploymentDetails(BaseModel):

business_owner_or_self_employed: bool = Field(description="Whether the individual is a business owner or "

"self-employed")

ownership_of_25_pct_or_more: bool = Field(description="Whether the individual owns 25% or more of a business")

class Form1003(BaseModel):

personal_details: PersonalDetails = Field(description="Personal details of the individual")

extra_details: ExtraDetails = Field(description="Extra details of the individual")

current_address: CurrentAddress = Field(description="Current address of the individual")

employment_details: EmploymentDetails = Field(description="Employment details of the individual")

Form1003 is our main class, which refers to other classes that hold more information from subsections of the form. One main thing to note here is how each field includes a description in plain English. These descriptions, along with the type of each variable are then used to construct prompts for the LLM to structure the data we need.

Constructing the prompt and calling the LLM

The following code uses Langchain to let us define the prompts to structure data from the raw text like we need it. The process_1003_information() function has all the logic to compile our final prompt from the preamble, the instructions from the Pydantic class definitions along with the extracted raw text. It then calls the LLM and returns the JSON response. That’s pretty much all there is to it. This code should give us the output we need as structured JSON.

def process_1003_information(extracted_text):

preamble = ("What you are seeing is a filled out 1003 loan application form. Your job is to extract the "

"information from it accurately.")

postamble = "Do not include any explanation in the reply. Only include the extracted information in the reply."

system_template = "{preamble}"

system_message_prompt = SystemMessagePromptTemplate.from_template(system_template)

human_template = "{format_instructions}\n\n{extracted_text}\n\n{postamble}"

human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

parser = PydanticOutputParser(pydantic_object=Form1003)

chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])

request = chat_prompt.format_prompt(preamble=preamble,

format_instructions=parser.get_format_instructions(),

extracted_text=extracted_text,

postamble=postamble).to_messages()

chat = ChatOpenAI()

response = chat(request, temperature=0.0)

print(f"Response from LLM:\n{response.content}")

return response.content

Conclusion

When dealing with forms in native or scanned PDFs, it is important to employ a text extractor that can both recognize form elements like checkboxes and radiobuttons and render them in a way that LLMs can interpret.

In this example, we saw how a 1003 form was processed taking into account various sections that made meaning only if the checkboxes and radiobuttons they contain are interpreted correctly. We saw how LLMWhisperer rendered them in plain text and how using just a handful lines of code, we were able to define a schema and pull out structured data in the exact format we need using Langchain and Pydantic.

Links to libraries, packages, and code

- The code for this guide can be found in this Github Repository

- LLMWhisperer: A general purpose text extraction service that extracts data from images and PDFs, preparing it and optimizing it for consumption by Large Language Models or LLMs.

LLMwhisperer Python client on PyPI | Try LLMWhisperer Playground for free | Learn more about LLMWhisperer - Pydantic: Use Pydantic to declare your data model. This output parser allows users to specify an arbitrary Pydantic Model and query LLMs for outputs that conform to that schema.

Related topics on PDF checkbox extraction

PDF Hell: Explore the challenges in extracting text/tables from PDF documents.

Comparing approaches for using LLMs to extract tables and text from PDFs

For the curious. Who are we, and why are we writing about PDF text extraction?

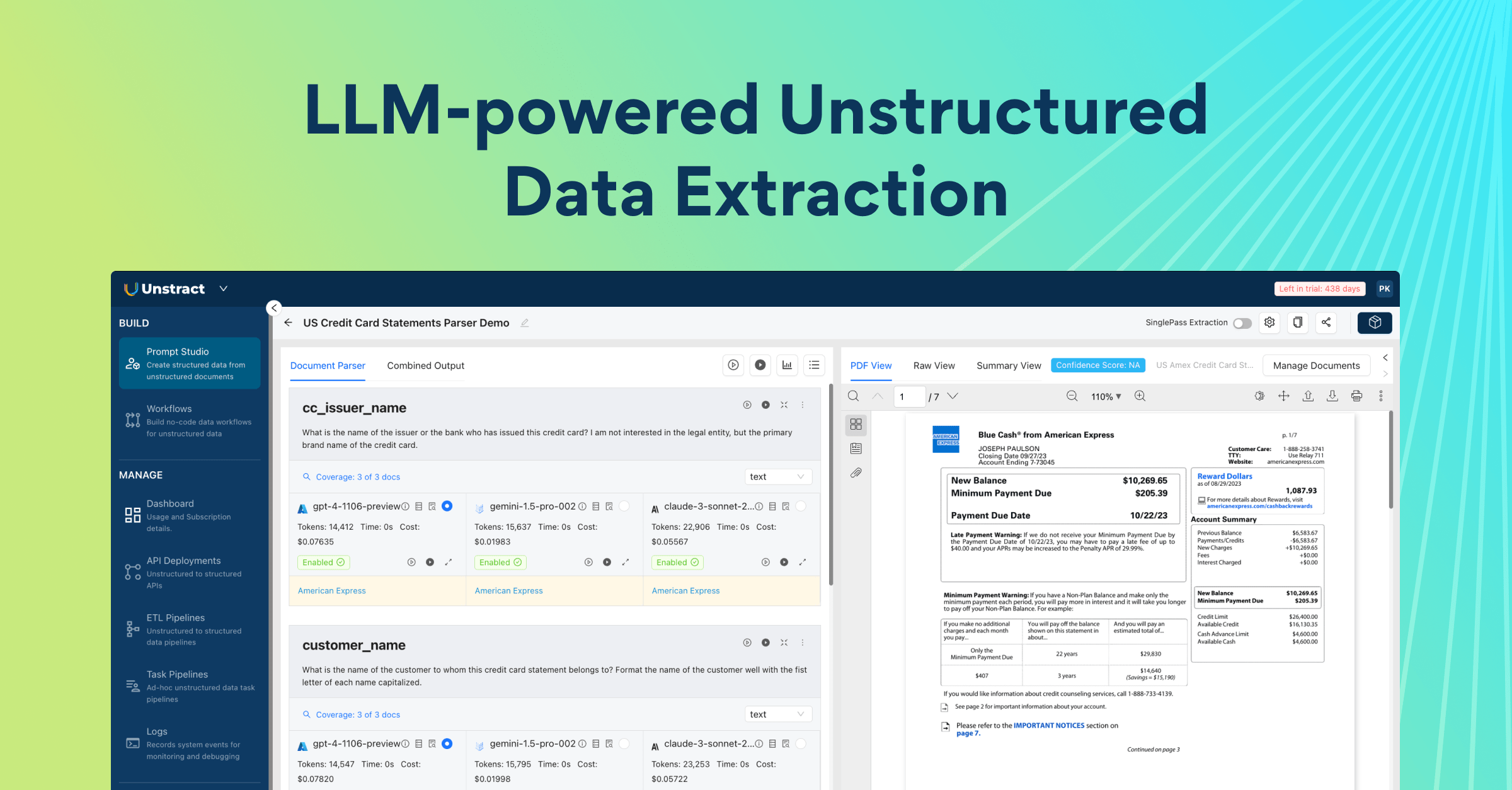

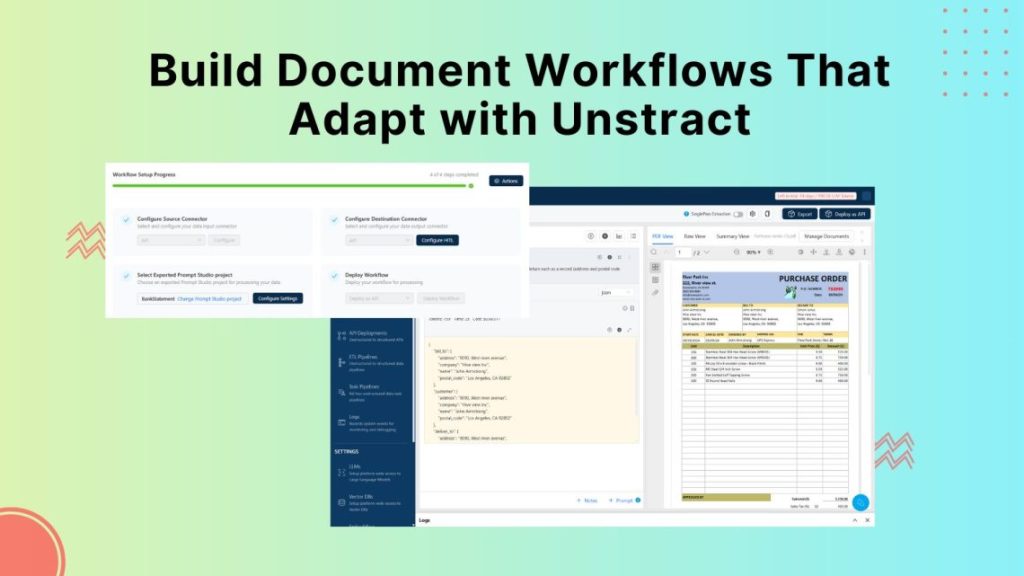

We are building Unstract. Unstract is a no-code platform to eliminate manual processes involving unstructured data using the power of LLMs. The entire process discussed above can be set up without writing a single line of code. And that’s only the beginning. The extraction you set up can be deployed in one click as an API or ETL pipeline.

With API deployments you can expose an API to which you send a PDF or an image and get back structured data in JSON format. Or with an ETL deployment, you can just put files into a Google Drive, Amazon S3 bucket or choose from a variety of sources and the platform will run extractions and store the extracted data into a database or a warehouse like Snowflake automatically. Unstract is an open-source software that is available at https://github.com/Zipstack/unstract.

Sign up for our free trial if you want to try it out quickly. More information here.

LLMWhisperer is a document-to-text converter. Prep data from complex documents for use in Large Language Models. LLMs are powerful, but their output is as good as the input you provide. Documents can be a mess: widely varying formats and encodings, scans of images, numbered sections, and complex tables.

Extracting data from these documents and blindly feeding them to LLMs is not a good recipe for reliable results. LLMWhisperer is a technology that presents data from complex documents to LLMs in a way they’re able to best understand it.

If you want to quickly take it for test drive, you can checkout our free playground.