We have all seen the power of Large Language Models in the form of a GPT-based personal assistant from OpenAI called ChatGPT. You can ask questions about the world, ask for recipes, or ask it to generate a poem about a person. We have all been awestruck by the capabilities of this personal assistant.

Unlike many other personal assistants, this is not a toy. It has significant capabilities which can increase your productivity. You can ask it to write a marketing copy or Python script for work. You can ask it to provide a detailed itinerary for a weekend getaway.

This is powered by Large Language Models (LLMs) using a technology called Generative Pre-trained Transformer (GPT). LLMs are a subset of a broader category of AI models known as neural networks, which are systems inspired by the human brain. It all started with the pivotal paper “Attention is all you need” released by Google in 2017.

Since then, brilliant scientists and engineers have created and mastered the transformer model which has created groundbreaking changes that are disrupting the status quo in things ranging from creative writing, language translation, image generation, and software coding to personalized education.

This technology harnesses the patterns in the vast quantities of text data they have been trained with to predict and generate outputs. Till now, this path-breaking technology has been used by enterprises primarily in these areas:

- Personal assistants

- Chatbots

- Content generation (marketing copies, blogs, etc)

- Question answering over documents (RAG)

One of the main capabilities of these LLMs is their ability to reason within a given context. We do not know if they reason the way we humans reason, but they do show some emergent behaviour that has the capacity to somehow do it, given the right prompts to do so. This might not match humans, but it is good enough to extract information from a given context. This extraction capability powers the question-answering use case of LLMs.

Structured data from unstructured sources

Multiple analysts estimate that up to 80% of the data available with enterprises exist in an unstructured form. That is information stored in text documents, video, audio, social media, server logs etc. It is a known fact that if enterprises can extract information from these unstructured sources it would give them a huge comparative advantage.

Unfortunately, today if we have to extract information from these unstructured sources, we need humans to do it and it is costly, slow, and error-prone. We could write applications to extract information, but that would be a very difficult and expensive project and in some cases impossible. Given the ability of LLMs to “see” patterns in text and do some form of “pseudo reasoning”, they would be a good choice to extract information from these vast troves of unstructured data in the form of PDFs and other document files.

Defining our use case

For the sake of this discussion, let us define our typical use cases. These are actual real-world use cases many of our customers have. Note that some of the customers need information extracted from tens of thousands of these types of documents every month.

Information extracted could be simple ones like personal data (name, email address, address) and complex ones like line items (details of each product/service item in the invoice, details of all companies in prior employment in resumes etc)

Most of these documents have between 1 and 20 pages and they fit into the context size of OpenAI’s GPT4 Turbo and Google’s Gemini Pro LLMs.

- Information extraction from Invoices.

- Information extraction from Resumes.

- Information extraction from Purchase orders.

- Information extraction from Medical bills.

- Information extraction from Insurance documents.

- Information extraction from Bank and Credit card statements.

- Information extraction from SaaS contracts.

Traditional RAG is an overkill for many use cases

Retrieval-Augmented Generation is a technique used in natural language processing that combines the capabilities of a pre-trained language model with information retrieval to enhance the generation of text. This method leverages the strengths of two different types of models: a language model and a document retrieval system.

RAG is typically used in a question-answering scenario. When we have a bunch of documents or one large document and we want to answer a specific question. We would use RAG techniques to:

- Determine which document contains the information

- Determine which part of the document contains the information

- Send this part of the document as a context along with the question to an LLM and get an answer

The above steps are for the simplest of RAG use cases. Libraries like Llamaindex and Langchain provide the tools to deploy a RAG solution. And they have workflows for more complex and smarter RAG implementations.

RAGs are very useful to implement smart chatbots and allow employees or customers of enterprises to interact with vast amounts of information. RAGs can be used for information extraction too, but it would be an overkill for many use cases. Sometimes it could become expensive to do so too.

We deal with some customers who need information extracted from tens of thousands of documents every month. And the information extracted is not for human consumption. The information goes straight into a database or to other downstream automated services. Here is where a simple prompt based extraction could be way more efficient than traditional RAG. Both from a cost perspective and computational complexity perspective. More information in the next section.

Prompt-based data extraction

The context windows of LLMs are increasing and the cost of LLM services are coming down. We can comfortably extrapolate this and conclude that this trend will continue into the near future. We can make use of this and use direct prompting techniques to extract information from documents.

Source document

Let’s take a couple of restaurant invoices as the source documents to explore the extraction process. An enterprise might encounter hundreds of these documents in claims processing. Also, note that these two documents are completely different in their form and layouts. Traditional machine learning and intelligent document processing (IDP) tools will not be able to parse both documents using the same learning or setups. The true power of LLMs is their ability to understand the context through language. We will see how LLMs are capable of extracting information from these documents using the same prompts.

Photo of printed restaurant invoice

PDF of restaurant invoice

Traditional machine learning and intelligent document processing (IDP) will not be able to parse different documents using the same learning or setups.

Preprocessing

LLMs required pure text as inputs. This means that all documents need to be converted to plain text. The weakest link in setting up an LLM-based toolchain to do extraction is the conversion of the original document into a pure text document which LLMs can consume as input.

Most documents available in enterprises are in PDF format. PDFs can contain text or their pages can be made of scanned documents that exist as images inside the document. Even if information is stored as text inside PDFs, extracting them is no simple task. PDFs were not designed as a text store. They contain layout information that can reproduce the “document” for printing or visual purposes. The text inside the PDFs can be broken and split at random places. They do not always follow a logical order. But they contain layout information which will be used by the PDF rendering software which will make it look as if the text is coherent to a human eye.

For example, the simple text “Hello world, welcome to PDFs” could be split up as “Hello”, “world, wel ”, “come”, “to” and “PDFs”. And the order can be mixed up too. But precise location information would be available for the rendering software to reassemble the text visually.

The PDF-to-text converter has to consider the layout information and try to reconstruct the text as intended by the author and make grammatical sense. In the case of scanned PDF documents, the information inside is in the form of images and we need to use an OCR to extract the text from the PDF

The following texts are extracted from the documents mentioned above using Unstract’s LLM Whisperer.

Data extracted from Document #1

From the photo of the physical restaurant invoice

Extracted with LLMWhisperer

BURGER SEIGNEUR

No. 35, 80 feet road,

HAL 3rd Stage,

Indiranagar, Bangalore

GST: 29AAHFL9534H1ZV

Order Number : T2- 57

Type : Table

Table Number: 2

Bill No .: T2 -- 126653

Date:2023-05-31 23:16:50

Kots: 63

Item Qty Amt

Jack The

Ripper 1 400.00

Plain Fries +

Coke 300 ML 1 130.00

Total Qty: 2

SubTotal: 530.00

GST@5% 26.50

CGST @2.5% 13.25

SGST @2.5% 13.25

Round Off : 0.50

Total Invoice Value: 557

PAY : 557

Thank you, visit again!

Powered by - POSIST

Data extracted from Document #2

PDF of restaurant invoice

Extracted with LLMWhisperer

Zomato Food Order: Summary and Receipt

Order ID: 5628294065

Order Time: 07 March 2024, 03:46 PM

Customer Name: Arun Venkataswamy

Delivery Address: Awfis, 8 Floor, Tower Main, Prestige Palladium Bayan, Greams

Road, Thousand Lights West, Thousand Lights, Chennai

Restaurant Name: Chai Kings

Restaurant Address: Old Door 28, New 10, Kader Nawaz Khan Road, Thousand Lights,

Chennai

Delivery partner's Name: Jegan Rathinam

Item Quantity Unit Price Total Price

Bun Butter Jam 1 ₹50 ₹50

Masala Pori 2 ₹25 ₹50

Ginger Chai 1 ₹158 ₹158

Taxes ₹11.84

Restaurant Packaging Charges ₹20

Delivery charge subtotal ₹39

Donate ₹4 to Feeding India ₹4

Platform fee ₹3

Free Delivery with Gold (₹39)

Coupon - (GET100) (₹100)

Total ₹196.84

Terms & Conditions (https://www.zomato.com/policies/terms-of-service/) :

1. W.e.f. 1 January 2022, for items ordered where Zomato is obligated to raise a tax invoice on behalf of the Restaurant, it can be

downloaded from the link provided in email containing order summary. For other products in the order that are not covered in Zomato

issued tax invoice, tax invoice will be provided by the Restaurant Partner directly.

2. The delivery charge and delivery surge are collected by Zomato on behalf of the person or entity undertaking delivery of this order.

3. If you have any issues or queries in respect of your order, please contact the customer chat support through Zomato platform.

4. If you need an 80G certificate for any donation made by you to Feeding India, please reach out to Feeding India team at

contact@feedingindia.org.

5. In case you need to get more information about restaurant's FSSAI status, please visit https://foscos.fssai.gov.in/ and use the FBO

search option with the FSSAI License / Registration number.

6. Please note that we do not have a customer care phone number and we never ask for any bank account details such as CVV, account

number, UPI Pin etc. across our other support channels. For your safety please do not share these details with anyone over any

medium.

Chai Kings zomato

fssaà fssai

Lic. No. 12421002002977 Lic. No. 10019064001810

Extraction prompt engineering

Constructing an extraction prompt for a LLM is an iterative process in general. We will keep tweaking the prompt till we are able to extract the information you require. In the case of generalised extraction – when the same prompt has to work over multiple different documents more care might be taken by experimenting with a sample set of documents. When I say “multiple different documents” I mean different documents with the same central context. Take for example the two documents we consider in this article. Both are restaurant invoices but their form and layouts are completely different. But their context is the same. They are restaurant invoices.

The following prompt structure is what we use while dealing with relatively big LLMs like GPT3.5, GPT4 and Gemini Pro:

- Preamble

- Context

- Grammar

- Task

- Postamble

Preamble is the text we prepend to every prompt. A typical preamble would look like this:

Your ability to extract and summarise this restaurant invoice accurately is essential for effective analysis. Pay close attention to the context's language, structure, and any cross-references to ensure a comprehensive and precise extraction of information. Do not use prior knowledge or information from outside the context to answer the questions. Only use the information provided in the context to answer the questions.

Context is the text we extracted from PDF or image

Grammar is used when we want to provide synonyms information. Especially for smaller models. For example for the document type we are considering, restaurant invoices – invoice can be “bill” in some countries. For sake of this example, we will ignore grammar information.

Task is the actual prompt or question you want to ask. The crux of the extraction.

Postamble is text we add to the end of every prompt. A typical postamble would look like this:

Do not include any explanation in the reply. Only include the extracted information in the reply.

Note that Except for the context and task none of the other sections of the prompt is compulsory.

Let’s put an entire prompt together and see the results. Let’s ignore the grammar bit for now. In this example, our task prompt would be,

Extract the name of the restaurant

The entire prompt to send to the LLM:

Your ability to extract and summarise this restaurant invoice accurately is essential for effective analysis. Pay close attention to the context's language, structure, and any cross-references to ensure a comprehensive and precise extraction of information. Do not use prior knowledge or information from outside the context to answer the questions. Only use the information provided in the context to answer the questions.

Context:

—-------

BURGER SEIGNEUR

No. 35, 80 feet road,

HAL 3rd Stage,

Indiranagar, Bangalore

GST: 29AAHFL9534H1ZV

Order Number : T2- 57

Type : Table

Table Number: 2

Bill No .: T2 -- 126653

Date:2023-05-31 23:16:50

Kots: 63

Item Qty Amt

Jack The

Ripper 1 400.00

Plain Fries +

Coke 300 ML 1 130.00

Total Qty: 2

SubTotal: 530.00

GST@5% 26.50

CGST @2.5% 13.25

SGST @2.5% 13.25

Round Off : 0.50

Total Invoice Value: 557

PAY : 557

Thank you, visit again!

Powered by - POSIST

-----------

Extract the name of the restaurant.

Your response:

Copy and paste the above prompt into ChatGPT virtual assistant. Or you may use their APIs directly to complete the prompt.

The result you get is this:

The name of the restaurant is Burger Seigneur.

If you just need the name of the restaurant and not a verbose answer, you can play around with the postamble or the task definition itself. Let’s change the task to be more specific:

Extract the name of the restaurant. Reply with just the name.

The result you get now is:

BURGER SEIGNEUR

If you construct a similar prompt for document #2, you will get the following result:

CHAI KINGS

Here is a list of task prompts and their results

Please note that if you use the same prompts in ChatGPT, the results can be a bit more verbose. These results are from the Azure OpenAI with GPT4 turbo model accessed through their API. You can always tweak the prompts to get the desired outputs.

Task Prompt 1Extract the name of the restaurantDocument 1 responseBURGER SEIGNEURDocument 2 responseChai Kings

Task Prompt 2Extract the date of the invoiceDocument 1 response2023-05-31Document 2 response07 March 2024

Task Prompt 3Extract the customer name if it is present. Else return nullDocument 1 responseNULLDocument 2 responseArun Venkataswamy

Task Prompt 4Extract the address of the restaurant in the following JSON format: {"address": "","city": ""}Document 1 response{"address": "No. 35, 80 feet road, HAL 3rd Stage, Indiranagar","city": "Bangalore"}Document 2 response{ "address": "Old Door 28, New 10, Kader Nawaz Khan Road, Thousand Lights", "city": "Chennai" }

Task Prompt 5What is the total value of the invoiceDocument 1 response557Document 2 response₹196.84

Task Prompt 6Extract the line items in the invoice in the following JSON format: [{ "item": "", "quantity": 0, "total_price": 0 }]Document 1 response[ { "item": "Jack The Ripper", "quantity": 1, "total_price": 400 }, { "item": "Plain Fries + Coke 300 ML", "quantity": 1, "total_price": 130 }] Document 2 response[ { "item": "Bun Butter Jam", "quantity": 1, "total_price": 50 }, { "item": "Masala Pori", "quantity": 2, "total_price": 50 }, { "item": "Ginger Chai", "quantity": 1, "total_price": 158 } ]

As we can see from the above table, LLMs are pretty smart with their ability to extract information from a given context. A single prompt works across multiple documents with different forms and layouts. This is a huge step up from traditional machine learning models and methods.

Post-processing

We can extract almost any piece of information from the given context using LLMs. But sometimes, it might require multiple passes with an LLM to get a result that can be directly sent to a downstream application. For example, if the downstream application or database requires a number, we have to convert the result to a number. Take a look at the invoice value extraction prompt in the above table. For document #2 it returns a number with a currency symbol. The LLM returned ₹196.84. So in this case we need to have one more step to convert the extracted information to an acceptable format. This can be done in two ways:

- Programmatically: We can programmatically convert the result into a number format. But this would be a difficult task since the formatting could include hundreds separators too. For example $1,456.34. This needs to be converted to 1456.34. Similarly, the hundreds separator could be different for different locales. Example €1.456,34.

- With LLMs: Using LLMs to convert the result into the format we require could be much easier. Since the full context is not required, the cost involved will also be relatively much smaller compared to the actual extraction itself. We can create a prompt like this:

"Convert the following to a number which can be directly stored in the database: $1,456.34. Answer with just the number. No explanations required“. Will produce the output:1456.34

Similar to numbers, we might have to post the process results for dates and boolean values too.

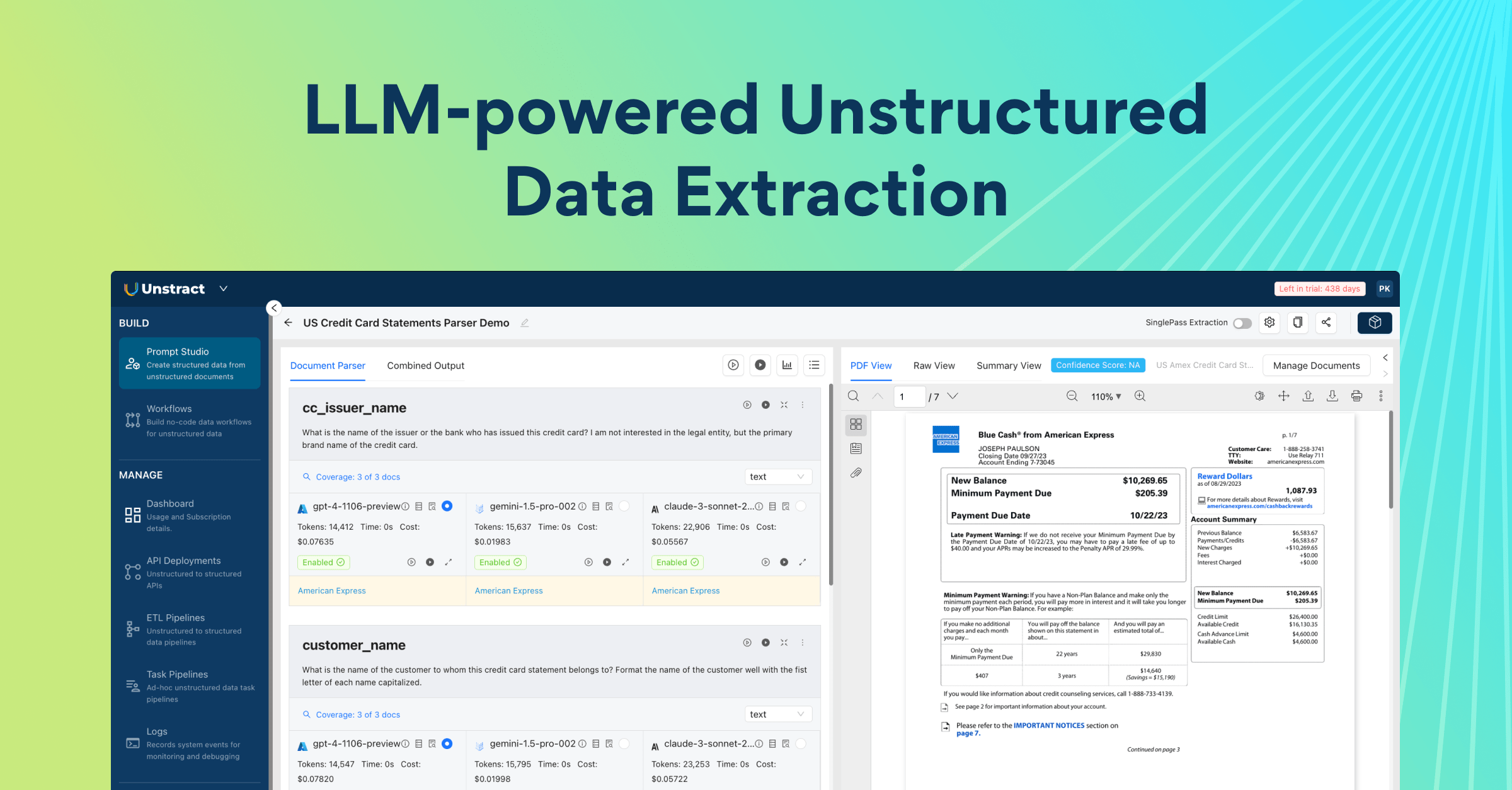

Introducing Unstract and LLMWhisperer

Unstract is a no-code platform to eliminate manual processes involving unstructured data using the power of LLMs. The entire process discussed above can be set up without writing a single line of code. And that’s only the beginning. The extraction you set up can be deployed in one click as an API or ETL pipeline.

With API deployments you can expose an API to which you send a PDF or an image and get back structured data in JSON format. Or with an ETL deployment, you can just put files into a Google Drive, Amazon S3 bucket or choose from a variety of sources and the platform will run extractions and store the extracted data into a database or a warehouse like Snowflake automatically. Unstract is an Open Source software and is available at https://github.com/Zipstack/unstract.

If you want to quickly try it out, signup for our free trial. More information here.

LLMWhisperer is a document-to-text converter. Prep data from complex documents for use in Large Language Models. LLMs are powerful, but their output is as good as the input you provide. Documents can be a mess: widely varying formats and encodings, scans of images, numbered sections, and complex tables. Extracting data from these documents and blindly feeding them to LLMs is not a good recipe for reliable results. LLMWhisperer is a technology that presents data from complex documents to LLMs in a way they’re able to best understand it.

If you want to quickly take it for test drive, you can checkout our free playground.