Over the past few months, our team has been working heads-down to deliver a series of updates designed to make your AI-powered document workflows faster, smarter, and more efficient.

From integrations with your favorite tools to new APIs, human-in-the-loop improvements, and support for advanced reasoning models—these updates are all about saving you time while giving you more control.

In this blog, we offer a detailed look at what’s new! Alternatively, you can watch our video on what’s new in Unstract below.

MCP-Enabled Document Workflows

Businesses are rethinking how work gets done with MCP-enabled workflows—and document extraction is no exception. With the new Unstract MCP server and LLMWhisperer MCP server, your LLMs can now talk directly to these tools and run document workflows inside your MCP host (like Claude Desktop or Windsurf).

No more juggling multiple apps, writing glue code, or managing extra integrations. Simply connect Unstract or LLMWhisperer alongside your other tools, enter prompts in plain language, and let MCP handle the heavy lifting.

The result? Document workflows that are faster to set up, easier to maintain, and accessible to everyone on your team.
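To give a rough idea of what “connecting” a server looks like, here is a minimal sketch that builds a host configuration in the `mcpServers` layout that Claude Desktop reads from its JSON config file. The server command and the environment variable name are placeholders we made up for illustration, so take the real values from the Unstract or LLMWhisperer MCP server documentation.

```python
import json


def build_mcp_config(server_name: str, command: str, args: list[str], env: dict[str, str]) -> dict:
    """Return a config dict in the `mcpServers` layout used by MCP hosts such as Claude Desktop."""
    return {
        "mcpServers": {
            server_name: {"command": command, "args": args, "env": env}
        }
    }


# Hypothetical values: the command path and variable name below are placeholders.
config = build_mcp_config(
    server_name="unstract",
    command="/path/to/unstract-mcp-server",
    args=[],
    env={"UNSTRACT_API_KEY": "<your-api-key>"},
)

# Print the JSON you would merge into the host's config file.
print(json.dumps(config, indent=2))
```

Once the host picks up an entry like this, the server’s tools show up alongside your other MCP tools and can be called from plain-language prompts.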

Want to dive deeper? Check out our MCP Webinar for a hands-on walkthrough and real-world demos.

Unstract-n8n Integration

You can now use Unstract inside n8n, the popular open-source workflow automation tool. This integration makes it easy to automate document-heavy processes that involve complex logic, decision-making, and routing without manual effort.

Unstract is available as a community node in n8n—just connect it to your account and start building. Here’s how it works:

- Build your document extraction project in Prompt Studio.

- Deploy it as an API.

- Plug it into your n8n workflows using the Unstract node.

With n8n, document extraction flows seamlessly into your broader business pipelines. Each node can be inspected individually, so you always know what’s happening at every step. And if you need clean, LLM-ready text, the LLMWhisperer node has you covered.

Together, these nodes unlock intelligent, agentic document workflows with minimal human intervention—freeing your team to focus on higher-value work.

Want to see it in action? Watch the Unstract-n8n webinar for a full walkthrough and live demo.

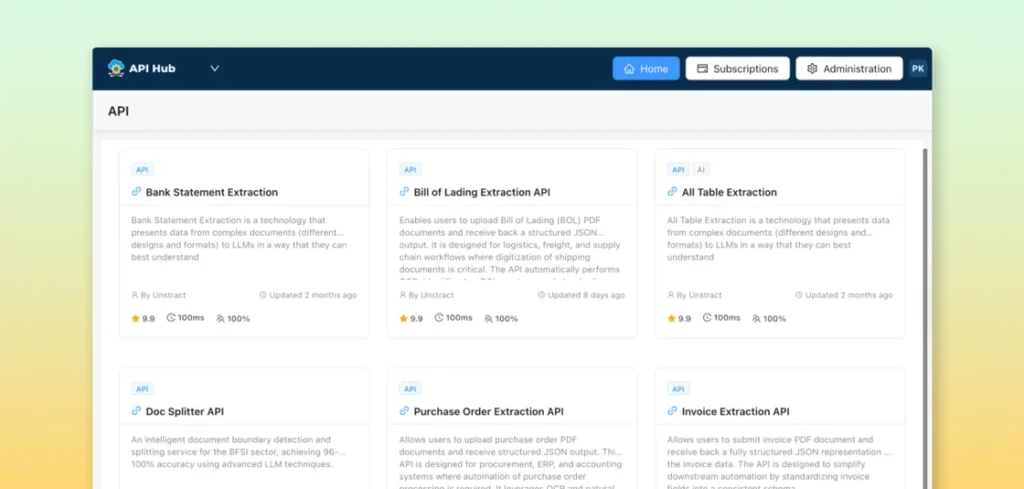

Unstract API Hub

Unstract’s new API Hub helps you skip lengthy development cycles with ready-to-use, plug-and-play APIs for document extraction. Each API is prebuilt and tested to deliver domain-specific structured data from documents like invoices, purchase orders, bills of lading, bank statements, and more.

In addition to these domain APIs, you also get access to powerful utility APIs:

- PDF Splitter – In many industries, it’s common to receive a single PDF that combines multiple documents. Manually splitting these files can be slow and error-prone. With the PDF Splitter API, you can send in one combined PDF and get back a neatly split set of documents—packaged in a zip file. This service uses advanced LLM techniques for intelligent document boundary detection, achieving 96–100% accuracy.

- All Table Extractor – Sometimes you don’t need the whole document, just the data-rich tables. The All Table Extractor API pulls only the tables from uploaded files, making it especially useful in finance, legal, and other data-heavy workflows.
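Since the PDF Splitter hands back a zip file, the client side mostly comes down to unpacking it. Here is a minimal sketch of that step using only the Python standard library. The archive is built in memory as a stand-in for a real response, and the file names inside it are invented for the demo.

```python
import io
import zipfile


def list_split_documents(zip_bytes: bytes) -> dict[str, int]:
    """Map each file in the returned archive to its size in bytes."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return {
            info.filename: info.file_size
            for info in archive.infolist()
            if not info.is_dir()
        }


def make_demo_zip() -> bytes:
    """Build a small in-memory zip that stands in for a splitter response."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("invoice.pdf", b"%PDF-1.4 demo invoice")
        archive.writestr("bill_of_lading.pdf", b"%PDF-1.4 demo bill")
    return buffer.getvalue()


documents = list_split_documents(make_demo_zip())
for name, size in documents.items():
    print(f"{name}: {size} bytes")
```

In a real integration you would pass the response body to `list_split_documents`, or call `extractall` on the archive to write the split files to disk.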

And we’re just getting started—support for more document types and utility APIs is on the way.

Learn more and explore the available APIs in our complete webinar on Unstract’s API Hub.

Human-In-The-Loop Enhancements

Unstract’s Human-in-the-Loop (HITL) feature makes it simple to review and validate fields extracted by LLMs—and we’ve just rolled out improvements that make the review process easier, faster, and more secure.

Here are the three key enhancements:

- Expanded format support for highlighting – Previously, source highlighting worked only with PDFs. With this update, we’ve expanded support to include text and image files. While Unstract can structure data from many more formats, highlighting is currently limited to formats browsers can natively display.

- Multiple-instance highlighting – Sometimes an extracted field appears in multiple places in the same document. Earlier, reviewers could only see the first instance. Now, you can cycle through every occurrence of the extracted field in the document, giving you deeper visibility and control during manual reviews.

- Time-to-live (TTL) for sensitive docs – Documents sent to the HITL queue used to stay there indefinitely if left unattended. With TTL, you can now define an expiration window so sensitive files are automatically removed from the review queue if not actioned within the set time.
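If it helps to picture the TTL behavior, here is a small sketch of the idea: every queued document carries the time it entered the queue, and anything older than the expiration window is dropped. This is only an illustration of the concept, not how Unstract implements it, and the class and function names are our own.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class QueuedDocument:
    name: str
    queued_at: datetime


def drop_expired(queue: list[QueuedDocument], ttl: timedelta, now: datetime) -> list[QueuedDocument]:
    """Keep only the documents that have waited in the queue for less than `ttl`."""
    return [doc for doc in queue if now - doc.queued_at < ttl]


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
queue = [
    QueuedDocument("payslip.pdf", now - timedelta(hours=30)),  # older than the TTL
    QueuedDocument("invoice.pdf", now - timedelta(hours=2)),   # still within the TTL
]

remaining = drop_expired(queue, ttl=timedelta(hours=24), now=now)
print([doc.name for doc in remaining])
```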

Below, you’ll see multiple-instance highlighting in action.

To see demos of all three HITL improvements, head over to our Product Updates Video.

Check out our HITL Webinar for a deeper dive into how HITL fits into real-world workflows.

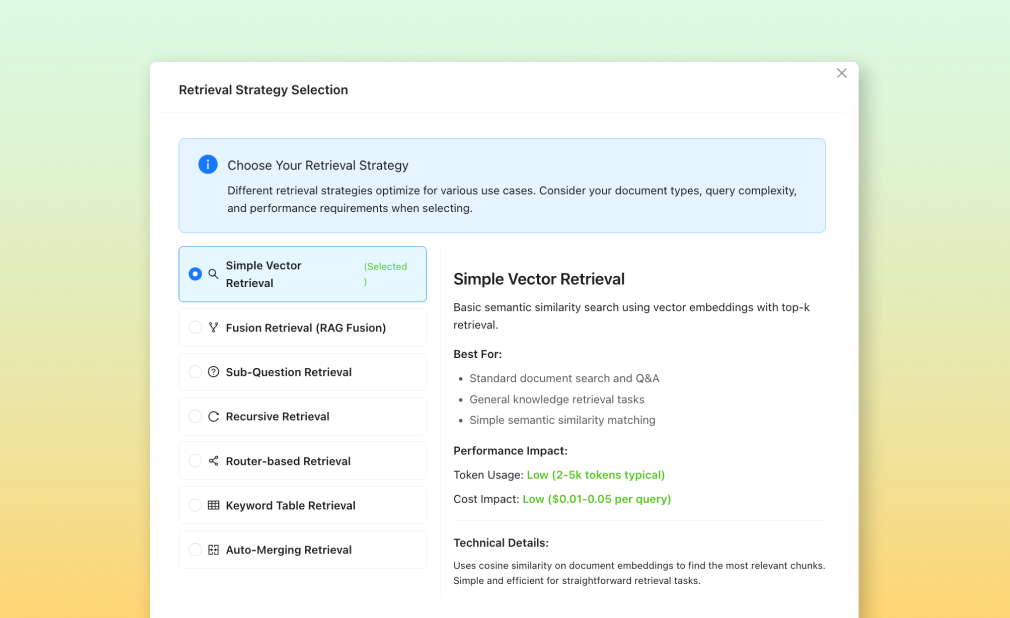

Advanced Retrieval Strategies

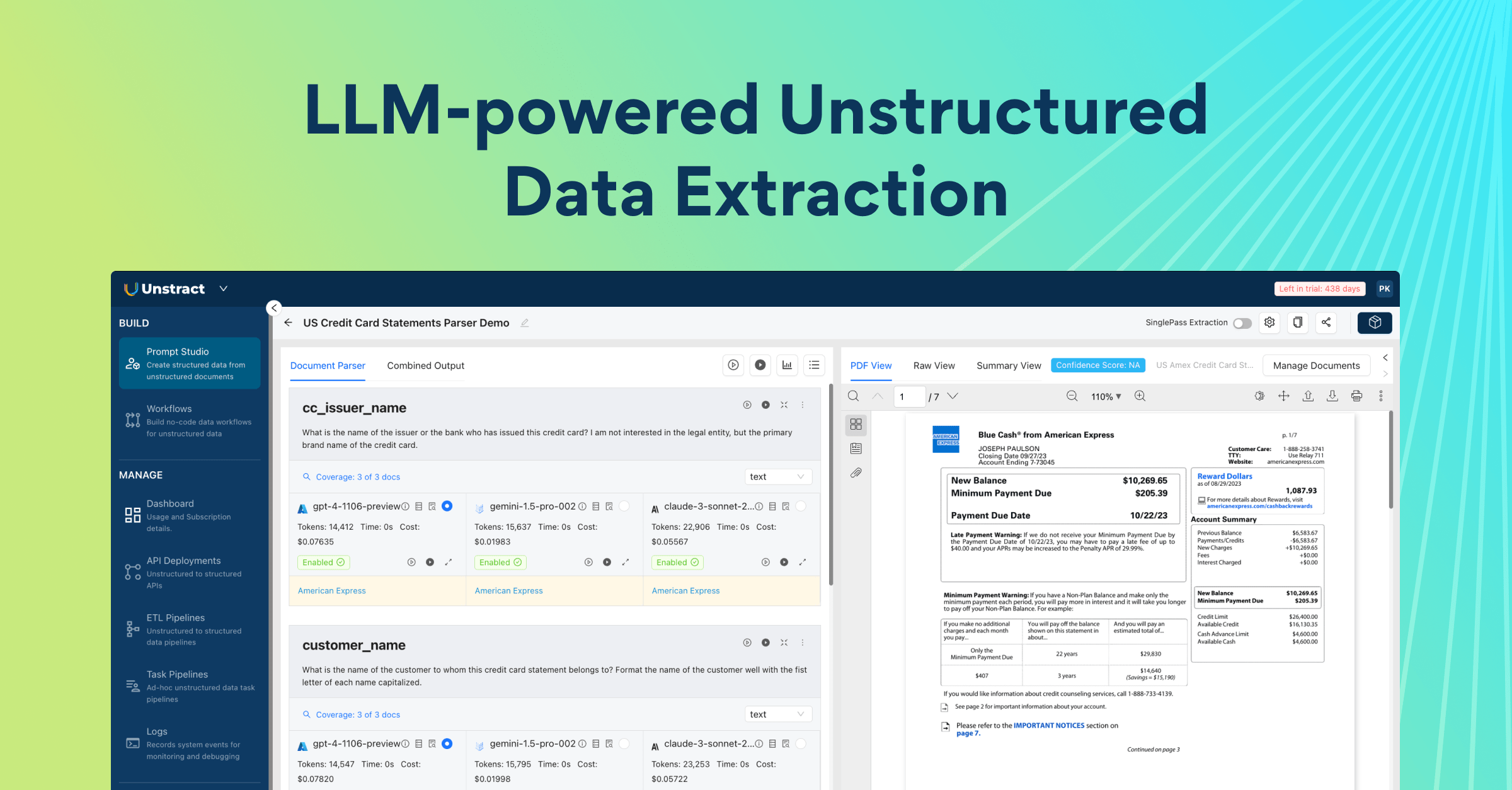

Large documents don’t always fit into your LLM’s context window. To handle this, you need a retrieval strategy: a way to break the document into smaller chunks and surface only the most relevant parts for processing. Without this step, anything beyond the context window never reaches the model, so it can’t extract data from those parts of the document.

Unstract now supports multiple advanced retrieval strategies, each with its own trade-offs in speed, cost, and accuracy. In Prompt Studio, you can easily switch between strategies and experiment to see which one delivers the best results for your project.

This gives you the flexibility to balance cost efficiency and accuracy—so whether you’re working on lightweight automations or mission-critical workflows, you can choose the strategy that fits best.
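To make the idea concrete, here is a deliberately simple retrieval sketch: split the text into overlapping word chunks, score each chunk by how many words it shares with the question, and keep the top results. Keyword overlap is our stand-in for illustration only. It is not one of Unstract’s strategies, which are not detailed in this post, and production setups typically rely on embeddings or similar techniques.

```python
import re


def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunks of `chunk_size` words, sharing `overlap` words between neighbors."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(chunks: list[str], question: str, k: int) -> list[str]:
    """Return the k chunks that share the most tokens with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(question_tokens & tokenize(c)), reverse=True)
    return ranked[:k]


text = "alpha beta gamma delta total amount due is 500 dollars epsilon zeta"
chunks = chunk_text(text, chunk_size=4, overlap=0)
best = top_chunks(chunks, "What is the total amount due?", k=1)
print(best)
```

Changing `chunk_size`, `overlap`, or the scoring function is the kind of trade-off between cost and accuracy that switching strategies lets you explore.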

Import & Export Prompt Studio Projects

Prompt Studio in Unstract is where prompt engineering comes to life—but real-world development isn’t always static. You might start a project in the cloud and later move to a self-hosted setup, test in staging before going live, or switch regions and need to carry your projects with you.

Until now, migrating Prompt Studio projects across environments was tedious and manual. That’s a thing of the past. You can now export your entire project as a JSON file, including all prompts, grammar, preambles, and postambles.

This makes it easy to:

- Collaborate seamlessly across teams

- Create reusable templates for similar document types

- Move projects between cloud, self-hosted, staging, or production environments without losing configuration

To bring a project back into any environment, simply click Import Project, upload the JSON file, and you’re back up and running—no configuration lost.

It’s a simple, powerful way to keep your Prompt Studio projects portable, shareable, and production-ready.
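Because the export is plain JSON, it also fits neatly into scripts and version control. The sketch below round-trips a project file and runs a basic sanity check before importing it elsewhere. The field names are assumptions made for the example, since the actual export schema is not described here.

```python
import json
import tempfile
from pathlib import Path

# Assumed field names for illustration; check a real export for the actual schema.
REQUIRED_KEYS = {"name", "prompts", "preamble", "postamble", "grammar"}


def export_project(project: dict, path: Path) -> None:
    """Write the project to disk as a JSON file."""
    path.write_text(json.dumps(project, indent=2), encoding="utf-8")


def import_project(path: Path) -> dict:
    """Load a project file and fail early if expected keys are missing."""
    project = json.loads(path.read_text(encoding="utf-8"))
    missing = REQUIRED_KEYS - set(project)
    if missing:
        raise ValueError(f"export is missing keys: {sorted(missing)}")
    return project


project = {
    "name": "Invoice extraction",
    "prompts": [{"key": "invoice_number", "prompt": "What is the invoice number?"}],
    "preamble": "Answer using only the document.",
    "postamble": "Return just the value.",
    "grammar": [],
}

with tempfile.TemporaryDirectory() as tmp:
    export_path = Path(tmp) / "invoice_project.json"
    export_project(project, export_path)
    restored = import_project(export_path)

print(restored == project)
```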

Direct API Deployments from Prompt Studio

Why build an entire workflow if you just need an API? With our latest update, you can instantly deploy any Prompt Studio project as an API—straight from the export menu.

Simply click Export in your project and select Create API Deployment. Within seconds, your project is live as an API, ready to plug into your apps or automations.

This streamlined approach gets your API endpoint up and running faster, saving time and eliminating unnecessary manual steps.
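Once the deployment is live, calling it from your own code is a regular HTTP request. Here is a minimal sketch that prepares a file upload with the Python standard library. The endpoint URL, the bearer-token header, and the `files` form field are all assumptions for illustration, so copy the real values from your deployment’s details in Unstract.

```python
import urllib.request
import uuid


def build_upload_request(url: str, api_key: str, filename: str, content: bytes,
                         field_name: str = "files") -> urllib.request.Request:
    """Prepare a multipart/form-data POST that carries a single file."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="{field_name}"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: application/octet-stream\r\n\r\n",
        content,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    headers = {
        "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Hypothetical endpoint and key, used only to show the shape of the request.
request = build_upload_request(
    url="https://example.invalid/deployment/api/my-org/invoice-extractor/",
    api_key="demo-key",
    filename="invoice.pdf",
    content=b"%PDF-1.4 demo",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```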

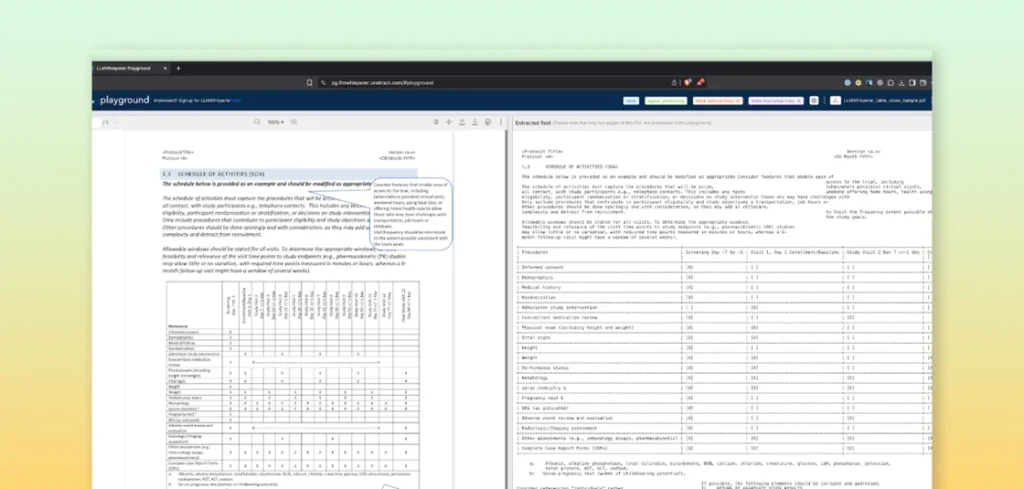

Vertical Text Handling in LLMWhisperer

Authors often use vertical or rotated column headers in tables to save space. With the latest update, LLMWhisperer now converts such tables into an expanded, horizontal format, making them much easier for downstream LLMs to interpret.

This approach prioritizes functionality over perfect visual fidelity, ensuring that the structure and meaning of the table remain clear and usable for document processing and analysis tasks. Wide tables with vertical headers are no longer a barrier to accurate data extraction.

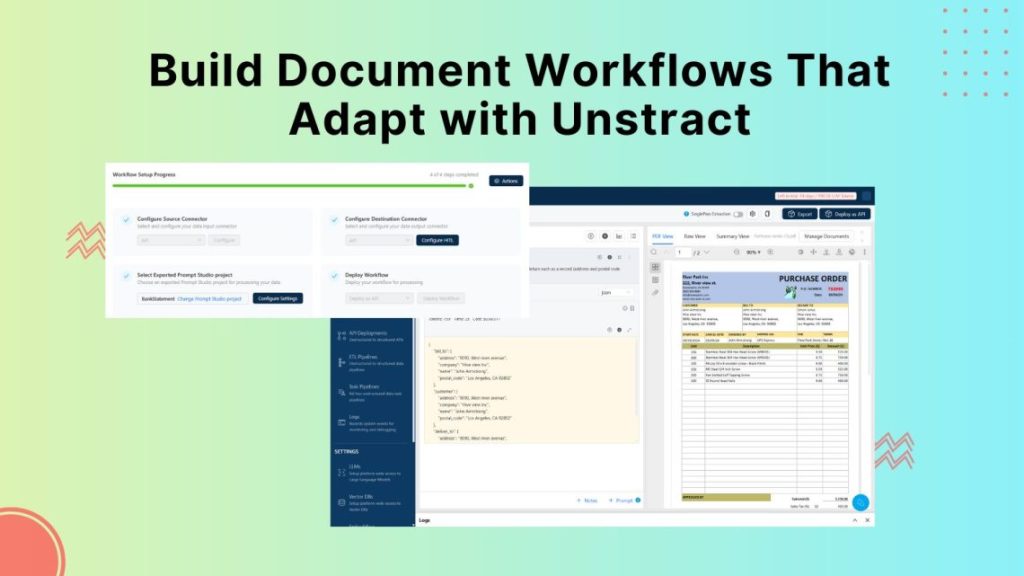

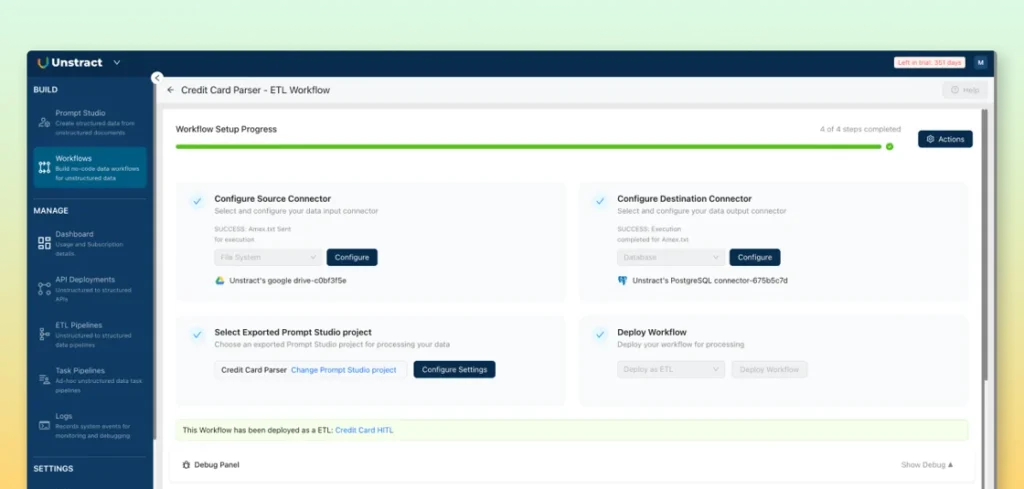

Simplified Workflows Interface

We’ve redesigned the workflows interface to make building pipelines simpler, faster, and more intuitive. Key features are now easier to find, so you can get your work done with fewer clicks and less friction.

At the same time, we’ve retained the Debug Panel, so you can still trace and debug every step.

The new workflow interface is both efficient and transparent, giving you full control without slowing you down.

Centralized Connectors

Previously, when building ETL or task pipelines, you had to enter credentials for each pipeline—even if you were connecting to the same endpoints multiple times.

With centralized connectors, that’s no longer necessary. You can now define a connector once from the Connectors menu and reuse it across all your pipelines. Even better, we’ve automatically created connectors for your existing pipelines using the credentials you had already entered, so you’re ready to go without any extra setup.

This update saves time, reduces redundancy, and makes managing multiple pipelines simpler and more secure.
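Conceptually, the change is from “every pipeline stores its own credentials” to “every pipeline points at a named connector.” The sketch below illustrates that pattern with made-up names and endpoints. It is not Unstract’s data model, just a way to see why rotating a credential now takes a single edit.

```python
from dataclasses import dataclass


@dataclass
class Connector:
    endpoint: str
    credentials: dict


@dataclass
class Pipeline:
    name: str
    source: str       # name of a connector in the registry
    destination: str  # name of a connector in the registry


# Connectors are defined once, in one place.
connectors = {
    "s3-inbox": Connector("s3://example-inbox", {"access_key": "OLD"}),
    "warehouse": Connector("postgres://example-db", {"password": "secret"}),
}

# Pipelines only reference connectors by name.
pipelines = [
    Pipeline("invoices", source="s3-inbox", destination="warehouse"),
    Pipeline("statements", source="s3-inbox", destination="warehouse"),
]


def resolve(pipeline: Pipeline, registry: dict[str, Connector]) -> tuple[Connector, Connector]:
    """Look up the source and destination connectors for a pipeline."""
    return registry[pipeline.source], registry[pipeline.destination]


# Rotating the key once updates every pipeline that uses the connector.
connectors["s3-inbox"].credentials["access_key"] = "NEW"
keys_in_use = [resolve(p, connectors)[0].credentials["access_key"] for p in pipelines]
print(keys_in_use)
```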

Extended Support for Reasoning Models

Complex documents sometimes demand reasoning models for better accuracy. Unstract now supports reasoning models from Anthropic, Amazon Bedrock, and Azure OpenAI, giving you more options for tackling edge cases.

Cross-Region Inference for AWS Bedrock

Unstract now supports Amazon Bedrock’s Cross-Region Inference, which lets Bedrock route requests automatically across multiple AWS regions. This dynamic routing helps maintain availability and throughput when demand spikes in a single region.

Other Updates

We’re wrapping up with a few smaller—but impactful—updates to the platform:

- Drag-and-Drop Uploads in Prompt Studio – Uploading files is now faster and smoother with a simple drag-and-drop interface.

- Enhanced Auto-Scaling for Enterprise LLMWhisperer Users – Enterprise customers will notice faster, more responsive auto-scaling, helping handle heavy workloads with ease.

- Expanded File Support in LLMWhisperer Playground – You can now upload all file types accepted by the API, not just PDFs. While some files may not display a preview in your browser, their extracted data remains fully accessible.

- New API Deployment Option – Instead of uploading files in the POST body, you can now pass a pre-signed S3 URL. The API will download the file from S3 and structure it automatically, simplifying integrations and improving workflow efficiency.
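If you plan to use the pre-signed URL option, a quick pre-flight check can save a failed request. The sketch below parses a URL and confirms it carries the standard AWS Signature Version 4 query parameters, then reports how long the link stays valid. The example URL is fabricated. The parameter name Unstract expects for passing the URL is not covered in this post, so the sketch stops at validation.

```python
from urllib.parse import parse_qs, urlparse

# Query parameters that AWS Signature Version 4 pre-signed URLs carry.
REQUIRED_PARAMS = ("X-Amz-Signature", "X-Amz-Expires", "X-Amz-Date")


def presigned_url_info(url: str) -> dict:
    """Check that a URL looks pre-signed and return its signing time and lifetime."""
    query = parse_qs(urlparse(url).query)
    missing = [param for param in REQUIRED_PARAMS if param not in query]
    if missing:
        raise ValueError(f"not a pre-signed URL, missing: {missing}")
    return {
        "signed_at": query["X-Amz-Date"][0],
        "expires_in_seconds": int(query["X-Amz-Expires"][0]),
    }


# Fabricated example URL with a dummy signature.
demo_url = (
    "https://example-bucket.s3.amazonaws.com/invoice.pdf"
    "?X-Amz-Algorithm=AWS4-HMAC-SHA256"
    "&X-Amz-Date=20250101T000000Z"
    "&X-Amz-Expires=3600"
    "&X-Amz-SignedHeaders=host"
    "&X-Amz-Signature=abc123"
)

info = presigned_url_info(demo_url)
print(info)
```

Keep the expiry window long enough for the API to download the file, especially when requests are queued.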

These updates are designed to make everyday tasks smoother, more flexible, and better suited to real-world enterprise workflows.

Wrap Up

And that’s a wrap for this round of updates!

All these features are live and ready for you to explore. We’d love to hear how you’re using them — your feedback helps shape what we build next.

If you haven’t checked out the latest updates yet, now’s the perfect time to dive in. Sign in today and experience a simpler, faster document processing workflow — we think you’ll love the improvements.

Have any questions or need guidance? Schedule a demo or reach out to us on Slack — we’re here to help.