Introduction

In today’s complex data ecosystems, the ability to connect intelligent APIs with familiar development and productivity tools is no longer a luxury; it is a necessity.

This article provides an in-depth walkthrough of Unstract’s MCP (Model Context Protocol) Server and demonstrates how it can be integrated with popular MCP hosts such as Cursor and Claude Desktop.

Through a working demonstration, you’ll see exactly how Unstract turns complex document extraction workflows into a few simple API calls, all from within the applications you already use every day.

By the end of this guide, you will have a clear understanding of:

- Unstract’s MCP capabilities – how the server implements the MCP specification to deliver context-aware extraction services.

- Host integration – the steps required to hook Unstract into Cursor and Claude Desktop, enabling in-app querying of source documents without switching contexts.

- Practical use cases – real-world scenarios showing how data engineers and insurance underwriters can dramatically speed up their workflows.

For customers and prospects evaluating document-extraction platforms, knowing that Unstract is fully MCP-ready means you can plug it into your existing toolchain with minimal friction.

Whether you’re embedding extraction logic in a developer IDE or empowering business teams with conversational AI assistants, Unstract’s MCP Server ensures that your organization can scale intelligent data access wherever it’s needed most.

Understanding the MCP Ecosystem

What is an MCP Server?

The Model Context Protocol (MCP) is an open specification designed to standardize how AI models—particularly large language models—interact with external tools, data sources, and applications.

At its core, MCP defines:

- A unified API surface: A consistent set of endpoints and message formats that any compliant client or server can implement.

- A discoverable tool registry: A way for AI clients to learn what “tools” (e.g., document extractors, search engines, calculators) a host application can expose.

- Contextual execution semantics: A protocol for passing rich context (prompts, metadata, user inputs) along with tool invocations, so the model can reason about which tool to call and how to interpret its response.

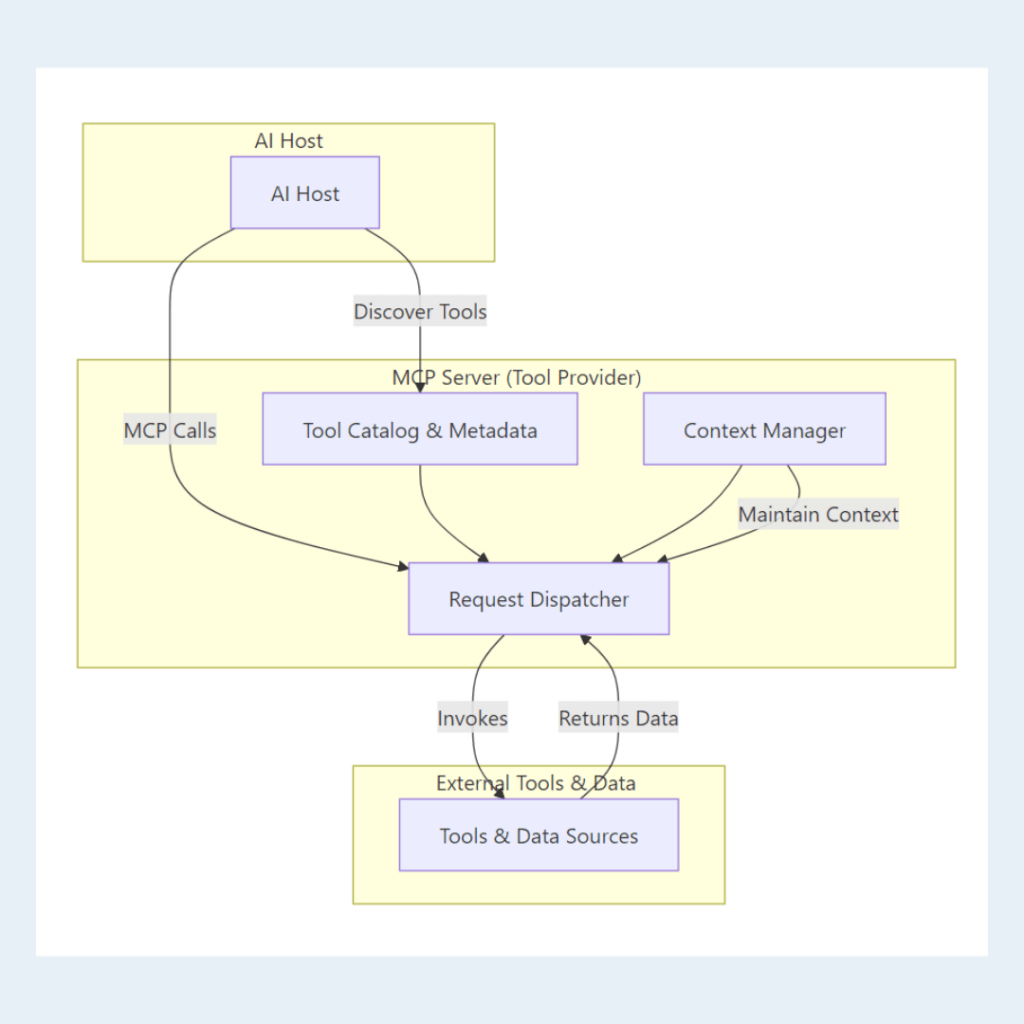

An MCP server sits at the heart of this ecosystem as the “tool provider”:

- Tool Catalog & Metadata: It advertises the set of extraction routines, prompt templates, and helper functions that AI clients can invoke.

- Request Dispatcher: It receives standardized MCP calls from AI hosts (e.g., “extract loan terms from this PDF”), maps them to the appropriate internal routines or microservices, and returns structured results.

- Context Manager: It maintains session state and shared context—document locations, user preferences, authentication tokens—so that multi-step or conversational workflows remain coherent.

By conforming to MCP, Unstract’s MCP server becomes a drop-in component for any host or client that speaks the protocol, ensuring seamless, context-aware integration across IDEs, chat interfaces, and automated pipelines.
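To make the catalog and dispatcher roles concrete, here is a minimal in-memory sketch in Python. It is not Unstract’s implementation and does not use the MCP SDK; the names ToolRegistry, register, describe, and dispatch, and the toy extract_loan_terms tool with its file_path argument, are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolRegistry:
    """Toy stand-in for a server's tool catalog and request dispatcher."""

    _handlers: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    _descriptions: Dict[str, str] = field(default_factory=dict)

    def register(self, name: str, description: str, handler: Callable[..., Any]) -> None:
        # Tool Catalog: remember the handler and the metadata advertised to clients.
        self._handlers[name] = handler
        self._descriptions[name] = description

    def describe(self) -> List[Dict[str, str]]:
        # What a client would receive when it asks which tools are available.
        return [
            {"name": name, "description": text}
            for name, text in sorted(self._descriptions.items())
        ]

    def dispatch(self, name: str, arguments: Dict[str, Any]) -> Any:
        # Request Dispatcher: map a tool name to its handler and run it.
        if name not in self._handlers:
            raise KeyError(f"unknown tool: {name}")
        return self._handlers[name](**arguments)


def extract_loan_terms(file_path: str) -> Dict[str, str]:
    # Hypothetical tool: returns fixed values instead of reading the file.
    return {"file": file_path, "loan amount": "$162,000"}


registry = ToolRegistry()
registry.register("extract_loan_terms", "Extract loan terms from a PDF", extract_loan_terms)

catalog = registry.describe()
result = registry.dispatch("extract_loan_terms", {"file_path": "/tmp/loan.pdf"})
print(catalog)
print(result)
```

A real server would add the Context Manager role on top of this, for example by keeping per-session state next to the handlers.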

Why MCP Matters

Modern AI-driven workflows often span multiple tools, data repositories, and user interfaces—each with its own API or plugin model.

MCP unifies these disparate components under a single, well-defined contract, delivering three key advantages:

Enhanced Productivity for Developers & Data Engineers

- Rapid tooling adoption: Rather than writing bespoke adapters for every new service or model, teams implement MCP once and immediately gain access to any compliant host or server.

- Consistent debugging: Standardized request and response formats mean logs, error messages, and test harnesses work the same way across all integrations, reducing cognitive overhead when troubleshooting.

- Extensible architecture: Adding new extraction routines, prompt templates, or data sources is as simple as registering them in the MCP server’s catalog—no changes required in the host application.

Empowered Analysts & Business Users

- In-app querying: Analysts can submit natural-language queries (e.g., “What are the key terms in this contract?”) directly from their IDE or chat assistant, rather than exporting and reimporting documents across multiple tools.

- Self-service insights: By surfacing extraction capabilities as discoverable “tools,” MCP enables non-technical users to explore and leverage advanced features without writing custom scripts.

Seamless Integration & Reduced Context-Switching

- Single pane of glass: Whether you’re in Cursor, Claude Desktop, or another MCP-enabled host, the interface for invoking document-extraction, search, or transformation remains identical.

- Fluid workflows: Engineers no longer juggle multiple windows or manual data transfers—every step from prompt formulation to API invocation happens inline, preserving focus and minimizing interruptions.

- Consistent security boundary: Authentication, authorization, and auditing are centralized in the MCP server, so hosts don’t need custom security logic for each integration.

By adopting MCP, organizations gain a future-proof integration layer that scales with evolving AI capabilities—delivering faster time-to-value, lower maintenance costs, and a better end-user experience across teams.

Components of an MCP Ecosystem

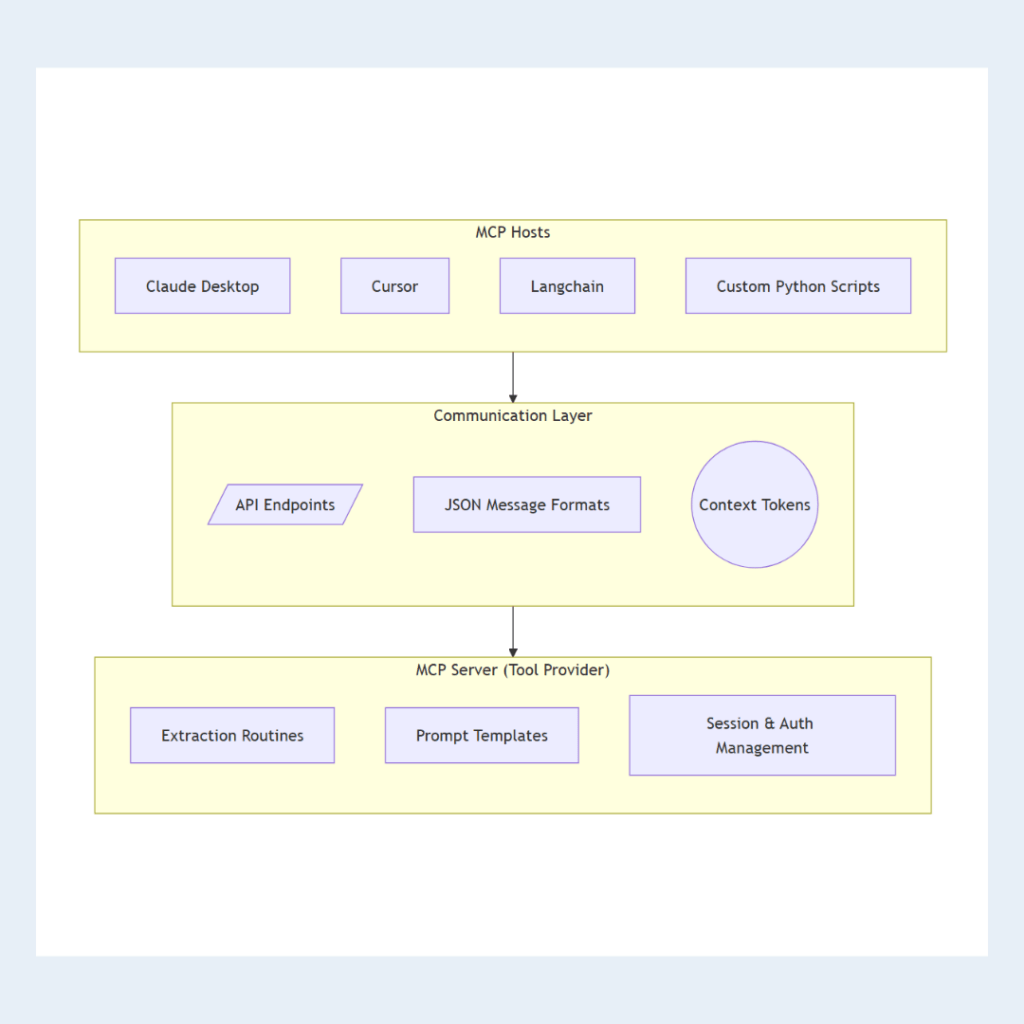

An MCP ecosystem is built around three fundamental elements, each playing a distinct role in enabling seamless AI–tool interoperability:

MCP Host

The host is the client application that initiates MCP calls and presents results to the user.

Examples include:

- Claude Desktop: A chat-based interface that can call out to external tools to enrich its conversational context.

- Cursor: A developer-focused code editor whose built-in chat can call MCP tools for in-IDE queries.

- Custom Python Scripts: Lightweight scripts to invoke MCP endpoints directly for ad-hoc data extraction or automation tasks.

- Business Intelligence Platforms: BI tools (e.g. Tableau, Power BI) with MCP plugins that allow end users to run natural-language extraction queries on connected document repositories.

MCP Server

The server acts as the “tool provider,” implementing the MCP specification to expose and execute a catalog of services.

For Unstract’s MCP server, this includes:

- Extraction routines for PDFs, Word docs, insurance forms, and more.

- Prompt templates and helper functions for specialized tasks (e.g., table parsing, key-value extraction).

- Session management to track user context and maintain authentication across multiple requests.

Communication Layer

This is the standardized protocol that glues hosts and servers together.

It consists of:

- Transports and Methods: MCP messages are JSON-RPC 2.0 requests and responses carried over a transport such as stdio or HTTP. Hosts call methods such as tools/list to fetch tool metadata and tools/call to submit execution requests.

- Message Formats: JSON schemas for tool descriptions, invocation payloads (prompt, parameters, document references), and structured responses (extracted fields, debug logs).

- Context Tokens: Session or conversation identifiers that flow with each request, ensuring that multi-turn or stateful workflows remain coherent.

Together, these components form a flexible, extensible framework: hosts consume the server’s available tools through the communication layer, while the server handles execution, context management, and result formatting—allowing users to focus on solving domain problems rather than plumbing.
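As one concrete rendering of these message formats: in the published MCP specification, hosts and servers exchange JSON-RPC 2.0 messages, with tools/list used for discovery and tools/call for invocation. The sketch below only builds the two request payloads as Python dictionaries. The helper jsonrpc_request and the file_path argument name are assumptions made for illustration; the real argument names come from the schema the server returns for each tool.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Each JSON-RPC request carries an id so the response can be matched to it.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request message (hypothetical helper)."""
    message: Dict[str, Any] = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": method,
    }
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invocation: call one tool by name with its arguments.
# "file_path" is an assumed argument name, used here only as an example.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "unstract_tool", "arguments": {"file_path": "/tmp/ACORD-Insurance-form.pdf"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice an MCP client library builds and sends these messages for you; the point here is only to show how small the wire format is.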

Introducing the Unstract MCP Server

Unstract’s MCP Server is a purpose-built implementation of the Model Context Protocol, combining Unstract’s leading document-extraction engine with a standardized, discoverable tool interface.

By packaging extraction routines, prompt templates, and session management into a single, MCP-compliant service, it enables rapid integration across a range of AI hosts and developer environments.

What Makes Unstract’s MCP Server Unique

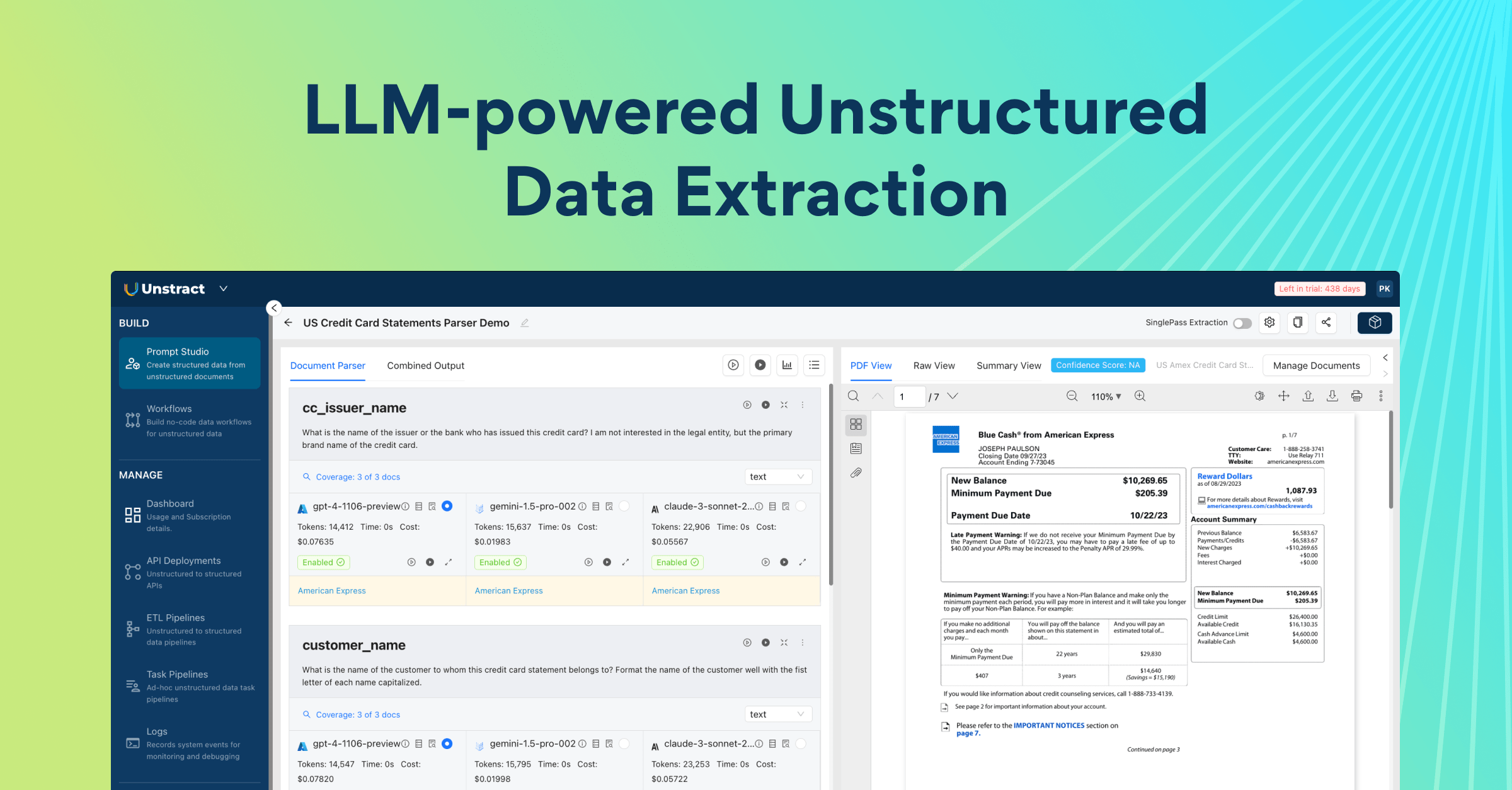

Native Prompt Studio Integration: Unstract’s no-code Prompt Studio sits alongside the MCP server, enabling prompt engineers to spin up new extraction workflows (e.g., key-value mappings, table parsers) from sample documents in minutes—automatically exposed as MCP tools.

End-to-End Extraction Engine: Under the hood, the same high-accuracy, layout-preserving extraction pipeline that powers Unstract’s API is leveraged by the MCP server, ensuring consistent results whether you call the API directly or via an MCP host.

Built-in Session & Context Management: The server maintains conversation and extraction state—document pointers, authentication tokens, user preferences—eliminating the need for hosts to re-implement context tracking for multi-turn workflows.

Robust Error Handling & Observability: Timeouts, retries, configurable back-off, and detailed logs make MCP integrations reliable and easy to debug in production.
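For readers unfamiliar with the retry-and-back-off pattern mentioned above, here is a generic Python sketch of retries with exponential back-off. It is an illustration of the technique, not Unstract’s code; call_with_retries, its parameters, and the simulated flaky_extract function are all hypothetical.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run operation, retrying on timeouts and connection errors.

    The wait doubles after every failed attempt and is capped at max_delay.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except (TimeoutError, ConnectionError):
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error to the caller.
            sleep(min(max_delay, base_delay * 2 ** (attempt - 1)))
    raise AssertionError("unreachable")


# Simulated extraction call that times out twice before succeeding.
attempts = {"count": 0}


def flaky_extract() -> dict:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError("simulated timeout")
    return {"status": "ok"}


# Record the delays instead of sleeping so the demo finishes instantly.
delays: List[float] = []
outcome = call_with_retries(flaky_extract, sleep=delays.append)
print(outcome, delays)
```

Passing the sleep function as a parameter keeps the helper easy to test, since a test can record the delays rather than wait for them.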

Capabilities and Compatibility

Full MCP Specification Compliance: Supports MCP tool discovery (tools/list), tool invocation (tools/call), and context propagation, making it a drop-in tool provider for any MCP-enabled host.

Flexible Deployment Models: Can run as a standalone container behind your firewall, in a public cloud, or as part of a managed Unstract platform—giving you control over data residency, scaling, and security.

Language & Framework Agnostic: Works with any host capable of HTTP/JSON calls: chat clients (Claude Desktop), IDEs (Cursor), orchestration frameworks (LangChain), custom scripts, notebooks, and BI tools.

Multi-Tenant & Configurable: Namespace isolation, per-project API keys, and environment-driven configurations allow teams to share a single MCP server instance without stepping on each other’s toes.

Setup Guide: Deploying an MCP-Ready Project with Unstract

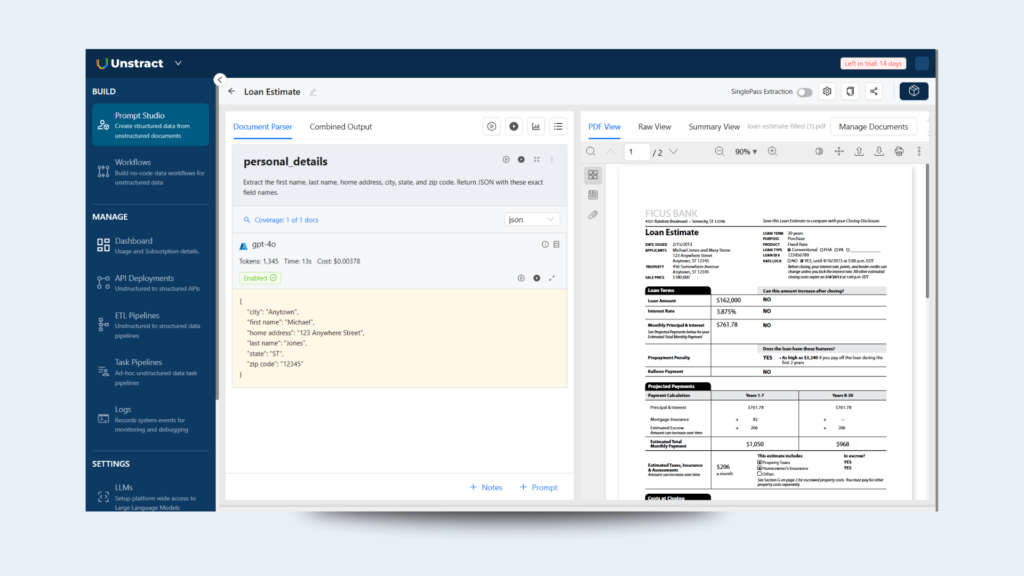

Unstract’s Prompt Studio is the interface where users design, test, and refine AI-powered extraction workflows—all without writing a single line of code.

These projects form the core of what is ultimately exposed as tools via an Unstract MCP Server.

In this section, we’ll walk through how to create and deploy a Prompt Studio project that extracts key fields from structured or semi-structured documents, such as insurance or loan forms.

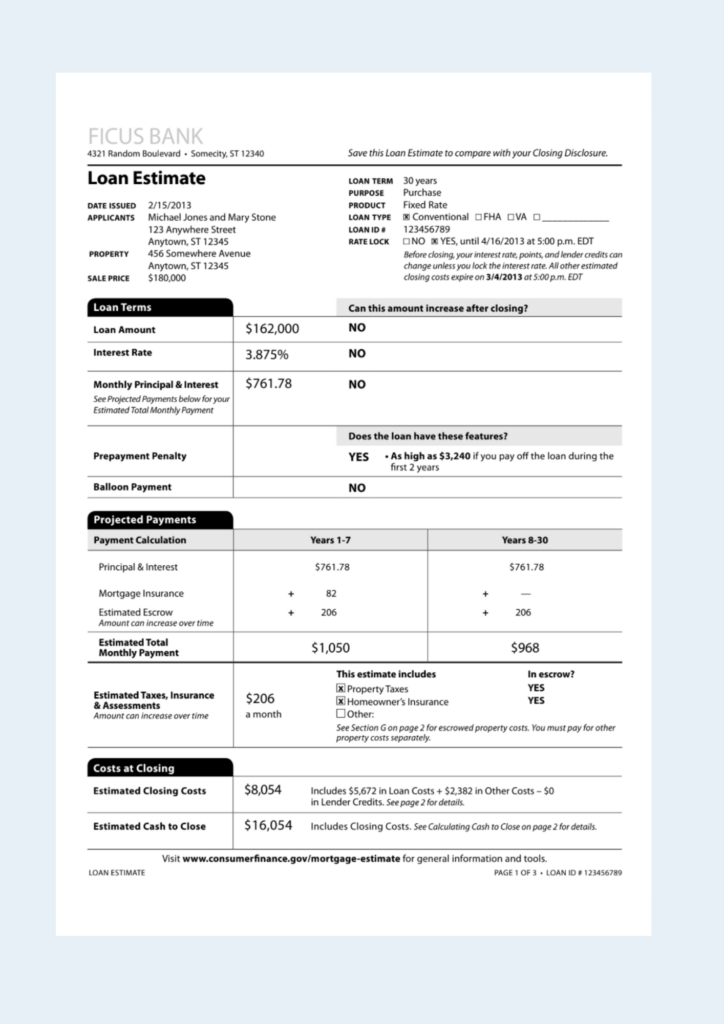

For the examples in this section, we will use a loan form:

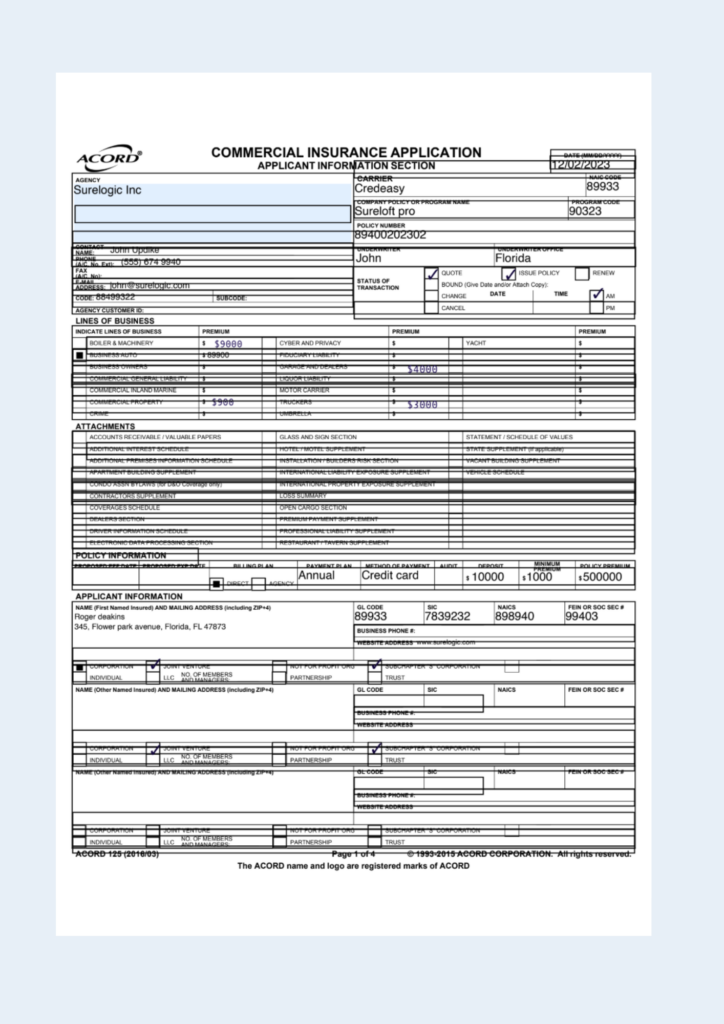

And an insurance form:

The result: an API-ready tool that can be invoked from any MCP host like Claude Desktop or Cursor.

Getting Started

First, visit the Unstract website and create a free account.

You’ll gain access to a 14-day trial with $10 in LLM credits, giving you immediate access to Prompt Studio and Unstract’s advanced extraction engine.

Creating a New Prompt Studio Project

- Navigate to Prompt Studio.

- Click New Project, and give it a name like Loan Estimate.

- Upload your test document (e.g., a Loan Estimate form) under Manage Documents.

Now you’re ready to start crafting prompts that extract structured data from the form.

Example Prompt 1 – Personal Information:

Extract the first name, last name, home address, city, state, and zip code. Return JSON with these exact field names.

Make sure the output format is set to JSON. On running the prompt, the response will be a structured object like:

{

"city": "Anytown",

"first name": "Michael",

"home address": "123 Anywhere Street",

"last name": "Jones",

"state": "ST",

"zip code": "12345"

}

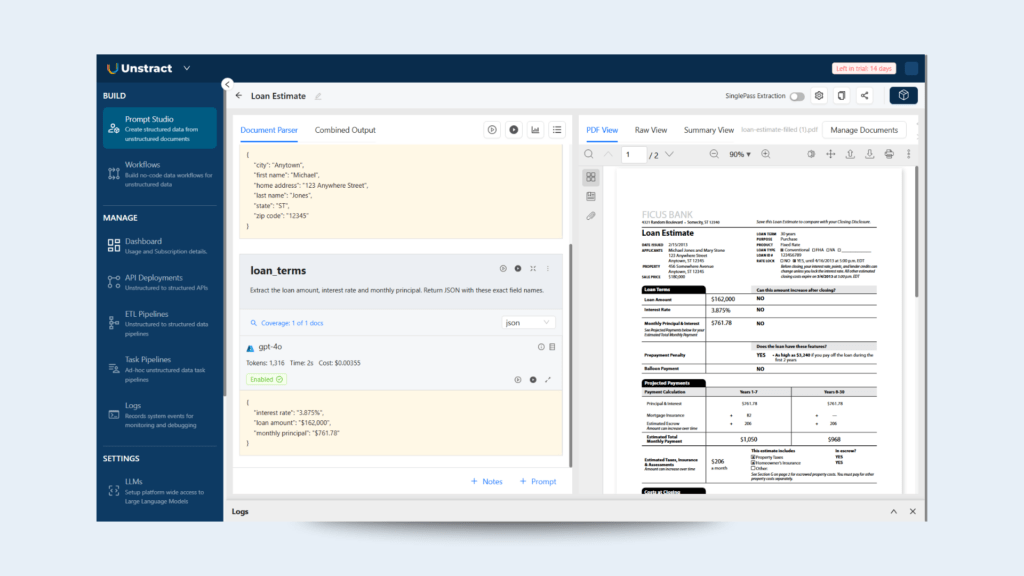

Example Prompt 2 – Loan Terms:

Extract the loan amount, interest rate and monthly principal. Return JSON with these exact field names.

Make sure the output format is set to JSON. On running the prompt, the response will be a structured object like:

{

"interest rate": "3.875%",

"loan amount": "$162,000",

"monthly principal": "$761.78"

}

You can test and iterate on your prompts until extraction is accurate and repeatable.

The combined output should be similar to:

{

"personal_details": {

"city": "Anytown",

"first name": "Michael",

"home address": "123 Anywhere Street",

"last name": "Jones",

"state": "ST",

"zip code": "12345"

},

"loan_terms": {

"interest rate": "3.875%",

"loan amount": "$162,000",

"monthly principal": "$761.78"

}

}
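If you consume this combined output in your own code, the extracted values arrive as strings. The following sketch shows one way the two prompt outputs could be grouped under their prompt names and how amounts such as "$162,000" could be converted to numbers. The helpers combine_outputs and parse_amount are hypothetical, and this says nothing about how Prompt Studio assembles the result internally.

```python
import json
from decimal import Decimal
from typing import Dict

# The two prompt outputs shown earlier in this section.
personal_details = {
    "city": "Anytown",
    "first name": "Michael",
    "home address": "123 Anywhere Street",
    "last name": "Jones",
    "state": "ST",
    "zip code": "12345",
}
loan_terms = {
    "interest rate": "3.875%",
    "loan amount": "$162,000",
    "monthly principal": "$761.78",
}


def combine_outputs(**sections: Dict[str, str]) -> Dict[str, Dict[str, str]]:
    """Group each prompt's output under the prompt's name."""
    return {name: dict(fields) for name, fields in sections.items()}


def parse_amount(text: str) -> Decimal:
    """Turn strings such as "$162,000" or "3.875%" into a Decimal."""
    cleaned = text.replace("$", "").replace(",", "").replace("%", "").strip()
    return Decimal(cleaned)


combined = combine_outputs(personal_details=personal_details, loan_terms=loan_terms)
loan_amount = parse_amount(combined["loan_terms"]["loan amount"])
interest_rate = parse_amount(combined["loan_terms"]["interest rate"])

print(json.dumps(combined, indent=2))
print(loan_amount, interest_rate)
```

Decimal is used instead of float so that currency values keep their exact digits.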

Deploying as an API (MCP-Ready)

Once your prompts are configured and tested, you can deploy the project as an API—which will enable it to be used with the Unstract MCP server.

Step 1: Export as Tool

- In Prompt Studio, click Export as Tool (top-right corner).

- This action makes your prompt logic callable from any MCP-compatible host.

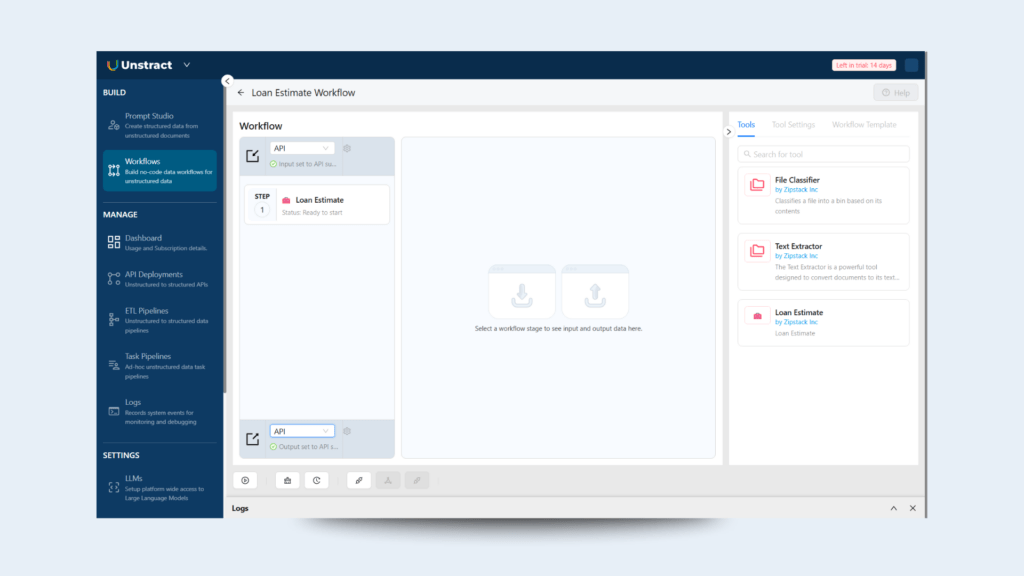

Step 2: Create a Workflow

- Go to BUILD → Workflows.

- Click + New Workflow, and drag your tool (e.g., Loan Estimate) into the editor.

- This workflow defines the logic and order of operations that the server will execute.

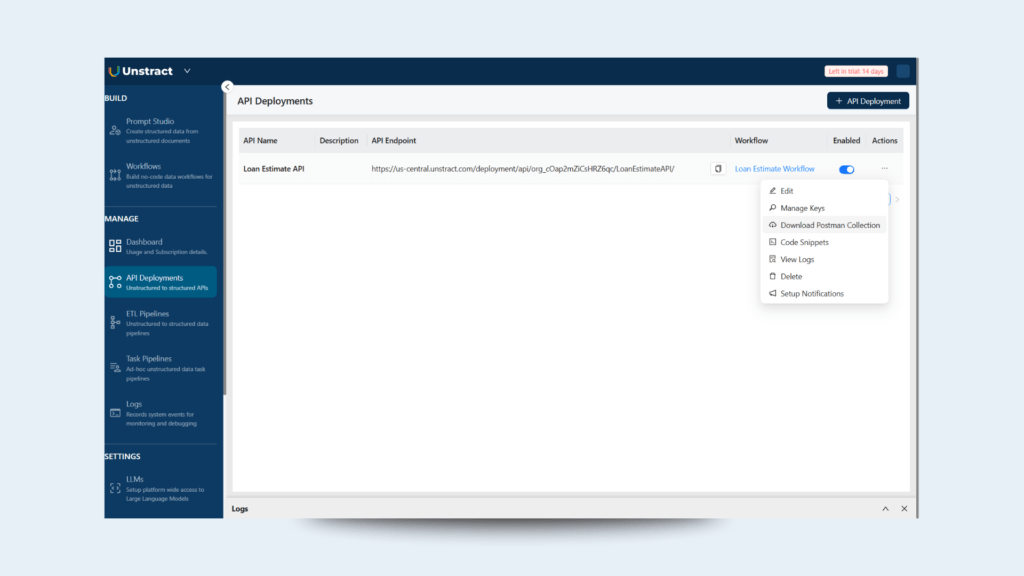

Step 3: Deploy API

- Go to MANAGE → API Deployments.

- Click + API Deployment and select your workflow.

- The deployment page provides management tools:

- Generate API keys

- Download a Postman collection for testing

- Monitor logs and usage

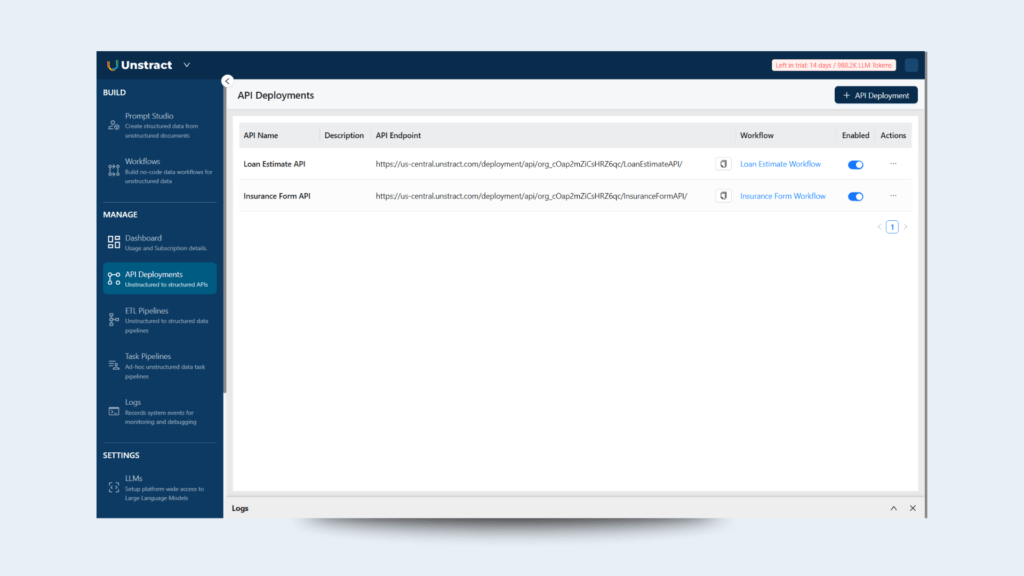

You can repeat the same steps for the insurance form. At the end, you should have a JSON output for the insurance form similar to:

{

"personal_details": {

"email": "john@surelogic.com",

"name": "John Updike",

"phone": "(555) 674 9940"

},

"line_business": {

"BOILER & MACHINERY": "$9000",

"BUSINESS AUTO": "$89900",

"COMMERCIAL PROPERTY": "$900",

"MOTOR TRUCKERS": "$3000",

"YACHT": "$4000"

}

}

And the corresponding API:

Use Case Demonstrations

Use Case 1: Integration with Cursor

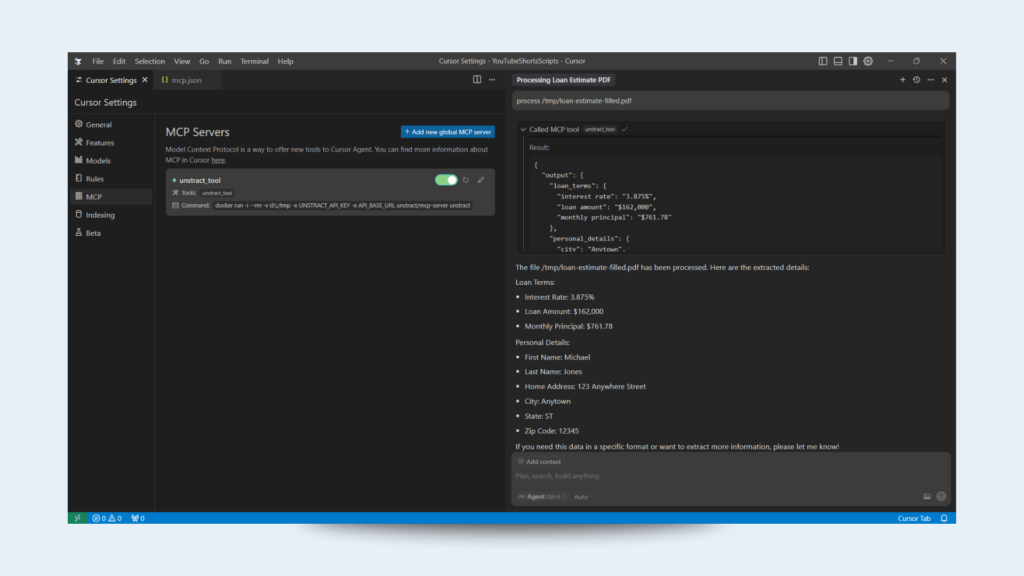

Scenario: A data engineer queries a PDF source document (e.g., a Loan Estimate) through the Unstract API, directly from the Cursor chat.

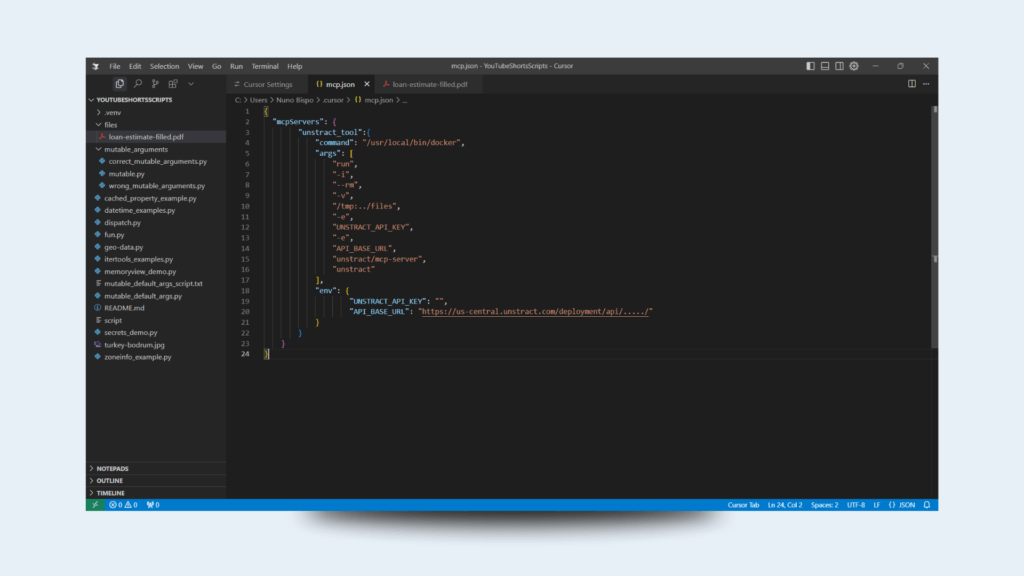

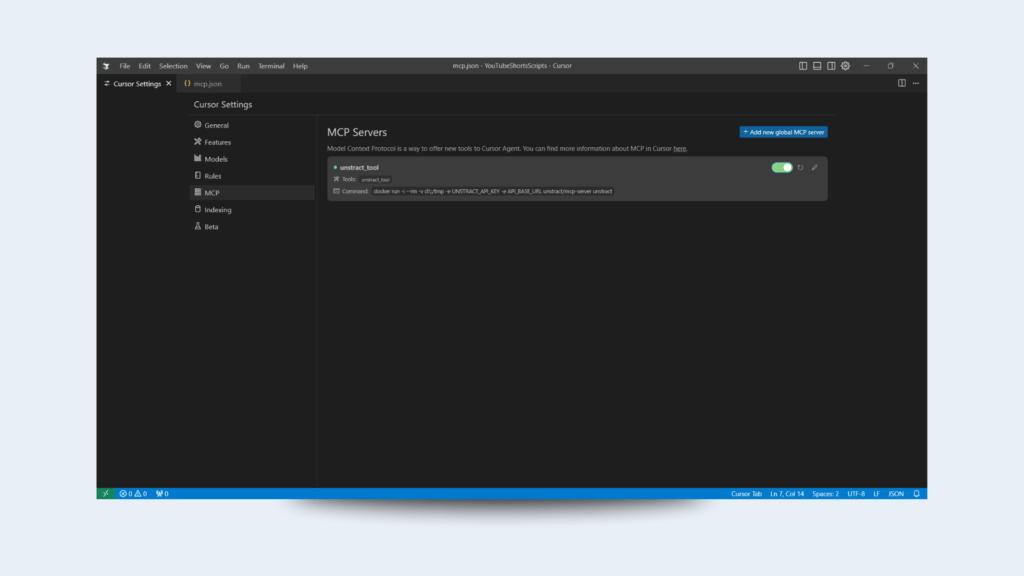

Open ‘Cursor Settings’ and navigate to the option ‘MCP’:

Then click on ‘Add new global MCP server’:

Fill in the following information:

{

"mcpServers": {

"unstract_tool":{

"command": "/usr/local/bin/docker",

"args": [

"run",

"-i",

"--rm",

"-v",

"/tmp:/tmp",

"-e",

"UNSTRACT_API_KEY",

"-e",

"API_BASE_URL",

"unstract/mcp-server",

"unstract"

],

"env": {

"UNSTRACT_API_KEY": "",

"API_BASE_URL": "https://us-central.unstract.com/deployment/api/...../"

}

}

}

}

If on Windows, then:

{

"mcpServers": {

"unstract_tool": {

"command": "docker",

"args": [

"run",

"-i",

"--rm",

"-v",

"d:\\:/tmp",

"-e",

"UNSTRACT_API_KEY",

"-e",

"API_BASE_URL",

"unstract/mcp-server",

"unstract"

],

"env": {

"UNSTRACT_API_KEY": "",

"API_BASE_URL": "https://us-central.unstract.com/deployment/api/...../"

}

}

}

}

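In the env block, set UNSTRACT_API_KEY and API_BASE_URL to the API key and endpoint URL of the API deployment you created in the previous section.

Also change the host side of the -v volume mapping (/tmp or d:\ in the examples above) to the folder where your documents are stored. That folder is mounted as /tmp inside the container.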
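If you maintain this configuration for several machines, generating it can be less error-prone than editing it by hand. The sketch below rebuilds the same JSON structure from three inputs. The function build_mcp_config and the example folder /home/me/documents are hypothetical; the Docker arguments are copied from the configuration shown above.

```python
import json
from typing import Any, Dict


def build_mcp_config(
    docs_dir: str,
    api_base_url: str,
    api_key: str,
    docker_command: str = "docker",
) -> Dict[str, Any]:
    """Return an mcpServers entry that mounts docs_dir as /tmp in the container."""
    return {
        "mcpServers": {
            "unstract_tool": {
                "command": docker_command,
                "args": [
                    "run", "-i", "--rm",
                    "-v", f"{docs_dir}:/tmp",  # host folder : container folder
                    "-e", "UNSTRACT_API_KEY",
                    "-e", "API_BASE_URL",
                    "unstract/mcp-server",
                    "unstract",
                ],
                "env": {
                    "UNSTRACT_API_KEY": api_key,
                    "API_BASE_URL": api_base_url,
                },
            }
        }
    }


# Placeholder values: substitute your own key, URL, and folder.
config = build_mcp_config(
    docs_dir="/home/me/documents",
    api_base_url="<YOUR_API_BASE_URL>",
    api_key="<YOUR_UNSTRACT_API_KEY>",
    docker_command="/usr/local/bin/docker",
)
print(json.dumps(config, indent=2))
```

The printed JSON can be pasted into the MCP settings as-is once the placeholders are replaced.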

Once the Docker container starts up, you will see a list of available tools:

Note: If it doesn’t show the green icon, click the refresh icon to restart it.

Open a chat and process the document:

Note: In case there is an error message mentioning ‘file not found’, restart Cursor to make sure the MCP server can find the correct file.
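A ‘file not found’ error may also come from passing the host path rather than the path inside the container: with the -v mapping, files in the mounted host folder appear under /tmp in the container. The following sketch translates a host path into the path the tool would see. The function to_container_path and the Windows example path are hypothetical.

```python
from pathlib import PurePosixPath, PureWindowsPath


def to_container_path(
    host_file: str,
    host_root: str,
    container_root: str = "/tmp",
    windows_host: bool = False,
) -> str:
    """Map a file under the mounted host folder to its path in the container.

    Raises ValueError if host_file is not inside host_root.
    """
    path_cls = PureWindowsPath if windows_host else PurePosixPath
    relative = path_cls(host_file).relative_to(path_cls(host_root))
    return str(PurePosixPath(container_root).joinpath(*relative.parts))


# Linux/macOS example: /tmp on the host is mounted as /tmp in the container.
linux_path = to_container_path("/tmp/ACORD-Insurance-form.pdf", "/tmp")

# Windows example: d:\ on the host is mounted as /tmp in the container.
windows_path = to_container_path(
    "d:\\forms\\ACORD-Insurance-form.pdf", "d:\\", windows_host=True
)

print(linux_path)
print(windows_path)
```

In the chat prompt, refer to the document by its container path, which is why the examples in this article use paths that start with /tmp.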

Use Case 2: Integration with Claude Desktop

Scenario: An insurance underwriter queries an Acord Insurance document using Claude Desktop + Unstract API.

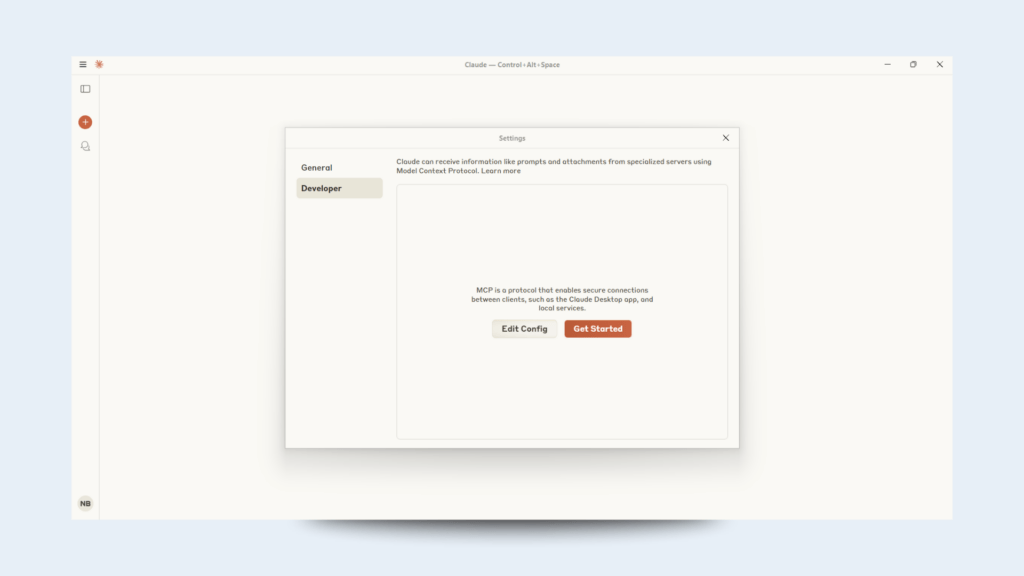

First, start by downloading and installing Claude Desktop if you have not done so already: https://claude.ai/download

After installing and logging in, navigate to the menu ‘File->Settings’ and then ‘Developer’:

Click on ‘Edit Config’ and it will navigate to the location of the file claude_desktop_config.json.

Open the file in your preferred text editor and fill in the corresponding information:

{

"mcpServers": {

"unstract_tool":{

"command": "/usr/local/bin/docker",

"args": [

"run",

"-i",

"--rm",

"-v",

"/tmp:/tmp",

"-e",

"UNSTRACT_API_KEY",

"-e",

"API_BASE_URL",

"unstract/mcp-server",

"unstract"

],

"env": {

"UNSTRACT_API_KEY": "",

"API_BASE_URL": "https://us-central.unstract.com/deployment/api/...../"

}

}

}

}

If on Windows, then:

{

"mcpServers": {

"unstract_tool": {

"command": "docker",

"args": [

"run",

"-i",

"--rm",

"-v",

"d:\\:/tmp",

"-e",

"UNSTRACT_API_KEY",

"-e",

"API_BASE_URL",

"unstract/mcp-server",

"unstract"

],

"env": {

"UNSTRACT_API_KEY": "",

"API_BASE_URL": "https://us-central.unstract.com/deployment/api/...../"

}

}

}

}

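In the env block, set UNSTRACT_API_KEY and API_BASE_URL to the API key and endpoint URL of the API deployment you created earlier.

Also change the host side of the -v volume mapping (/tmp or d:\ in the examples above) to the folder where your documents are stored. That folder is mounted as /tmp inside the container.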
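If claude_desktop_config.json already lists other MCP servers, the new entry has to be merged in rather than pasted over them. Here is a minimal sketch of that merge, run against a temporary file so it does not touch your real configuration. The function add_mcp_server and the pre-existing other_tool entry are hypothetical; for real use, point config_path at the file that ‘Edit Config’ opens.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict


def add_mcp_server(config_path: Path, name: str, server: Dict[str, Any]) -> Dict[str, Any]:
    """Add or replace one entry under mcpServers, keeping the other entries."""
    text = config_path.read_text(encoding="utf-8") if config_path.exists() else ""
    config: Dict[str, Any] = json.loads(text) if text.strip() else {}
    config.setdefault("mcpServers", {})[name] = server
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


# Server entry copied from the configuration above (placeholders left in place).
unstract_server = {
    "command": "docker",
    "args": [
        "run", "-i", "--rm", "-v", "/tmp:/tmp",
        "-e", "UNSTRACT_API_KEY", "-e", "API_BASE_URL",
        "unstract/mcp-server", "unstract",
    ],
    "env": {
        "UNSTRACT_API_KEY": "<YOUR_UNSTRACT_API_KEY>",
        "API_BASE_URL": "<YOUR_API_BASE_URL>",
    },
}

with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = Path(tmp_dir) / "claude_desktop_config.json"
    # Pretend another server was already configured.
    demo_path.write_text(
        json.dumps({"mcpServers": {"other_tool": {"command": "echo"}}}),
        encoding="utf-8",
    )
    add_mcp_server(demo_path, "unstract_tool", unstract_server)
    saved = json.loads(demo_path.read_text(encoding="utf-8"))

print(sorted(saved["mcpServers"]))
```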

Restart Claude Desktop for changes to take effect.

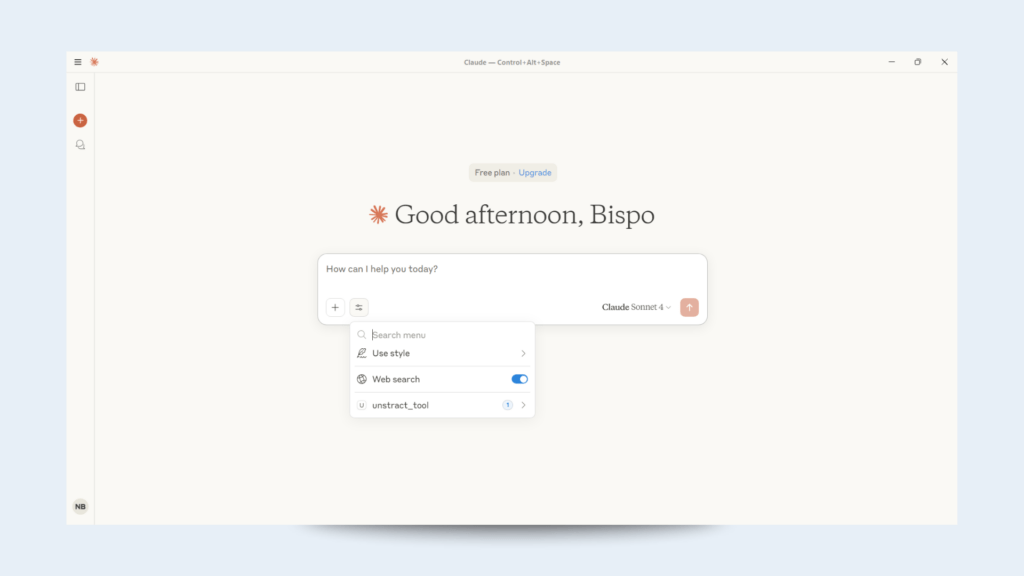

After restarting, create a new chat and you should have access to the unstract_tool:

You can type the following prompt:

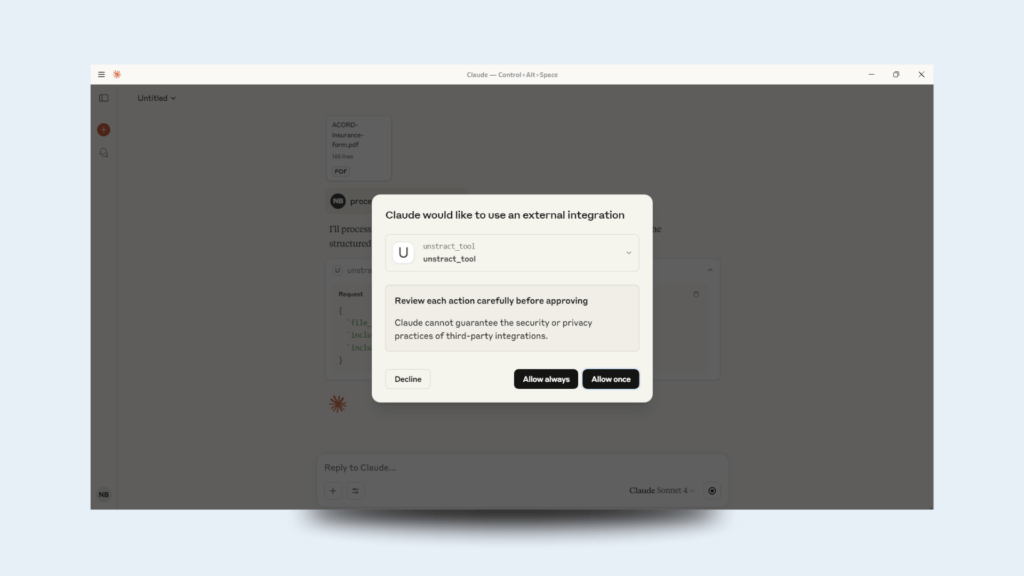

process the document at /tmp/ACORD-Insurance-form.pdf

On the first use of the unstract_tool, Claude Desktop will ask for confirmation:

You can allow always or only once, according to your preferences.

After processing, you will have the following output:

Use Case 3: Custom Python Script

Another way to use the Unstract MCP server is from a Python script. The script launches the server with StdioServerParameters from the MCP Python SDK and uses the LangChain MCP adapters to expose the server’s tools to a LangGraph agent.

In this example, we will also use a Mistral AI chat model. You will need a Mistral AI API key, which can be generated in the ‘API Keys’ section of the Mistral AI admin panel.

Let’s start by initializing a uv project:

uv init .

Create a virtual environment and activate it:

uv venv

.venv\Scripts\activate

Then install the requirements:

uv pip install -r requirements.txt

For reference, the requirements are:

langchain-mistralai

python-dotenv

langchain-mcp-adapters

langgraph

Next, create a .env file with the following entries:

MISTRALAI_API_KEY=<YOUR_MISTRALAI_API_KEY>

UNSTRACT_API_KEY=<YOUR_UNSTRACT_API_KEY>

API_BASE_URL=<YOUR_API_BASE_URL>

Make sure to replace them with your appropriate values.
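A missing or empty variable tends to surface later as a confusing Docker or authentication error, so it can help to check the three values up front. The sketch below is one way to do that; require_env is a hypothetical helper, and the demo values it sets are placeholders so the snippet runs on its own.

```python
import os
from typing import Dict, Iterable

REQUIRED_VARS = ("MISTRALAI_API_KEY", "UNSTRACT_API_KEY", "API_BASE_URL")


def require_env(names: Iterable[str] = REQUIRED_VARS) -> Dict[str, str]:
    """Return the named environment variables, failing fast if any is empty."""
    values = {name: os.environ.get(name, "").strip() for name in names}
    missing = [name for name, value in values.items() if not value]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return values


# Demo only: placeholder values so the check passes when run standalone.
# In a real script, the values would come from the .env file instead.
os.environ.setdefault("MISTRALAI_API_KEY", "demo-mistral-key")
os.environ.setdefault("UNSTRACT_API_KEY", "demo-unstract-key")
os.environ.setdefault("API_BASE_URL", "https://example.invalid/deployment/api/")

settings = require_env()
print(sorted(settings))
```

In a script that loads the .env file with python-dotenv, the call to require_env() would go right after load_dotenv().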

Then create the main.py file:

# Imports
import asyncio
import os

from dotenv import load_dotenv
from langchain_mcp_adapters.tools import load_mcp_tools
from langchain_mistralai import ChatMistralAI
from langgraph.prebuilt import create_react_agent
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Load environment variables from the .env file
load_dotenv()

# Initialize the model
model = ChatMistralAI(model="mistral-large-latest", api_key=os.getenv("MISTRALAI_API_KEY"))

# Server parameters for launching the MCP server in Docker (Windows paths)
server_params = StdioServerParameters(
    command="C:\\Program Files\\Docker\\Docker\\resources\\bin\\docker.exe",
    args=[
        "run",
        "-i",
        "--rm",
        "-v",
        "d:\\:/tmp",  # Windows path mapped to /tmp in the container
        "-e", "UNSTRACT_API_KEY",
        "-e", "API_BASE_URL",
        "unstract/mcp-server",
        "unstract",
    ],
    env={
        "UNSTRACT_API_KEY": os.getenv("UNSTRACT_API_KEY"),
        "API_BASE_URL": os.getenv("API_BASE_URL"),
    },
)


# Define the chat function
async def chat_with_agent():
    # Start the MCP server process and connect to it over stdio
    async with stdio_client(server_params) as (read, write):
        # Open a client session on top of the stdio streams
        async with ClientSession(read, write) as session:
            # Perform the MCP initialization handshake
            await session.initialize()

            # Load the tools exposed by the server
            tools = await load_mcp_tools(session)

            # Create the agent
            agent = create_react_agent(model, tools)

            # Conversation history, starting with the system prompt
            messages = [
                {
                    "role": "system",
                    "content": "You can use multiple tools in sequence to answer complex questions. Think step by step.",
                }
            ]

            print("Type 'exit' or 'quit' to end the chat.")
            while True:
                # Get the user's message
                user_input = input("\nYou: ")

                # Check if the user wants to end the conversation
                if user_input.strip().lower() in {"exit", "quit"}:
                    print("Goodbye!")
                    break

                # Add the user's message to the conversation history
                messages.append({"role": "user", "content": user_input})

                # Invoke the agent with the full message history
                agent_response = await agent.ainvoke({"messages": messages})

                # Get the agent's reply (the last message in the response)
                ai_message = agent_response["messages"][-1].content

                # Store the reply under the assistant role, not as a system message
                messages.append({"role": "assistant", "content": ai_message})

                # Print the agent's reply
                print(f"Agent: {ai_message}")


# Run the chat function asynchronously
if __name__ == "__main__":
    asyncio.run(chat_with_agent())

Here is a description of the major components used in the code:

Docker-Based MCP Server Setup:

- Runs the unstract/mcp-server Docker container.

- Mounts your D:\ drive as /tmp in the container (this is in Windows, adjust for Linux).

- Passes environment variables (UNSTRACT_API_KEY, API_BASE_URL) into Docker.

This example is for Windows; for Linux, adjust the command and path, for example:

server_params = StdioServerParameters(
    command="/usr/local/bin/docker",
    args=[
        "run",
        "-i",
        "--rm",
        "-v",
        "/tmp:/tmp",
        "-e", "UNSTRACT_API_KEY",
        "-e", "API_BASE_URL",
        "unstract/mcp-server",
        "unstract",
    ],
    env={
        "UNSTRACT_API_KEY": os.getenv("UNSTRACT_API_KEY"),
        "API_BASE_URL": os.getenv("API_BASE_URL"),
    },
)

Chat Loop Function:

- Launches and connects to the MCP Docker container via stdio.

- Opens a session for communication with the MCP server.

- Initializes the MCP session.

- Loads available tools (e.g., unstract_tool).

- Constructs a LangGraph agent that can call tools step by step to answer questions.

Conversation Loop:

- Starts with a system prompt instructing the agent to think step by step.

- Reads user input.

- If the user types exit or quit, the loop ends.

- Sends the full conversation history to the agent.

- The agent uses the model + available tools to answer.

- Extracts the agent’s latest reply.

- Adds it to the conversation history for context.

Now you can ask the AI to process documents. Let’s revisit the insurance form example.

You can run the script with:

uv run main.py

Then process the document:

You: process the document at /tmp/ACORD-Insurance-form.pdf

Agent: The structured data extracted from the file at `/tmp/ACORD-Insurance-form.pdf` is as follows:

- **Line of Business:**

- BOILER & MACHINERY: $9000

- BUSINESS AUTO: $89900

- COMMERCIAL PROPERTY: $900

- MOTOR TRUCKERS: $3000

- **Personal Details:**

- Name: John Updike

- Phone: (555) 674 9940

- Email: john@surelogic.com

As you can see, the agent uses the unstract_tool to process the document and extract the JSON, which the Mistral AI model then formats for the output.

Conclusion

In this guide, we explored the foundations of the Model Context Protocol ecosystem—how standardized discovery, invocation, and context propagation enable seamless AI–tool integrations.

We saw that by acting as the central “tool provider,” an MCP server simplifies everything from prompt orchestration to multi-turn session tracking.

Unstract’s MCP Server distinguishes itself by embedding its high-accuracy extraction engine, no-code Prompt Studio, and robust observability into a single, compliant service that works effortlessly with any MCP host.

By leveraging Unstract’s MCP Server, organizations unlock a new level of productivity: data engineers can query PDFs and contracts directly from IDEs, analysts can run natural-language extractions in chat interfaces, and prompt engineers can iterate on workflows without touching code.

All of this happens within your existing tools, dramatically reducing context-switching and accelerating time-to-value.

Ready to experience it for yourself?

Spin up an Unstract MCP Server instance, connect it to your preferred host (Cursor, Claude Desktop, LangChain, and more), and start extracting structured insights from your documents in minutes.

